Research Ideas and Outcomes

Grant Proposal

Corresponding author: Tony Ross-Hellauer (tross@know-center.at)

Received: 07 Dec 2022 | Published: 08 Dec 2022

© 2022 Tony Ross-Hellauer, Thomas Klebel, Alexandra Bannach-Brown, Serge P.J.M. Horbach, Hajira Jabeen, Natalia Manola, Teodor Metodiev, Haris Papageorgiou, Martin Reczko, Susanna-Assunta Sansone, Jesper Schneider, Joeri Tijdink, Thanasis Vergoulis

This is an open access article distributed under the terms of the Creative Commons Attribution License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Citation:

Ross-Hellauer T, Klebel T, Bannach-Brown A, Horbach SP, Jabeen H, Manola N, Metodiev T, Papageorgiou H, Reczko M, Sansone S-A, Schneider J, Tijdink J, Vergoulis T (2022) TIER2: enhancing Trust, Integrity and Efficiency in Research through next-level Reproducibility. Research Ideas and Outcomes 8: e98457. https://doi.org/10.3897/rio.8.e98457

Abstract

Lack of reproducibility of research results has become a major theme in recent years. As we emerge from the COVID-19 pandemic, economic pressures and exposed consequences of lack of societal trust in science make addressing reproducibility of urgent importance. TIER2 is a new international project funded by the European Commission under their Horizon Europe programme. Covering three broad research areas (social, life and computer sciences) and two cross-disciplinary stakeholder groups (research publishers and funders) to systematically investigate reproducibility across contexts, TIER2 will significantly boost knowledge on reproducibility, create tools, engage communities, implement interventions and policy across different contexts to increase re-use and overall quality of research results in the European Research Area and global R&I, and consequently increase trust, integrity and efficiency in research.

Keywords

Open Science, Reproducibility, Research quality, Epistemic diversity, Tools and practices, Policy intervention, EOSC, Reproducibility Networks, Community engagement

List of participants

A detailed list of all participants is given in the table below.

| No.* | Participant organisation name | Principal investigator | Country |
|---|---|---|---|
| 1 (Coordinator) | Know-Center GmbH Research Center for Data-Driven Business & Big Data Analytics (KNOW) | Tony Ross-Hellauer (m) | AT |
| 2 | Athena - Athena Research & Innovation Center In Information Communication & Knowledge Technologies (ARC) | Thanasis Vergoulis (m) | GR |
| 3 | Stichting VUmc (VUmc) | Joeri Tijdink (m) | NL |
| 4 | Aarhus Universitet (AU) | Jesper Schneider (m) | DK |
| 5 | Pensoft Publishing (PENSOFT) | Lyubomir Penev (m) | BG |
| 6 | GESIS-Leibniz-Institut Für Sozialwissenschaften EV (GESIS) | Hajira Jabeen (f) | DE |
| 7 | OpenAIRE AMKE (OpenAIRE) | Natalia Manola (f) | GR |
| 8 | Charite - Universitätsmedizin Berlin (Charite) | Alexandra Bannach-Brown (f) | DE |
| 9 | The Chancellor Masters & Scholars of The University of Oxford (UOXF) (Associated Partner) | Susanna-Assunta Sansone (f) | UK |
| 10 | Biomedical Sciences Research Center Alexander Fleming (FLEMING) | Martin Reczko (m) | GR |

Introduction

TIER2 is a new project funded by the European Commission under their Horizon Europe programme (call HORIZON-WIDERA-2022-ERA-01-41 - Increasing the reproducibility of scientific results*).

Lack of reproducibility of research results has become a major theme in recent years. As we emerge from the COVID-19 pandemic, economic pressures (increasing scrutiny of research funding) and exposed consequences of lack of societal trust in science make addressing reproducibility of urgent importance. TIER2 does so by selecting three broad research areas (social, life and computer sciences) and two cross-disciplinary stakeholder groups (research publishers and funders) to systematically investigate reproducibility across contexts. The project starts by thoroughly examining the epistemological, social and technical factors (epistemic diversity) which shape the meanings and implications of reproducibility across contexts. Next, we build a state-of-the-art evidence-base on existing reproducibility interventions, tools and practices, identifying key knowledge gaps. Then TIER2 will use (co-creation) techniques of scenario-planning, backcasting and user-centred design to select, prioritise, design/adapt and implement new tools/practices to enhance reproducibility across contexts. Alignment activities ensure tools are EOSC-interoperable, and capacity-building actions with communities (i.e., Reproducibility Networks) will facilitate awareness, skills and community-uptake. Systematic assessment of the efficacy of interventions across contexts will synthesise knowledge on reproducibility gains and savings. A final roadmap for future reproducibility, including policy recommendations, is co-created with stakeholders. Thus, TIER2 will significantly boost knowledge on reproducibility, create tools, engage communities, implement interventions and policy across different contexts to increase re-use and overall quality of research results in the European Research Area and global R&I, and consequently increase trust, integrity and efficiency in research.

We here present the TIER2 project “Description of Action”, which programmatically guides project activities. This text represents our initial project proposal as submitted to the EC, with only slight modifications (e.g., streamlining and clarification of some deliverables and milestones, removal of some administrative information for readability).

As TIER2’s success will depend on community engagement, we make this information public not only as part of our commitment to principles of open and reproducible research, but also to inform the community of our plans and invite interested parties to get involved.

1 Excellence

This section describes: (a) the rationale and objectives of TIER2, including how the project aims to go beyond the state-of-the-art (subsection 1.1), and (b) our overall methodology for the project (subsection 1.2).

1.1 Objectives and ambition

The European Research Area (ERA) is an ambitious effort to create a “single, borderless market for research, innovation and technology across the EU”. Launched in 2000 and “revitalised” in 2018, the current ERA Policy Agenda sets out 20 concrete actions for 2022-2024, including to “Enable Open Science, including through the European Open Science Cloud (EOSC),” “Upgrade EU guidance for a better knowledge valorisation”, “Strengthen research infrastructures” and “Build-up research and innovation ecosystems to improve excellence”. Enabling increased reproducibility*

Reproducibility is often claimed as a central principle of the scientific method (

As the world emerges from the COVID-19 pandemic, economic pressures (and hence increased scrutiny of research funding) and the exposed consequences of lack of societal trust in science make addressing such issues of urgent importance. Doing so will reduce inefficiencies, avoid repetition, maximise return on investment, prevent mistakes, and speed innovation to bring trust, integrity and efficiency to the ERA and global Research and Innovation (R&I) system in general. To set the scale of potential savings, according to

Increasing reproducibility is a multifaceted challenge, however. At the practical level, there is an urgent need for capacity-building to improve infrastructure/services, skills, communities, incentives and policies to enable and encourage reproducibility-maximising practices. At the theoretical level, moreover, three key information gaps currently hinder progress:

- Limited clarity on meanings, limits and implications of reproducibility across modes of knowledge production;

- Limited understanding of optimal reproducibility impact pathways to maximise gains and minimise costs in reproducibility reform;

- No coherent roadmap for implementing policies and practices to optimise reproducibility across the whole R&I system.

An influential EC scoping report Reproducibility of scientific results in the EU (

- Reproducibility is an opportunity, not a crisis;

- Epistemic diversity (variation across modes of knowledge production and socio-technical contexts) must be centred;

- Evidence must be systematised for informed policy across contexts;

- Action must be targeted holistically to boost capacity at all levels.

TIER2 will centre epistemic diversity by selecting three broad research areas (social, life and computer sciences) and two cross-disciplinary stakeholder groups (research publishers and funders) to systematically investigate reproducibility across contexts. In tandem with curated co-creation communities of these groups, we will design, implement and assess systematic interventions addressing key levers of change (tools, skills, communities, incentives, and policies). The project will start by thoroughly examining the epistemological, social and technical factors (epistemic diversity) which shape the meanings and implications of reproducibility across contexts (epistemic contexts). Next, we will build a state-of-the-art evidence-base on extent and efficacy of existing reproducibility interventions and practices, as well as an inventory of relevant tools, identifying key gaps in current knowledge. Then TIER2 will use (co-creation) techniques of scenario-planning, backcasting and user-centred design to select, prioritise, design/adapt and implement new tools to enhance reproducibility across contexts. Alignment activities will ensure tools are EOSC-interoperable, and capacity-building actions with communities (i.e., Reproducibility Networks) will facilitate awareness, skills and community-uptake. Systematic assessment of the efficacy of interventions across contexts will enable a synthesis of knowledge regarding reproducibility gains and savings. This will inform a final roadmap for future reproducibility, including policy recommendations co-created by stakeholders. Through these activities, TIER2 will significantly boost knowledge on reproducibility, create tools, engage communities, implement interventions and policy across different contexts to increase re-use and overall quality of research results in the ERA and beyond, and consequently increase trust, integrity and efficiency in research.

TIER2's core objectives, along with their related key activities and foreseen results, are outlined in Table

|

Objective 1 - CONCEPTUALISE: Create the conceptual & evidential framework for the project |

|

|

Key activities |

|

|

Key results |

|

|

Relation to call |

“Creating an open knowledge base of results, methodologies & interventions on the drivers and consequences of reproducibility for the R&I system; and to fill the main gaps in such knowledge” |

|

Objective 2 – DESIGN: Co-create interventions for improved reproducibility across contexts |

|

|

Key activities |

|

|

Key results |

|

|

Relation to call |

“[F]ill the main gaps in … knowledge”; “Find … solutions and best-practices to increase the reproducibility of research, including through the more systematic integration of sex and gender as variables” |

|

Objective 3 – IMPLEMENT: Drive change through community-driven stakeholder development & piloting of new interventions & tools for reproducibility |

|

|

Key activities |

|

|

Key results |

|

|

Relation to call |

“Develop, validate, pilot and deploy practices and practical tools for funders, publishers and scientists”; “fill the main gaps in … knowledge”; “experiment and mainstream concrete solutions and best-practices to increase the reproducibility of research” |

|

Objective 4 – ASSESS: Synthesise findings from across the project using impact pathway logics & econometric analysis to validate the framework |

|

|

Key activities |

|

|

Key results |

|

|

Relation to call |

“Determine how increased reproducibility generates gains and savings in the R&I process and improve overall performance - alongside the demonstrated positive effects on their quality, integrity and trust-worthiness” |

|

Objective 5 – RECOMMEND/REFLECT: Co-create a cohesive roadmap for future developments |

|

|

Key activities |

|

|

Key results |

|

|

Relation to call |

“Assist further policy development, based on scoping work by the Commission. While solutions should be applicable to Europe, attention should be paid to reproducibility in global science.”; “It is expected that the funded action(s) will adhere to best practices in open science and reproducibility (e.g., re-use existing results, fully document the research process), and provide a final reflection based on their own experience at the forefront of reproducibility” |

|

Objective 6 – NETWORK/EMPOWER: Equip researchers, funders, publishers & others with the skills, connections & resources to exploit state-of-the-art guidance, tools & services |

|

|

Key activities |

|

|

Key results |

|

|

Relation to call |

“Creating an open knowledge base of results, methodologies and interventions on the drivers and consequences of reproducibility for the R&I system; and to fill the main gaps in such knowledge”; “promote uptake, greater collaboration, and increased alignment of the activities of stakeholders - scientific and technical communities, publishers and funders among others - to increase reproducibility” |

The ambition of TIER2 is to increase trust, integrity and efficiency in research through next-level reproducibility tools, practices and policies across diverse epistemic contexts. We will achieve this by:

- Taking stock of existing knowledge/evidence, and clarifying the meanings/implications of reproducibility across epistemic contexts.

- Building capacity and innovating new EOSC-native tools and practices for funders, publishers and researchers through community-led pilots addressing infrastructure/services, skills, communities, incentives and policies.

- Enumerating gains and savings to build a common understanding and roadmap for promoting and monitoring reproducibility impact pathways across epistemic contexts.

Thereby, TIER2 will enhance reproducibility beyond the state-of-the-art in diverse ways:

- Deepened understanding of reproducibility across modes of knowledge production: epistemic diversity is at the core of TIER2. Simply put, profound differences in the aims and methods of knowledge production have deep implications for the types of reproducibility that can/should be expected. Thus far, the conversation on reproducibility has been dominated by a relatively narrow section of this spectrum (e.g., behavioural and clinical sciences). TIER2, through its commitment to first understanding these differences and then studying them in depth via a cross-disciplinary case-study approach will greatly expand this understanding.

- Network & capacity-building: TIER2 will harness network effects by connecting existing networks of researchers, funders and publishers. TIER2’s activities will be a keystone in furthering development of reproducibility communities-of-practice, especially by linking the national Reproducibility Networks (grassroots local networks embedded at individual institutions, already present in the UK, Finland, Germany, Italy, Norway, Portugal, Slovakia, Sweden and Switzerland) to higher-level networks of funders (e.g., Science Europe, RDA Research Funders IG, Open Research Funders Group), publishers' associations (e.g., STM Association, OASPA, EASE) and infrastructures (e.g., EOSC, ESFRIs, OpenAIRE). These actions will sustainably spur collective action amongst major stakeholders. (For the full list of stakeholders, see section 2.1.)

- Vision & roadmap for future reproducibility: TIER2 will work with communities of researchers, funders and publishers to envisage future optimal conditions for reproducibility in their contexts and use back-casting to prioritise what types of tools and innovations are necessary for this future of reproducibility. All pilot activities will be implemented to enable maximum assessment of efficacy, and we will synthesise all findings into our framework of epistemic diversity. In a final stage, we will use innovative methods of co-creation (modified Delphi method) to collaboratively construct a practical roadmap for future actions amongst researchers, funders, and institutions.

- Next-level EOSC-native reproducibility tools & practices: EOSC aims at seamless access across infrastructures to support a 'Web of FAIR Data and services' for science in Europe. FAIR (Findable, Accessible, Interoperable and Reusable) research objects are a cornerstone of reproducibility (Wilkinson et al. 2016). TIER2 will create tools and practices designed to be interoperable with EOSC from the start, building on existing key elements of the EOSC ecosystem (e.g., OpenAIRE, and FAIR-enabling components connected to EOSC science clusters, such as FAIRsharing). TIER2 will seamlessly enrich EOSC’s range of value-added services to increase reproducibility and reuse. Table 3 below summarises the provisional range of tools to be potentially developed in TIER2.

| Tool | TRL (start/end) | Lead | Description |
|---|---|---|---|
| Reproducibility Hub (incl. checklists) | * | VUmc | Training, skills and information resource for researchers, publishers and funders. Building on: Embassy of Good Science (VUmc/TRL 9) |
| Reproducibility Management Plan tool | 2 to 5 | OpenAIRE | New concept to extend Data Management Plans to enable reproducible research. Building on: Argos DMP Tool (OpenAIRE/TRL 8), ROAL (ARC/TRL5), FAIRsharing (UOXF/TRL9), ResearchGraph (OpenAIRE/TRL9) |
| Reproducible research workflow tools | 6 to 8 | ARC | Containerised workflows to facilitate reproducibility and data/code reuse in social (lead: GESIS), life (lead: UOXF), and computer sciences (lead: ARC). Building on: SCHeMa (ARC, FLEMING/TRL8), Methods Hub (GESIS/TRL3), FAIRsharing, ROAL, OpenAIRE ResearchGraph |
| Data/code review workflow | 5 to 8 | KNOW | Streamlined publisher workflows for review of data/code to facilitate publishing checks in social, life and computer sciences. Building on: F1000 platform (F1000/TRL9), CODECHECK (TRL3), SCHeMa, ROAL, OpenAIRE ResearchGraph |
| Standards for threaded publications | 3 to 7 | KNOW | New common standards and best practice guidelines to enable links between connected research outputs - and associated meta-data descriptors (e.g., grant information; author and contributor details). Building on: F1000 platform, OpenAIRE ResearchGraph, FAIRsharing, Docmaps (KNOW/TRL6) |
| Funder Reproducibility Plan instrument | 0 to 9 | KNOW | New tool to help funders create a holistic plan to increase reproducibility of their results. Building on: RiPP (AU, VUmc/TRL8) |
| Reproducibility monitoring dashboard | 4 to 7 | ARC | Dashboard for funders to check levels of FAIRness and re-use of research objects from funded research. Building on: ROAL, FAIRsharing, Argos, OpenAIRE ResearchGraph |

1.2 Methodology

Here, we present the overall methodology for TIER2, including key concepts, methods and the project's own open and reproducible research practices.

1.2.1 Overall concept

In 2020 the EC commissioned a major review “Assessing the reproducibility of research results in EU Framework Programmes”.*

- The “crisis” narrative is unreflective of current reality. Our survey of H2020 beneficiaries found that only around a fifth perceived a significant crisis (much lower than the 2016 Nature survey). We believe this is possibly reflective of two phenomena: (1) the conversation on reproducibility has entered a new phase, (2) our sample, selected randomly from Principal Investigators of H2020 projects, possibly reflects a wider disciplinary scope than the Nature survey which overemphasised certain disciplines (e.g., biology comprised almost half).

- Understanding of the notion of “reproducibility” & the attitude towards reproducibility varies significantly by field. Epistemic and social factors must be taken into account in any policy actions designed to increase reproducibility. Qualitatively and quantitatively assessing documents and outputs (proposals, DMPs, publications, datasets) from 1000 EC projects, we found that practices associated with increased reproducibility (FAIR data, software/materials sharing, reporting standards) apply very differently across fields.

- Interventions to improve reproducibility are currently often targeted broadly, while much of the evidence emerges from distinct fields (esp. medicine/health, psychology). Work to understand issues of reproducibility has been predominantly led by select disciplines, especially psychology and clinical medicine (Cobey et al. 2022). However, these disciplines are only part of the funding landscape. More work is needed to systematise knowledge of which interventions are appropriate in which contexts to determine the impact pathways whereby interventions result in increased reproducibility (and the extent to which this is desirable in different contexts).

- Cultural factors (pressure to publish & lack of incentives), followed by training & lack of infrastructure, are perceived by researchers, journal editors & funders as the greatest barriers to reproducibility. Joined-up approaches which work across all levels, from technical and skills to norms and incentives, are required. In addition, researchers, publishers, funders and others (including infrastructure providers) all support the principles of reproducibility, yet the landscape of joint action is currently very diffuse. Increasing reproducibility (as a distinct strategic goal), for example, is not yet a high priority for journals or funders. Many diverse initiatives exist whose alignment would have powerful multiplier effects.

Building upon these findings, TIER2 proposes a programme of activities based on four key principles. We believe the reproducibility agenda must enter a new phase. If phase one is typified by the “crisis narrative”, narrow focus on specific fields, and piecemeal initiatives with limited alignment of strategic action across stakeholders and elements of research, phase two (TIER2) must be founded in the following:

- Reproducibility is an opportunity, not a crisis. Our finding that far fewer perceive reproducibility as a “significant crisis” confirms recent calls to treat enhanced awareness of reproducibility as an opportunity rather than a crisis (Munafò et al. 2022). Reframing the debate in this way will enable us to move beyond hyperbole to more considered analysis of which solutions work in which circumstances across the research enterprise.

- Epistemic diversity must be centred. Recent work by TIER2 Advisor Leonelli (2018) adds nuance to our understanding of reproducibility by highlighting the importance of “epistemic diversity” in shaping consequences for reproducibility across research contexts. Factors including degrees of control over environments, reliance on inferential statistics, precision of research aims, and reliance on interpretation, as well as technical, social and cultural factors, all have deep implications for the meanings, implications and even applicability of concepts of reproducibility across epistemic contexts. These factors must be better understood across research contexts to inform thorough analysis of potential gains and savings across R&I.

- Evidence must be systematised for informed policy across contexts. Acknowledging that reproducibility has very different meanings and consequences across epistemic, social, and technical contexts (epistemic contexts), it is essential that any analysis of gains and savings be rooted in an understanding of how intervention impact pathways vary according to these contexts. Not all impacts will be positive, and trade-offs/unintended consequences are to be expected. For example, Vazire (2018) suggests that although increased reproducibility may raise productivity in general, productivity may be reduced in some subfields. At the same time, persistent structural inequalities and biases, as well as mechanisms of cumulative advantage within research, may mean that uncritical policies for transparency and openness have unintended negative consequences which compound inequities (Ross-Hellauer 2022, Ross-Hellauer et al. 2022). We hence fully agree with the EC reproducibility scoping report that we must “[d]evelop policies that support communities at different levels of maturity, not only advanced disciplines or countries” (DG-RTD 2020) (Fig. 2).

- Action must be targeted holistically to boost capacity at all levels. Fostering a culture of maximal reproducibility will require concerted action across levels of research cultures: creating tools (infrastructures and services) to make it possible, equipping researchers and others with skills to make it easy, networking communities to make reproducible practices the norm, revising incentives to make it rewarding, and implementing policies (where helpful) to make it necessary. Much work is already in place across all these elements. Linking and building on such initiatives is an essential task.

Three concepts are crucial to TIER2’s approach to implementing these principles: key impact pathways, epistemic diversity and our case-study approach.

1.2.1.1 Key impact pathways

TIER2 will bring together theory and evidence to design a framework that defines gaps and prioritises new approaches, based upon the Key Impact Pathways (KIPs) methodology currently being operationalised to monitor R&I impact in Horizon Europe, and that TIER2 partners KNOW and ARC will employ in the “PathOS: Open Science Impact Pathways” project commencing Sept 2022.*

Impact pathway methods are grounded in theory-based approaches (

- identify key elements of pathways (input-output-outcome-impact) under the organisational prism of needs and objectives,

- describe how they are linked and work together,

- develop metrics for impact, and

- measure and test on selected cases.
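The four steps above can be sketched as a small data structure. This is a minimal illustration only, not the KIPs methodology itself: the class names, the example elements and the metric labels are all hypothetical and do not come from the project.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Stage labels taken from the text: input-output-outcome-impact.
STAGES = ("input", "output", "outcome", "impact")


@dataclass
class PathwayElement:
    name: str
    stage: str                    # one of STAGES
    metric: Optional[str] = None  # hypothetical indicator; None = not yet defined


@dataclass
class ImpactPathway:
    context: str                  # e.g. an epistemic context
    elements: Dict[str, PathwayElement] = field(default_factory=dict)
    links: List[Tuple[str, str]] = field(default_factory=list)

    def add(self, element: PathwayElement) -> None:
        # Step 1: identify key elements of the pathway.
        if element.stage not in STAGES:
            raise ValueError(f"unknown stage: {element.stage}")
        self.elements[element.name] = element

    def link(self, source: str, target: str) -> None:
        # Step 2: describe how elements are linked (links need not be linear).
        if source not in self.elements or target not in self.elements:
            raise KeyError("both ends of a link must be known elements")
        self.links.append((source, target))

    def unmeasured(self) -> List[str]:
        # Step 3: elements that still lack a metric.
        return [n for n, e in self.elements.items() if e.metric is None]

    def chains(self) -> List[List[str]]:
        # Step 4 (preparation): enumerate input-to-impact chains to test on cases.
        found: List[List[str]] = []

        def walk(path: List[str]) -> None:
            node = path[-1]
            if self.elements[node].stage == "impact":
                found.append(list(path))
                return
            for src, dst in self.links:
                if src == node and dst not in path:  # skip cycles
                    walk(path + [dst])

        for name, element in self.elements.items():
            if element.stage == "input":
                walk([name])
        return found


# Hypothetical example values, for illustration only.
pathway = ImpactPathway(context="computer science")
pathway.add(PathwayElement("training budget", "input"))
pathway.add(PathwayElement("containerised workflow", "output", "workflows published"))
pathway.add(PathwayElement("code reuse", "outcome", "reuse count"))
pathway.add(PathwayElement("trust in results", "impact"))
pathway.link("training budget", "containerised workflow")
pathway.link("containerised workflow", "code reuse")
pathway.link("code reuse", "trust in results")
```

Calling `pathway.chains()` lists each causal chain from an input to an impact, and `pathway.unmeasured()` shows which elements still need a metric before testing.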

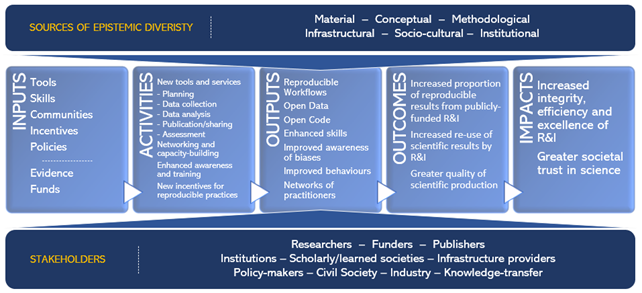

Charting the impact pathways requires that we describe the sequence of input-output-outcome-impact relations that show non-linear linkages and the steps from resources to more long-term impacts. It also entails developing narratives describing causal chains, including the effects of possible enabling factors and barriers. Starting from the key inputs of factors in research culture change, for each of our target domains (comp, life, soc sci), we will trace and prioritise the activities required to produce desired outputs, outcomes and impacts. From the description of the rationales and mechanisms linking the elements of reproducibility impact pathways across epistemic contexts, Fig.

1.2.1.2 Epistemic diversity

Reproducibility impact pathways will vary greatly due to epistemic diversity. Although, as already stated, concerns regarding reproducibility have been most vocally stated from a relatively narrow range of disciplines, they are increasingly addressed in other areas as well (

Reproducibility is not only affected by epistemic and methodological factors, however.

1.2.1.3 Case-study approach

Acknowledging the substantial epistemic diversity across research fields, and the need to better understand the relevance, meanings and implications of reproducibility across them, TIER2 proposes a comparative methodology which looks at reproducibility across such contexts with as much breadth and depth as resources allow. We therefore select three broad research domains (social sciences, life sciences, and computer sciences) for research(er) contexts, as well as two contexts which cut across disciplines (publishers and funders). The Horizon Dashboard indicates that our target domains (computer, life, social sciences) accounted for well over half of H2020 funding. Each of these broad communities is at a different stage of recognising reproducibility as a theme, and each faces different issues. Analysing them comparatively will enable greater understanding of such variation. We next outline the key issues facing each of these groups to contextualise TIER2’s priorities and methods outlined later (Table

|

Case 1: Reproducibility issues in social sciences |

|

Social sciences can be conceptualised as a very heterogeneous field, encompassing diverse epistemological and methodological approaches, working with various kinds of data including opinion polls, voting records, surveys, self-reported perceptions, behaviours, beliefs or attitudes, social network data, government statistics and indices, GIS data measuring human activity, and various forms of qualitative data, such as interview transcripts, field notes, and observational protocols. Reflecting this diversity, recognition of reproducibility as an issue greatly varies. While parts of psychology have been a dominant part of the ‘reproducibility crisis’ discussion since the start ( |

|

Case 2: Reproducibility issues in life sciences |

|

Life science is a very heterogeneous field with a number of disciplines, study and technology types. The data community in biology and medicine, however, is probably the most active one in creating data and metadata standards to support the reuse (including reproducibility) and sharing of the information, supported by strong international data mandates (e.g., 1996 Bermuda for genomics data, 2009 Toronto agreement for omics/clinical data). Much of the understanding of scientific transparency stems from the experience in bioinformatics, where the focus has been on information (incl. datasets, code, models and software) that is harmonised with respect to structure, format and annotation. Nowadays there are over 900 standards in the life sciences. Since the early 2000s grass-roots initiatives and standards organisation have worked to create:

Standardisation to enable FAIR data, which underpins reproducibility, is at the core of ELIXIR (European Life Sciences infrastructure), and part of its Interoperability Platform activities ( |

|

Case 3: Reproducibility issues in computer science |

|

Computer science research involves a large degree of determinism (high precision of goals, high dependence on statistics, total control on environment). In experimental work, high specification (far higher than in lab or other types of experimental research) of methods is theoretically possible since each computational action is logged ( |

|

Case 4: Reproducibility issues in research publishing |

|

In line with growing concerns about the quality and credibility of research and publishing processes, ensuring the reliability of published research has become increasingly important to publishers and journal editors over the past decade. Several approaches to incentivise or improve the reproducibility of the published record have been proposed. These include both pre- and post-submission measures, such as journal reporting guidelines, improved peer review practices and data availability requirements. However, recent studies have shown that, while improving, the number of journals explicitly demanding or enabling data sharing practices is still limited ( |

|

Case 5: Reproducibility issues in research funding |

|

Research funders have similar motivations to journal editors. Where research is open and reproducible, it helps maximise potential impact and return on investment (ROI). Funders of scientific research are well positioned to guide scientific discoveries by enabling and incentivising the most rigorous and transparent methods. There are numerous recommendations on how funders should act in order to increase reproducibility ( |

1.2.2 Detailed methodology

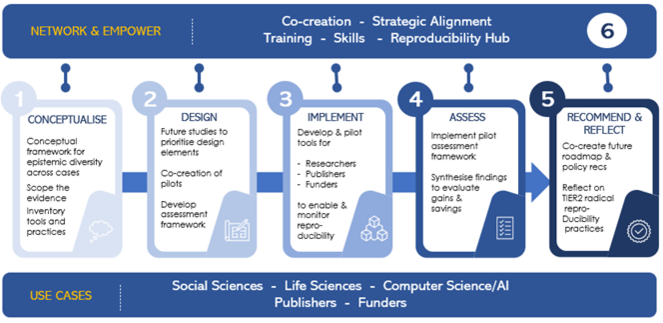

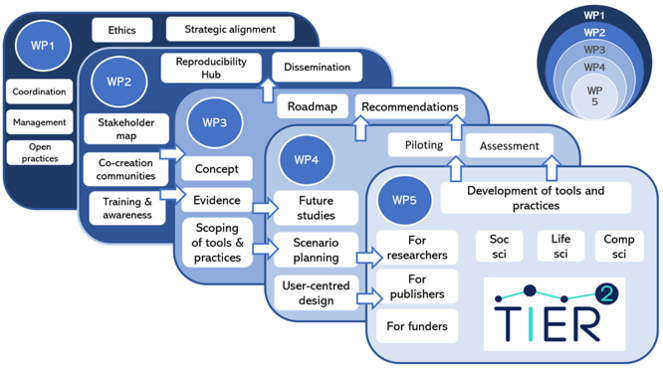

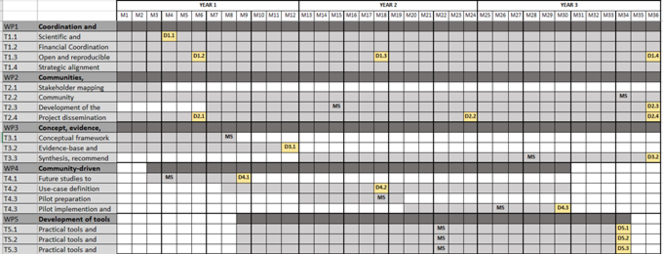

The TIER2 overall methodology, following six stages according to our stated objectives, is illustrated in Fig.

1.2.2.1 Methods for Objective 1: CONCEPTUALISE (conceptual/evidential framework)

Realised through WP3:

- Task 3.1. Conceptual framework for reproducibility across contexts (M1-M8)

- Task 3.2. Evidence-base and inventory of reproducibility tools and practices (M1-M12)

Our first major task within TIER2 will be to consolidate knowledge to date relating to reproducibility across epistemic contexts. Using desk research and three online focus groups with co-creation communities, we will map out factors influencing epistemic diversity across our case-study domains (life, social and computer sciences). Systematically elucidating pertinent epistemic and methodological factors for reproducibility across these contexts will provide the initial theoretical framework for TIER2. The output will be a scoping report centred around a matrix that maps different epistemological aims and methods to various dimensions of ‘reproducibility’, as well as pertinent framework conditions (e.g., political, ethical, social, legal) that may affect the uptake of reproducibility practices. This framework will enable analyses of diverse conceptions, roles and barriers of reproducibility and permit identification of relevant and targeted tools, irrespective of field.

Next, we will use a PRISMA-ScR ‘Scoping Review’ methodology to scope the literature to date to answer the question: “What tools and practices are suggested to improve reproducibility across these epistemic contexts, and what evidence exists regarding their efficacy?”. We will systematically search for key terms across academic databases (e.g., Scopus, Web of Science, OpenAlex, OpenAIRE) as well as for grey literature, including policy reports (via Overton.io) and searches of stakeholder/project websites and funder databases of outputs (e.g., EC CORDIS). Methods will be pre-registered (via Open Science Framework), including search protocols and data-charting strategies. In addition to evidence on the efficacy of reproducibility interventions, we will also compile an exhaustive list of tools and practices, classified according to the elements of the research lifecycle and the epistemic contexts to which they pertain. Finally, we will collect and visualise key reporting standards and best practices in use within the EOSC science clusters relevant to our case-study domains, especially EOSC-Life (life sci) and SSHOC (soc sci).
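As an illustration of the classification step described above, an inventory of tools and practices indexed by research-lifecycle element and epistemic context could be held in a simple structure like the one below. This is only a minimal sketch, not the project's data model: the class name `InventoryEntry`, the function `build_matrix`, the lifecycle-stage labels, and the classification of the example entries are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical controlled vocabularies; the project would define its own.
CONTEXTS = {"life", "social", "computer"}
STAGES = {"planning", "data collection", "analysis", "publication"}


@dataclass(frozen=True)
class InventoryEntry:
    """One tool, practice or standard in the (illustrative) inventory."""
    name: str
    kind: str            # e.g. "tool", "practice" or "standard"
    stages: frozenset    # lifecycle elements the entry pertains to
    contexts: frozenset  # epistemic contexts the entry pertains to

    def __post_init__(self):
        # Reject labels outside the controlled vocabularies.
        unknown = (self.stages - STAGES) | (self.contexts - CONTEXTS)
        if unknown:
            raise ValueError(f"unknown labels: {sorted(unknown)}")


def build_matrix(entries):
    """Index entry names by (lifecycle stage, epistemic context)."""
    matrix = defaultdict(list)
    for entry in entries:
        for stage in sorted(entry.stages):
            for context in sorted(entry.contexts):
                matrix[(stage, context)].append(entry.name)
    return dict(matrix)


# Placeholder entries; their classification here is assumed, not a finding.
entries = [
    InventoryEntry("ReproZip", "tool",
                   frozenset({"analysis"}), frozenset({"computer"})),
    InventoryEntry("DDI", "standard",
                   frozenset({"data collection"}), frozenset({"social"})),
    InventoryEntry("Pre-registration", "practice",
                   frozenset({"planning"}), frozenset({"life", "social"})),
]
matrix = build_matrix(entries)
```

Looking up `matrix[("planning", "social")]` then returns the names of all entries classified under that combination. A cell that is absent from the matrix marks a stage and context pair for which nothing has yet been recorded in the inventory.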

These strands will be synthesised in a Deliverable report “D1.1 Reproducibility Impact Pathways: State-of-play on methods, tools, practices to increase reproducibility across diverse epistemic contexts”, which uses the Key Impact Pathways approach outlined above to identify key areas for intervention and tools/practices upon which to build in the project. Synthesising and presenting current knowledge in this way will create a centralised resource which enables all stakeholders (including project partners) to orient themselves easily to the state-of-the-art. In addition to providing the general theoretical and evidential basis for assessing gains/savings of reproducibility in the project, this content will also be used within the Reproducibility Hub (knowledge resource created in WP2) and the Researcher Reproducibility Checklist and Reproducibility Management Plan development activities (WP5).

1.2.2.2 Methods for Objective 2: DESIGN (co-creation of interventions)

Realised through WPs 2, 4:

- Task 2.1. Stakeholder mapping (M1-M3)

- Task 2.2. Community development and coordination of co-creation activities (M1-M36)

- Task 4.1. Future studies to identify priorities from the stakeholder community to predict future of reproducibility and identify actionable steps (M3-M9)

- Task 4.2. Requirements procurement and design (M8-M30)

Co-creation, defined as “a transparent process of value creation in ongoing, productive collaboration with, and supported by all relevant parties, with end-users playing a central role" (

For the design phase, TIER2 will build on partner experience in successful co-creative methodologies in the SOPs4RI and ON-MERRIT projects to conduct five (co-creative) online scenario workshops with relevant stakeholders (8-10 stakeholders per workshop). In these workshops, we will work with stakeholders to:

- envision the ideal future scenario regarding reproducibility for each stakeholder group;

- use “backcasting” techniques (Inayatullah 2008) to identify the major building blocks needed to enable these future scenarios.

In a next round, we will use an online survey to prioritise which interventions for development and piloting are desirable and needed within subsequent TIER2 activities (further design in WP4 and development in WP5).

With these results, we can determine what contexts need more co-creation design in order to better equip researchers, funders and publishers with tools that promote reproducibility. Field notes and transcribed recordings will be used to create draft reports of the outcomes of each workshop, which will then be shared with participants for their further feedback. These priorities will then be carried forward to the use-case definition and design phase. Here, working closely with the developers from WP5, stakeholders will be engaged to define central use-cases for the envisioned tools/practices to be developed. For each case, a “design thinking” canvas-approach based on the JISC innovation canvas tool*

| Tool | TRL | Partner | Description |
|---|---|---|---|
| Argos | 9 | OpenAIRE | Argos is an open, extensible, and configurable machine-actionable tool developed to facilitate Research Data Management activities concerning the implementation of Data Management Plans (DMPs). |
| The Embassy | 9 | VUmc | The Embassy is a wiki-based platform for the research community to share experiences and insights about research integrity and ethics, continuously contributing to the development of good science. |
| FAIRsharing | 9 | UOXF | FAIRsharing is a curated registry, tool and service for data and metadata standards, inter-related to repositories and data policies. It hosts 1600 standards, 1900 databases, and 160 policies. |
| da\|ra | 9 | GESIS | da\|ra is a DOI registration service in Germany for social science and economic data, in cooperation with DataCite. It has more than 699k DOI registrations, and ~4m DOI resolutions per year. |
| gesisDataSearch | 7 | GESIS | gesisDataSearch enables searching for research datasets based on a periodically crawled index. It has more than 100,000 datasets with approximately 600 new users per month. |
| GESIS Search | 9 | GESIS | Integrated search across different Social Science data collections at GESIS. It has 6,500 datasets, 13,000 survey variables, 400 measurement instruments, and 107,000 publications. |
| MethodHub* | 2 | GESIS | MethodHub builds on containerisation technologies and digital lab notebooks to facilitate social science data analysis and its reproducibility, to find, learn, and experiment with computational methods. |
| OpenAIRE Research Graph | 9 | OpenAIRE | OpenAIRE Research Graph aggregates research data properties (metadata, links) for funders, organisations, researchers, research communities and publishers to interlink information. |
| ROAL* | 5 | ARC | ROAL (ReprOducibility Assessment tooLkit) streamlines assessment of reproducibility by automating the identification of datasets, their classification in terms of re-use, and the extraction of metadata. |
| SCHeMa | 8 | ARC | SCHeMa facilitates reproducibility of computational experiments on heterogeneous clusters, exploiting containerisation, experiment packaging, workflow description languages, and Jupyter notebooks. |

1.2.2.3 Methods for Objective 3: IMPLEMENT (community-driven development & piloting)

Realised through WPs 2, 4, 5:

- Task 2.2. Community development and coordination of co-creation activities (M1-M36)

- Task 5.1. Practical tools and practices for researchers (M9-M34)

- Task 5.2. Practical tools and practices for publishers (M9-M34)

- Task 5.3. Practical tools and practices for funders (M9-M34)

- Task 4.3. Pilots preparation activities (M13-M19)

- Task 4.4. Pilot implementation and assessment (M20-M30)

In the tool development activities in WP5, we will use an agile early/rapid prototyping methodology with short development cycles (release early/often), ensure constant evaluation from domain experts, regular cycles of user-feedback, flexible experimentation with various ideas and directions, and increased collaborative activities. Design sketches and static mock-ups will be produced rapidly to test new concepts and receive critical feedback. Working prototypes with limited functionality (e.g., baseline algorithms, smaller data) will be rapidly developed to convey a more realistic impression of their operation. After feedback, the prototypes will be iteratively improved, until the design is gradually finalised, and the underlying algorithms are configured, customised and optimised. In their development, all tools will aim to be optimised for integration into the European Open Science Cloud, building especially on our existing EOSC links to enrich EOSC’s range of value-added services to increase reproducibility and reuse. These tools will then be piloted in WP4 (Tasks 4.3, 4.4). Table

Provisional list of TIER2 pilot activities (to be refined/adapted in line with co-creation methods).

|

Pilot 1. Reproducibility Checklist (for researchers in social, life, computer sciences) [lead: KNOW] |

||

|

Aim: To customise & evaluate the Reproducibility Checklist created in T5.1.1 for researchers across case-study domains. Activities: Conduct user-testing & surveys for the use, refinement, & enrichment of the Reproducibility Checklist by stakeholders in

|

Instruments: Various metadata standards for disciplines & digital objects via FAIRsharing; tool inventory (T3.2) & advice on skills (T2.2) included in the Reproducibility Hub (WP2); GESIS MethodsHub, da|ra |

Expected outcomes: Detailed user-testing & feedback (survey) from stakeholders; Production-level Reproducibility Checklist tool, available via Reproducibility Hub and connected to FAIRsharing; Reproducibility Checklist registered in FAIRsharing, as a new ‘reporting guideline’, and DOI assigned. KPIs: At least 24 participants in the surveys; At least TRL8 for the Reproducibility Checklist tool |

|

Pilot 2. Reproducibility management plans (RMPs) (for researchers in social, life, computer sciences, & funders) [lead: OpenAIRE] |

||

|

Aim: Customise & evaluate prototype “Reproducibility Management Plan” functionalities in case-study domains. Activities: User-testing/surveys for definition, core functionalities & initial prototyping of RMP tool amongst:

|

Instruments: Argos DMP tool (see T5.1.2 & 5.1.3); PENSOFT publishing platforms; FAIRsharing; GESIS MethodsHub, Go-inter |

Expected outcomes: Core RMP functionalities pilot tested KPIs: At least 15 HE projects in soc, life, comp sci participating in the tests; Min 3 funders pilot test; At least TRL5 for the RMP tool |

|

Pilot 3. Reproducible workflows (for researchers in social, life, computer sciences) [lead: ARC] |

||

|

Aim: Customise & evaluate tools/practices for reproducible workflows in 3 epistemic contexts. Activities: (1) Adapt & extend SCHeMa protocol to further facilitate data/code reproducibility in life sci (e.g., scRNA-seq analysis workflow provided by FLEMING); (2) Extend SCHeMa to

|

Instruments: SCHeMa (see T5.1-activity 5.1.3); RO-Crates; FAIRsharing; GESIS Search; GESIS MethodsHub; standards (e.g., DOME recommendations for life science; DDI for social science); RDMKit; FAIR Cookbook; (ReproZip for computational experiment packaging) |

Expected outcomes: Use-cases implemented to extend SCHeMa containerisation workflows; Consolidated knowledge on applicability of SCHeMa for new domains; Mutual learning across research communities KPIs: Min 3 use cases for SCHeMa expansion; SCHeMa min TRL6 for new research domains; Min 25 participants across epistemic contexts (mutual learning activities) |

|

Pilot 4. Workflows to review research datasets & code (for publishers) [lead: UOXF] |

||

|

Aim: (1) Implement & test workflows for data/code, including advanced screening & review of datasets & software regarding reproducibility; (2) Scope common system of ‘stamps’ or validity marks to indicate that work has been checked, validated and/or reused. Activities: (1) user-testing & survey with reviewer-editor pairs; (2) cross-stakeholder focus groups to scope essential features of “validity marks”; (3) piloting workflows to assess reproducibility (based on containerisation & experiment packaging technologies) & review method/model generalisability in CS/AI conferences |

Instruments: Workflows/tools developed in T5.2.1 (building on ARC’s ROAL toolkit); containerisation elements (RO-Crate, Docker, SCHeMa); FAIRsharing; GESIS Data search (soc sci use-case) |

Expected outcomes: New workflows for review of data/code; Approaches piloted with cross stakeholder fora (publishers, researchers); Scoping report on requirements for common system of validity marks; Testing generalisability workflows in CS/AI conferences KPIs: Min 30 reviewer-editor pairs participate in user-testing/surveys; Min 3 cross-stakeholder focus groups; 1 scoping report for ‘stamps’ or validity marks |

|

Pilot 5. Threaded (linked) publications (for publishers, plus researchers in social, life, computer sciences) [lead: KNOW] |

||

|

Aim: Investigate how models of threaded, linked related publications can support reproducibility in case-study domains (social, life, computer science) Activities: Focus groups & user-testing with researchers using current platforms (e.g., Octopus, ResearchEquals) to iterate required common models, standards, drivers & barriers to mainstreaming of threaded publication models |

Instruments: Octopus, ResearchEquals, F1000 platform, plus other potential tools/standards for incorporation (RO-Crates, Nanopublications, Docmaps, GESIS MethodHub & Knowledge Graph) |

Expected outcomes: Enhanced understanding of common models, standards, drivers & barriers for threaded publication models KPIs: 1 focus group to be implemented; Min two threaded publications platforms tested; At least 24 participants in the surveys |

|

Pilot 6. New models of publishing & review - focusing on openness & transparency & mandatory data deposition & availability (for publishers, plus researchers in social sciences & humanities) [lead: KNOW] |

||

|

Aim: Investigate how (1) the F1000 model (publish then review, with maximally open data & review), & (2) models for registered reports, transfer to humanities & social science research contexts. Activities: (1) Study how SSH users of the Open Research Europe platform & the new Routledge Open Research (both based on the F1000 platform) experience this new publishing model; (2) user-testing & data analysis of Taylor & Francis SSH research published as registered reports |

Instruments: Open Research Europe & Routledge-branded platform for SSH research (both based on F1000 Platform); Taylor & Francis journals piloting registered reports workflows |

Expected outcomes: New publication workflows for SSH research, including new guidelines on “data” deposition; Enhanced understanding of potential role of registered reports in combating publication bias in SSH. KPIs: At least 20 users participating in the user-testing; At least 10 registered reports published in the context of testing |

|

Pilot 7. Reproducibility Promotion Plans (for funders) [lead: VUmc] |

||

|

Aim: Produce practical advice for funders on how to create a plan to boost the reproducibility of their funded results. Activities: Pilot the RPP tool with at least two funders (one will be ARC’s ARCHIMEDES) to create a “Reproducibility Promotion Plan” & examine the issues involved in creating & implementing such a plan. |

Instruments: “Reproducibility Promotion Plan” tool developed in T5.3.1 |

Expected outcomes: Validated “Reproducibility Promotion Plan” tool made available to the wider funder community (via Reproducibility Hub) KPIs: At least 2 funders to create RPPs; At least 1 RPP to be tested by each funder |

|

Pilot 8. Reproducibility monitoring dashboard (for funders) [lead: ARC] |

||

|

Aim: Development & testing of tools to enable funding agencies to track & monitor reusability of research artefacts (datasets, software, tools/systems, etc.) created in funded projects. Activities: Implement dashboard & conduct user-testing with funder communities |

Instruments: ROAL; FAIRsharing, OpenAIRE Knowledge Graph |

Expected outcomes: Validated prototype Funder Reproducibility monitoring dashboard KPIs: At least 8 funder representatives perform user testing, incl. ARC’s ARCHIMEDES scheme for AI research |

1.2.2.4 Methods for Objective 4: ASSESS (evaluation of pilots & findings synthesis)

Realised through WPs 2, 3, 4:

- Task 2.2. Community development and coordination of co-creation activities (M1-M36)

- Task 4.4. Pilot implementation and assessment (M20-M30)

- Task 3.3. Synthesis and recommendations (M13-M36)

A systematic framework for assessment of pilot activities will be developed alongside the design activities (Objective 3) and implemented according to the steps to impact pathway evaluation proposed by (

- What would success look like (what would intended outcomes be)?

- What factors influence achieving each outcome?

- Which factors can the project influence?

- Which can it not?

- Which factors, in which ways, will be targeted for change to bring about desired outcomes?

- What performance information should be collected (including to assess the ways in which reproducibility brings gains or savings)?

- How can this information be obtained?

Following (

1.2.2.5 Methods for Objective 5: RECOMMEND/REFLECT (roadmap & policy guidelines/recommendations)

Realised through WPs 1, 2, 3:

- Task 2.2. Community development and coordination of co-creation activities (M1-M36)

- Task 1.3. Open and reproducible research practices (M1-M36)

- Task 3.3. Synthesis and recommendations (M13-M36)

- Task 2.4. Project dissemination and communication (M1-M36)

Next, these synthesis activities will inform the creation of policy briefs, guidelines and recommendations for funders, publishers, research institutions and researchers. Firstly, we will reflect upon the challenges, costs and benefits resulting from reproducibility and transparency approaches in the context of TIER2, leading to a final “autoethnographic” self-reflection report that feeds into the synthesis of results obtained from the empirical work in WPs 3-5. As detailed below (sec. 1.2.2.7 on Open Science and Research Data Management) TIER2 aims to be the change we seek by fostering a maximally open, transparent and reproducible approach to the implementation of Horizon Europe projects (beginning from right now, by making our proposal publicly available at the time of submission*

We will then conduct co-creation workshops with individuals selected via purposive sampling from five stakeholder categories (researchers, funders/RFOs, institutions/RPOs, infrastructures, and umbrella bodies/networks, including those who participated in the approaches implemented) to iteratively create recommendations and policy guidelines on practices, joint action by stakeholders and future training priorities. This will use a co-creative, modified Delphi methodology that combines three rounds of anonymised surveys with four online consensus-seeking meetings to work with 30 community members. This methodology has recently been used by the Task 3.3 Leader (KNOW) to create the ON-MERRIT recommendations (

In parallel, we will engage in liaison activities to ensure maximum impact of the recommendations. A dissemination and communications strategy (Milestones) will guide this process, whereby the co-creation community will be engaged to disseminate feedback within networks, and key stakeholders will be engaged. We will work with major umbrella bodies (e.g., Science Europe, EUA), as well as funders, research institutions, infrastructures (e.g., ESFRI clusters, EOSC), and other networks (e.g., Reproducibility Networks, RDA groups). With the support of relevant national and supranational organisations (e.g., EUA, Science Europe) we will ensure that policy briefs are distributed to the senior management of RPOs, the research governance teams of RPOs, and other relevant organisations (e.g., EARMA, ENRIO). As a final validation step, we will seek endorsement of this vision from all the stakeholder groups mentioned. Success will be measured via the number of endorsing organisations, with a target KPI of at least 25 stakeholder endorsements.

1.2.2.6 Methods for Objective 6: NETWORK/EMPOWER (capacity-building on skills, connections, resources)

Realised through WPs 1, 2:

- Task 1.4. Strategic alignment activities (M1-M36)

- Task 2.2. Community development and coordination of co-creation activities (M1-M36)

- Task 2.3. Development of the Reproducibility Hub (M10-M36)

- Task 2.4. Project dissemination and communication (M1-M36)

In order to equip researchers, funders, publishers and others with the skills, connections and resources to exploit state-of-the-art guidance, tools and services, we will undertake capacity-building activities throughout the project. Three clusters of activities will particularly add capacity to address reproducibility issues in Europe and beyond.

(a) Training & awareness: In addition to the learning and networking multiplication-effects for stakeholders engaged in our many co-creation activities described above, TIER2 will undertake a range of activities to boost reproducibility skills and awareness. We will host events including a final Reproducibility Conference (Month 34) and a minimum of six “Reprohack” events (co-located with discipline-specific conferences) where researchers are engaged to reproduce the work of others.*

(b) Reproducibility Hub: Firstly, all evidence, tools and resources created via the activities described above will be collected and made easily available via our Reproducibility Hub, a sustainable open (minimum CC BY) knowledge base of results, methodologies and interventions on the drivers and consequences of reproducibility for the R&I system hosted at the Embassy of Good Science. The Embassy, hosted by TIER2 partner VUmc, is already a central resource for researchers and others (recently specifically mentioned in many EC funding calls), whose stated goal “to promote research integrity among all those involved in research” aligns with aims of reproducibility (some resources on the subject are already available). The Embassy’s existing functionalities for resources, training materials, and community-building already offer the core functions needed for the Reproducibility Hub, which will be created as an Embassy sub-site, hosting content created across TIER2. Users will themselves be able to add or update content via the Embassy’s Wiki functionalities. Particular highlights will be training modules and checklists, inventories of reproducibility tools and practices for specific research fields (life, social, and computer sciences) and stakeholders (publishers, funders, institutions), guidance on best practice, and interactive elements that allow users to exchange good practice and tools. Created iteratively throughout the project as results become available, this resource will fill the main gaps in current knowledge and provide a platform for exchange regarding reproducibility.

(c) Aligning & empowering networks: A core principle of TIER2 is that action to increase reproducibility must be targeted holistically to boost capacity at all levels. Fortunately, much action is already underway. The last ten years have seen huge investment in infrastructure and services to enable Open Science and Research Integrity. TIER2 includes key Open Science players, most prominently OpenAIRE, the European Open Science infrastructure present with partners in more than 30 countries. Partners AU and VUmc, meanwhile, are key players in Research Integrity with strong links to relevant organisations (e.g., ENRIO). VUmc is host to the Embassy of Good Science, a key RI training and community-building resource. Meanwhile, networks of publishers and funders consider the same questions. Of particular importance to TIER2’s success in networking and empowering communities are:

- Reproducibility Networks (RNs): Building from a model pioneered in the UK (UKRN), RNs now exist in many countries internationally. These are national networks of lead researchers who spearhead activities within institutions and especially support grassroots and early-career activities (e.g., ReproducibiliTea journal clubs). RNs coordinate sharing of best practices and organisation of joint training initiatives. Local networks include grassroots groups of researchers and institutions, and are supported by external stakeholders such as funders, publishers, and other scholarly organisations (incl. FAIRsharing). TIER2 will empower the RNs (including by fostering creation of three new RNs in “Widening Participation” countries) by funding establishment meetings (3 awards of 5k EUR made via an open call for networks of >3 institutions within a Widening country who wish to establish an RN). TIER2 will build upon the RNs’ existing advances through our excellent links (see section 1.2.2.7). TIER2 partners Charité and UOXF are core partners in the German and UK RNs, and we have secured Letters of Interest confirming intent to collaborate from RNs in Australia, Brazil, Finland, Germany, Italy, Norway, Slovakia, Sweden, Switzerland, and the UK.

- Research infrastructures (incl. EOSC): The European Open Science Cloud is an EC-funded initiative to develop a “Web of FAIR Data and Services” and “provide European researchers, innovators, companies and citizens with a federated and open multi-disciplinary environment where they can publish, find and reuse data, tools and services for research, innovation and educational purposes.” Increasingly, national infrastructural investments like the German national research data infrastructure (NFDI) are also being designed explicitly to link to the EOSC. Tools and practices are EOSC-native (our term) when they are created to be interoperable and embedded in the EOSC from the outset and linked via relevant EOSC components and community-led infrastructures (e.g., eInfrastructures like TIER2 partner OpenAIRE, ESFRIs like CESSDA or ELIXIR (where GESIS, UOXF and FLEMING are key national nodes), and clusters such as EOSC-Life and SSHOC, where many of our partners also take part). As key players in this landscape, we are uniquely placed to ensure our tools are EOSC-interoperable, and to bring the many EOSC activities on standards, training, and interoperability into contact with the RNs and other networks.

Networking and linking such initiatives has the potential to play an out-sized role in increasing reproducibility. The TIER2 consortium’s close existing connections to such networks means we are uniquely placed to facilitate and strengthen these connections. By aligning such efforts, TIER2 will greatly add to synergies and network-effects between them to boost capacity at national and international levels.

1.2.2.7 Relevant projects/initiatives and TIER2 links.

- BERD@NFDI (GESIS) Integrated analysis platform, Infrastructure. Initiative to build a platform for collecting, processing, analysing & preserving business, economic & related data, with a focus on unstructured (big) data such as video, image, audio, text or mobile data.

- Data4Impact (ARC; 2017-2019) Assessment, Infrastructure, Impact. Developed new indicators for assessing research & innovation performance based on a data-driven approach.

- EnTIRe (VUmc; 2017-2021) Platform for dissemination, Stakeholder hub, Research Integrity. Created the wiki-platform “Embassy of Good Science” to share knowledge on fostering Research Integrity.

- EOSC-Life (UOXF; 2019-2023) Data sharing, Data & metadata standards, Infrastructure. Coordinated by ELIXIR, this cluster brings together 13 ESFRI Research infrastructures in the Health & Food domain to create an open collaborative digital space for life science in the EOSC.

- EpistemicProgress in Humanities (VUmc; 2020-2023) Reproducibility, Replication. Studies reproducibility in the humanities, conducting theoretical, conceptual & empirical work.

- IntelComp (ARC, OpenAIRE; 2021-) Science, Technology & Innovation policy, Infrastructure. Develops big data/AI tools to model & assist Science Technology & Innovation (STI) policy making.

- NFDI4Datascience (GESIS; 2021-) Reproducibility, Research data lifecycle, Infrastructure. Initiative to support all steps of interdisciplinary research data lifecycles, including collecting/creating, processing, analysing, publishing, archiving, & reusing resources in Data Science & Artificial Intelligence.

- ON-MERRIT (KNOW; 2019-2022) Open Science, Responsible research & innovation, Equity. Investigated dynamics of equity & inclusion in open & responsible research, including reproducibility.

- OpenUP (KNOW, ARC; 2016-2019) Open Science, Open peer review, science communication. Built a framework for the review-disseminate-assess phases of the research life cycle to support & promote Open Science, focussing on Open Peer Review, innovative dissemination, & research impact measurement.

- PathOS (ARC, KNOW, OpenAIRE; 2022-2025) Open Science for science, economy & society, Reusability. New EC HE project (starts Sep 2022). Quantifies the Key Impact Pathways of Open Science relating to the research system & its interrelations with economic & societal actors.

- POIESIS (AU; 2022-2025) Research integrity, Open Science, Public trust in science. New EC HE project (starts Sep 2022). Studies the connections between public trust in Science, research integrity & open science practices.

- RTD/2020/SC/010 - Reproducibility (ARC, KNOW; 2021-2022) Reproducibility. Tender study to assess the reproducibility of research results in EU Framework Programmes for Research.

- SARI MOAP RTD/2019/SC/021 (ARC; 2020-202) Open Science, Impact, Open Access. Tender study, commissioned by DG RTD, on “Monitoring the Open Access Policy of Horizon 2020”.

- SARS (ARC; 2020-2022) Horizon Europe Key Impact Pathways & related indicators, Open Science. Study to support the monitoring & evaluation of the Framework Programme for research & innovation along Key Impact Pathways.

- SSHOC (GESIS; 2019-2022) Data sharing, Confidential data, EOSC. Coordinated by CESSDA, this cluster unites 51 organisations in developing the social sciences & humanities area of EOSC, encouraging secure environments for sharing & using sensitive/confidential data.

- SOPs4RI (AU, VUmc; 2019-2022) Research Integrity, RE, guideline development. Fostering research Integrity in institutions (Research performing institutions & research funders).

- VIRT2UE (VUmc; 2018-2021) Research Integrity. Developed a sustainable train-the-trainer programme for tailored ERI teaching across Europe, focusing on understanding & upholding the principles of the European Code of Conduct for Research Integrity.

- UKRN UKRI-funded project (UOXF; 2019-2024) Reproducibility, community engagement. Peer-led consortium of 18 UK universities & several partners to drive uptake of open research practices for reproducible research, connected to a network of RNs.

1.2.2.8 Other methodological building blocks

Interdisciplinarity

To bring reproducibility into its next phase, TIER2 convenes a unique constellation of experts in reproducibility issues in targeted research fields, processes of research culture change, capacity-building & knowledge infrastructures to propose a holistic, pragmatic methodology that critically addresses all these elements simultaneously. We include: domain experts for the target communities (computer science [ARC, KNOW], life science [UOXF, Charité, FLEMING], social science [GESIS, AU, VUmc]); experts in science and technology studies, research ethics and meta-research with profound understanding of political and social aspects of research culture reform (on e.g., skills, incentives, policies: KNOW, AU, VUmc); experts in socio-economic impact assessment (AU, KNOW), data science (ARC) and standards for digital objects across disciplines (UOXF); and core technical expertise in infrastructure and services, including established links to national and EC infraspheres (especially EOSC - OpenAIRE, UOXF, GESIS, ARC). As outlined in the methodology above, integration of the interdisciplinary expertise present in TIER2 will happen at the junctions between objectives, when moving from concept and design to implementation and assessment, as well as, in particular, in Objective 5 (recommend/reflect), where results will be integrated and synthesised by taking in perspectives across disciplines. In addition, TIER2 will engage in continuous co-creation dialogue with researchers in many different scientific fields, bringing even broader perspectives to bear in the design, implementation and assessment of our activities.

Gender dimension & EDI (equity, diversity, inclusion) factors

Gender and diversity considerations will be reflected in all stages of TIER2: in the research design, methods, analyses, interpretation, dissemination and creation of guidelines and recommendations. As outlined in the provisional schema of elements to map reproducibility impact pathways (Fig.

Open Science practices

TIER2 convenes experts in open and reproducible research practices. TIER2 partners are committed to Open Science, as proven by their involvement, leadership and expertise in Open Science over the past decade. TIER2 will build on this expertise and follow current best practices to ensure Open Science is embedded in all aspects of the project:

- Project proposal made public immediately upon submission*

- Policies for early results sharing, quality processes for full documentation of methodologies and tools (incl. standards for data and use of well-established ontologies) produced in WPs4/5, and for pre-registration of all pilots and interventions will be defined in the project handbook (D1.1) and monitored for adherence (Task1.3)

- Data Management Plan developed and regularly updated (min. M6, M18) according to Horizon Europe template, using the OpenAIRE Argos service

- OpenAIRE Guidelines used when publishing results to ensure all artefacts are linked for discovery; our tools will be based on open source technologies, and all code will be shared according to the FAIR4RS Principles

- All possible data will be shared according to FAIR principles – where some information needs protection or anonymization, e.g. surveys, interviews, the metadata will be made “as open as possible, as closed as necessary”, with qualitative data suitably anonymised to meet ethical and data protection requirements, and shared via qualitative data repositories (TIER2 partners GESIS are world-leaders in such techniques)

- Upstream engagement and participatory approaches will be used throughout TIER2, fostering open collaboration by actively enrolling users in co-design and evaluation processes for co-creation with major stakeholders

- All publications will be pre-printed and made Open Access (with preference for venues practicing open peer review where possible). For OA, we will at a minimum publish via Green OA (self-archiving), depositing in an OpenAIRE-compliant repository (e.g., Zenodo) at the time of submission (pre-prints) and updating with the Author Manuscript (post-print), or in Open Research Europe (ORE), which offers an open peer review process. A budget of 3000 EUR has been allocated per partner for publishing in APC gold OA journals.

Research Data Management

Through our expertise (see Table 19, section 3.3) on Research Data Management (RDM) and FAIRification of research objects, TIER2 will enable state-of-the-art practices to ensure effective management, preservation and sharing of all the research objects we create:

- FAIR research objects: TIER2 will create a wide range of methodologies, tools and data. Input data will be derived through a range of databases (mainly open), desk research and interviews. Once the indicators produced in WP3 have been validated by the experts from the case studies, TIER2 partners Athena RC, CWTS, CNRS will collect and package all components used in the development of an indicator and publish them in open repositories such as Zenodo, using well-established open licenses (e.g., CC, GNU GPL, MIT). Special attention will be given to output data that is derived from proprietary/commercial databases, where we will ensure the inclusion of only aggregate/statistical data. The same will apply to evidence from interviews and surveys which, in compliance with privacy policies, will be processed anonymously and made public only in aggregated form. Finally, any training material developed in WP5 will be published according to the emerging EOSC QA practices in the OpenAIRE Learning Portal.

- Standards: TIER2 will use existing standards such as OpenAIRE Guidelines, DataCite and DDI to publish the research objects, adapting them to cover specific needs of the development of indicators. The landscape report in WP1 will be produced using the PRISMA framework, while interviews/survey results will be fully anonymised and published using appropriate repositories. TIER2 will additionally ensure that all metadata complies with RISIS, the emerging EOSC Interoperability Framework.

- Reproducibility/Ethics: To ensure transparency, all algorithms and training sets developed in WP2/3 will be documented presenting assumptions, biases and limitations. Code will be published via GitHub-Zenodo integration and will be linked to publications and data.

- Curation & storage/preservation: Even though TIER2 has foreseen actions for coordinated data management and FAIR publishing with allocated effort in WP1 led by Know-Center, partners will use their own facilities for storing intermediate data (from databases, surveys, interviews) with well-established and agreed upon procedures. GitHub will be used for sharing code. The estimated cost for effective RDM is in the order of 80K, spread across WP activities.

2 Impact

This section outlines how TIER2 aims to contribute to the outcomes and impacts described in the EC work programme, the likely scale and significance of this contribution, and our measures to maximise these impacts.

2.1 Project’s pathways towards impact

As the world emerges from the COVID-19 pandemic, economic pressures (and hence increased scrutiny of research funding) and the exposed consequences of lack of societal trust in science are of urgent importance. Increased reproducibility of results in the European Research Area and beyond will reduce inefficiencies, avoid repetition, maximise return on investment, prevent mistakes, and speed innovation to bring trust, integrity and efficiency to the ERA and global Research & Innovation (R&I) system in general. To set the scale of potential savings, according to

TIER2 sets out an ambitious yet pragmatic programme of activities founded in four fundamental principles:

- Reproducibility is an opportunity, not a crisis;

- Epistemic diversity (variation across modes of knowledge production and socio-technical contexts) must be centred;

- Evidence must be systematised for informed policy across contexts;

- Action must be targeted holistically to boost capacity at all levels.

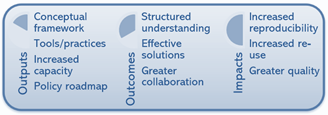

To enact these principles, in TIER2 stakeholder communities of researchers, publishers and funders will positively inform

- a new structured understanding of the nature of reproducibility (concrete interventions, drivers, barriers, gains and savings);

- creation and implementation of effective new solutions at all levels (from technical to policy) to increase the reproducibility of R&I results;

- empowered communities and networks whose new linkages and shared vision have powerful network effects that enable alignment and joint action on training, skills, infrastructure and more.

This will be done through innovative applied research that provides tangible and feasible policy options. By the conclusion of the TIER2 project, Key Outputs will have been achieved which will already be translating into outcomes (mid-term benefits achieved during, or max two years after, the project). In turn, these outcomes will result in longer-term impacts (3+ years after project end). In this section we describe these Key Outputs and follow the causal chains (pathways) whereby we expect them to result in maximal short-, mid- and long-term impact (see Table

| TIER2 Key Output | Lever(s) for change | Enables |
| --- | --- | --- |
| KO1. Conceptual framework | Evidence | Structured understanding, clarity of aims/means |
| Results: TIER2 will create a new framework for assessing reproducibility impact pathways across epistemic contexts, with synthesised findings on gains & savings possible via increased reproducibility. This will consolidate evidence on the state-of-the-art regarding evidence/uptake of reproducibility interventions, & provide an inventory of reproducibility tools/practices across contexts. This enhanced theoretical/evidential basis will enable shared understanding and orientation on best practices to increase reproducibility. | | |
| KO2. Innovative tools & practices | Tools | Concrete solutions (policy-, technical- & practice-based), empowerment, innovation |
| Results: After TIER2, eight ground-breaking new tools & piloted activities will have been successfully implemented. These concrete, innovative solutions at the levels of policy, technology & practice will empower our key stakeholders to take action. | | |
| KO3. Increased capacity | Skills/Communities | Collaboration, alignment, joint action, skills |
| Results: TIER2 will empower individuals and networks to boost capacity for the long-term, including via | | |
| The increased alignment and collaboration possible through these linkages, as well as increased capacity for action, will spur reproducibility across all actors. | | |
| KO4. Policy roadmap | Incentives/policy | Direction, momentum, sustainability, inclusivity |
| Results: TIER2 will finally result in a consolidated stakeholder roadmap on priorities for future reproducibility, including reform of reward & recognition structures. This will include practical policy & implementation recommendations/guidelines/briefs for research funders, institutions, policy-makers, publishers & researchers in Europe & beyond. By the end of the project 30 funders, institutions & networks will have endorsed the recommendations. This vision for future action will provide direction and momentum to unite stakeholders in sustainable efforts to address issues of reproducibility for the long-term. | | |

2.1.1 TIER2 contributions to outcomes

In turn, these Key Outputs will directly lead to a host of short- to medium-term Outcomes which significantly address the main concerns in reproducibility. Fuelled by the dissemination and exploitation measures described below (Sec 2.2), by the end of (or max 3 years after) our project, the contributions outlined below in Table

|

Expected Outcome 1: “Structured understanding of the underlying drivers, of concrete and effective interventions - funding, community-based, technical and policy - to increase reproducibility of the results of R&I; & of their benefits” |

|