|

Research Ideas and Outcomes :

Grant Proposal

|

|

Corresponding author: Christoph Steinbeck (christoph.steinbeck@uni-jena.de), Oliver Koepler (oliver.koepler@tib.eu)

Received: 25 Jun 2020 | Published: 26 Jun 2020

© 2020 Christoph Steinbeck, Oliver Koepler, Felix Bach, Sonja Herres-Pawlis, Nicole Jung, Johannes Liermann, Steffen Neumann, Matthias Razum, Carsten Baldauf, Frank Biedermann, Thomas Bocklitz, Franziska Boehm, Frank Broda, Paul Czodrowski, Thomas Engel, Martin Hicks, Stefan Kast, Carsten Kettner, Wolfram Koch, Giacomo Lanza, Andreas Link, Ricardo Mata, Wolfgang Nagel, Andrea Porzel, Nils Schlörer, Tobias Schulze, Hans-Georg Weinig, Wolfgang Wenzel, Ludger Wessjohann, Stefan Wulle

This is an open access article distributed under the terms of the Creative Commons Attribution License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Citation: Steinbeck C, Koepler O, Bach F, Herres-Pawlis S, Jung N, Liermann JC, Neumann S, Razum M, Baldauf C, Biedermann F, Bocklitz TW, Boehm F, Broda F, Czodrowski P, Engel T, Hicks MG, Kast SM, Kettner C, Koch W, Lanza G, Link A, Mata RA, Nagel WE, Porzel A, Schlörer N, Schulze T, Weinig H-G, Wenzel W, Wessjohann LA, Wulle S (2020) NFDI4Chem - Towards a National Research Data Infrastructure for Chemistry in Germany. Research Ideas and Outcomes 6: e55852. https://doi.org/10.3897/rio.6.e55852

|

|

Abstract

The vision of NFDI4Chem is the digitalisation of all key steps in chemical research to support scientists in their efforts to collect, store, process, analyse, disclose and re-use research data. Measures to promote Open Science and Research Data Management (RDM) in agreement with the FAIR data principles are fundamental aims of NFDI4Chem to serve the chemistry community with a holistic concept for access to research data. To this end, the overarching objective is the development and maintenance of a national research data infrastructure for the research domain of chemistry in Germany, and to enable innovative and easy to use services and novel scientific approaches based on re-use of research data. NFDI4Chem intends to represent all disciplines of chemistry in academia. We aim to collaborate closely with thematically related consortia. In the initial phase, NFDI4Chem focuses on data related to molecules and reactions including data for their experimental and theoretical characterisation.

This overarching goal is achieved by working towards a number of key objectives:

Key Objective 1: Establish a virtual environment of federated repositories for storing, disclosing, searching and re-using research data across distributed data sources. Connect existing data repositories and, based on a requirements analysis, establish domain-specific research data repositories for the national research community, and link them to international repositories.

Key Objective 2: Initiate international community processes to establish minimum information (MI) standards for data and machine-readable metadata as well as open data standards in key areas of chemistry. Identify and recommend open data standards in key areas of chemistry, in order to support the FAIR principles for research data. Finally, develop standards, if there is a lack.

Key Objective 3: Foster cultural and digital change towards Smart Laboratory Environments by promoting the use of digital tools in all stages of research and promote subsequent Research Data Management (RDM) at all levels of academia, beginning in undergraduate studies curricula.

Key Objective 4: Engage with the chemistry community in Germany through a wide range of measures to create awareness for and foster the adoption of FAIR data management. Initiate processes to integrate RDM and data science into curricula. Offer a wide range of training opportunities for researchers.

Key Objective 5: Explore synergies with other consortia and promote cross-cutting development within the NFDI.

Key Objective 6: Provide a legally reliable framework of policies and guidelines for FAIR and open RDM.

Keywords

Research Data Management, Databases, Chemistry, NFDI, NFDI4Chem

Consortium

Research domains or research methods addressed by the consortium, objectives

Chemistry is a core natural science influencing and supporting many other research areas such as medicine and health, biology, materials science, engineering, or energy. The long-term preservation and re-use of research data from chemistry therefore also fertilises other disciplines. Research Data Management (RDM) in chemistry is currently not organized systematically and separated solutions of individual institutions lead to a low visibility, accessibility and usability of research results. The lack of (interdisciplinary) use of research data not only causes high costs for society, but also delays national and international developments and thus innovation in central research areas. The added value that emanates from the preservation and study of scientific data in chemistry is particularly high, since the significance of the data is often immortal and older data can also be used for current investigations. In most cases it is even absolutely necessary to access older data, because experimental data or complex simulation data in particular can only be generated with high costs and great effort. A loss of the previously acquired data can be an irretrievable loss of knowledge. The vision of NFDI4Chem is the provision of a sustainable RDM infrastructure through the application of digitalisation principles to all key steps of research in chemistry. NFDI4Chem will support scientists in their efforts to collect, store, process, analyse, disclose and re-use research data in Chemistry. Measures to promote Open Science and RDM in agreement with the FAIR data principles are fundamental aspects of NFDI4Chem to serve the community with a holistic concept for access to research data. To this end, the overarching objective is the development and maintenance of a national research data infrastructure for the research domain of chemistry in Germany, and to enable innovative services and science based on research data. NFDI4Chem intends to represent all disciplines of chemistry in academia. We aim to collaborate closely with thematically related consortia. In the initial funding phase, NFDI4Chem focuses on molecules and data for their characterisation and reactions, both experimental and theoretical.

This overarching goal is achieved by working towards a number of key objectives:

Key Objective 1: Establish a virtual environment of federated repositories for storing, disclosing, searching and re-using research data across distributed data sources. Connect existing data repositories and, based on a requirements analysis, build one or multiple domain-specific research data repositories for the national research community, and link them to international repositories.

Key Objective 2: Initiate international community processes to establish minimum information (MI) standards for data and machine-readable metadata as well as open data standards in key areas of chemistry, where missing, in order to support the FAIR principles for research data.

Key Objective 3: Foster cultural and digital change towards Smart Laboratory Environments by promoting the use of digital tools in all stages of research and promote subsequent RDM at all levels of academia, beginning in undergraduate studies curricula.

Key Objective 4: Engage with the chemistry community in Germany through a wide range of measures to create awareness for, and foster the adoption of, FAIR data management. Initiate processes to integrate RDM and data science into curricula. Offer a wide range of training opportunities for researchers.

Key Objective 5: Explore synergies with other consortia and promote cross-cutting development within the NFDI.

Key Objective 6: Provide a legally reliable framework of policies and guidelines for FAIR RDM.

Composition of the consortium and its embedding in the community of interest

NFDI4Chem started as a grassroots initiative driven by experts in the field after the first position paper by the German Council for Scientific Information Infrastructures (RfII) to establish a national research data infrastructure for Germany. It has therefore already been maximally inclusive and consulted a wide range of user communities in chemistry in Germany. This broad community inclusion was achieved through working with our peers in a series of widely advertised workshops, a nation-wide user survey which will be discussed in detail below, as well as through working with our learned societies in Chemistry over the course of two years prior to submitting this proposal. The consortium consists of individuals and groups who shaped open and FAIR RDM in Germany in the past.

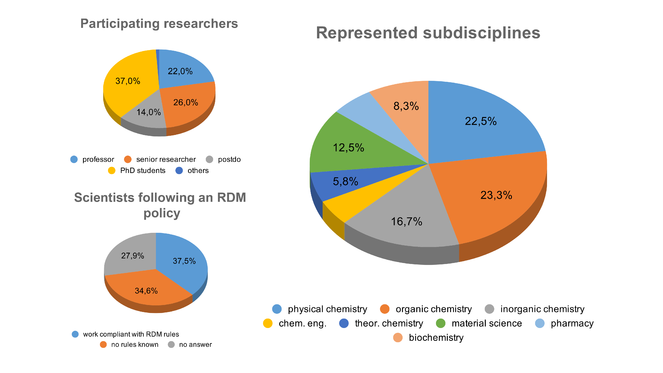

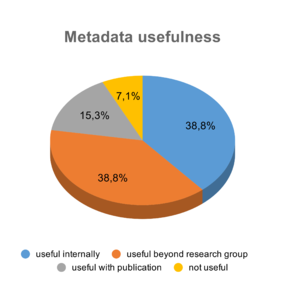

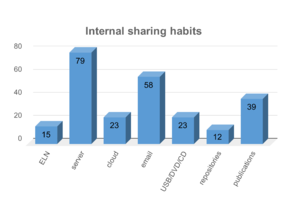

NFDI4Chem is supported by the German Chemical Society (GDCh), German Bunsen Society for Physical Chemistry (DBG) and German Pharmaceutical Society (DPhG) - representing approximately 40,000 members - to reach out to the chemistry community as a whole. All learned societies will continue to serve as participants and members of our advisory boards during the implementation phase to ensure a continued deep embedding in our community. Our assumptions, considerations and knowledge of user needs are based on an intensive exchange with the community. For this purpose, we have evaluated past and current surveys of which our latest is summarized in more detail as follows (

Our latest international survey was performed by the NFDI4Chem survey team, of which data from July through September 2019 were analysed. The survey was announced via the newsletter of the German Chemical Society (GDCh), emails to universities, Twitter, and a guest editorial in Angewandte Chemie (

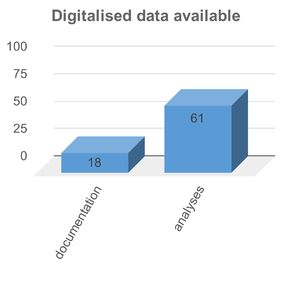

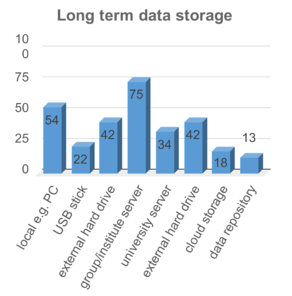

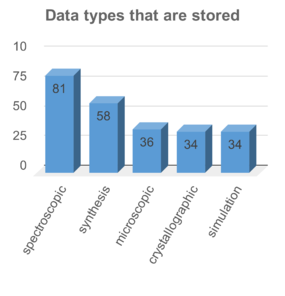

Most important insights with respect to the current digitalisation status, the data storage solutions, and responses on types of data are summarised in Fig.

Some desired improvements of the current RDM system were mentioned:

- general guidelines for data organisation and accepted standards for data formats and metadata annotation,

- solutions to the time-consuming manual extraction of metadata,

- need for software which simplifies the process of recording, analysing and saving data. Many participants indicated that they need an ELN capable of handling spectroscopic data. Generally, the participants wished that

- data should be searchable more easily, e.g. in a national or Europe-wide repository on spectroscopic parameters of molecules including raw data.

The introduction of data stewards was considered desirable due to various problems ranging from non-functioning infrastructure to the lack of compliance with research data management requirements within teams.

Based on this analysis, we have designed the work programme of NFDI4Chem to achieve a breakthrough in RDM in chemistry. Our six task areas (TA) each address one or more of the issues identified above. We base our work on integrating the existing lighthouses of RDM in our research domain, fill gaps both in the repository landscape and the underlying standards, develop and disseminate powerful tools to enable early digital data and metadata capture in the lab, and develop a strong training programme for chemists to understand and adopt the concepts developed in NFDI4Chem. Also, we dedicate a full task area to leveraging the synergies between the NFDI4Chem and the NFDI as a whole:

TA1 Management and Coordination will ensure the lean and efficient financial and organisational management of the project.

TA2 Smart Laboratory (Smart Lab) focuses on the implementation and adaptation of existing and development of so far missing IT components embedded in a flexible work environment, necessary to capture data early in the life cycle and to further manage, analyse and store associated information. TA2 enables a digital change in chemistry by supporting scientists with digital infrastructure of tools, services and repositories interoperable within the NFDI infrastructure.

TA3 Repositories enables the reliable storage, dissemination and archival of all relevant research data at each stage of the data lifecycle. This includes raw data in diverse formats as well as curated datasets. TA3 will adapt major existing chemistry repositories and databases to standards and interfaces, thus fostering interoperability and FAIRness as well as facilitating storing, disclosing, searching and re-using research data across distributed data sources.

TA4 Metadata, Data Standards and Publication Standards creates and maintains the specification and documentation of standards required for archival, publication and exchange of data and metadata on molecule characterisation and reactions, together with reference implementations and data validation. Ontologies are used where possible, and missing terminological artifacts are added.

TA5 Community Involvement and Training interfaces between community and infrastructure units: the community’s requirements are collected, analysed and channeled. Equally, dissemination and training on all levels are organised, starting in early undergraduate studies, and discipline-specific training material is developed. TA5 also fosters the awareness of the community for RDM and offers incentives for innovations.

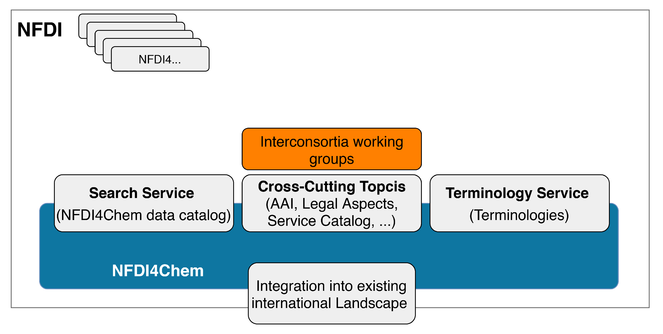

TA6 Synergies coordinates the activities of NFDI4Chem with the other NFDI consortia. TA6 is responsible for the coordination of the cross-cutting topics, including cross-domain metadata standards, semantic data annotation for cross-domain mapping of ontologies, provision of terminology services as well as legal aspects of FAIR RDM. Harmonisation will be sought by working with international bodies such as the Research Data Alliance (RDA) and the International Union of Pure and Applied Chemistry (IUPAC). TA6 develops an overarching search service and terminology service, that both will be linked to the NFDI.

To fulfil this ambitious work programme, we have assembled a hand-tailored consortium to perform the proposed work:

The resulting NFDI4Chem consortium consists of those institutions, groups, and individuals, who have been previously recognised for developing and supporting electronic infrastructure for chemistry in Germany and beyond. The consortium is led by Christoph Steinbeck, Professor for Cheminformatics at the University of Jena, with 20 years of experience in building open chemistry databases and cheminformatics infrastructure components. For nine years, Steinbeck led the chemistry area at the European Bioinformatics Institute (EMBL-EBI), which is tasked by its 27 member states to perform the open access RDM for the biosciences in Europe. Apart from building production quality open chemistry databases such as ChEBI and MetaboLights, Steinbeck led European e-infrastructure consortia to develop standards in biochemistry (COSMOS, FP7 EC312941) as well as for high-performance cloud computing environments in chemistry-based molecular biology (PhenoMeNal, H2020 EC654241). In this context, Steinbeck founded ELIXIR and goFAIR communities in his areas of working and is active in cheminformatics related working groups of the IUPAC. He leads the INF project of ChemBioSys CRC in Jena and is a PI in the data synopsis work area of the Microverse cluster of excellence in Jena.

Oliver Koepler is head of the Lab Linked Scientific Knowledge, part of the Digital Library and Data Science research group of Prof. Sören Auer at Technische Informationsbibliothek. He has nearly 15 years experience in building e-infrastructures and digital libraries for chemistry and research data, fostering open data and metadata standards. His work involved projects like the virtual library of chemistry - personalised information services for chemical research and industry (DFG), the VisInfo project for interactive, graphical retrieval processes for research data (Pakt für Forschung und Innovation, SAW-Programm), and development of digital preservation processes and document delivery service APIs within the Specialised Information Services for Pharmacy - FID Pharmazie (DFG). Oliver Koepler coordinated the TIB IT project to merge the TIB discovery service and the library catalogue, introducing a user-centred design approach. Current projects include the development of a research data and knowledge management system for engineering in the INF-project of the CRC 1153 - Tailored forming and the IUPAC Smiles+ project.

Nicole Jung works on method development in synthetic organic chemistry and is heading the projects of the Institute of Organic Chemistry at the KIT in chemoinformatics and laboratory digitalisation. These projects include the development of infrastructure in the form of an electronic lab notebook (Chemotion-ELN) and the establishment of the chemotion-repository (chemotion projects - DFG grant BR1750/34-1). The Chemotion-ELN is developed to support the digital work in chemistry laboratories and provides extensive functionalities and tools for the scientific work of chemists, in particular organic chemists. The chemotion repository is an Open Access repository designed to store, manage, and publish research data that is assigned to molecules, their properties and identification as well as reactions and experimental investigations. These infrastructure activities are supported by the programming of software tools to support digitalisation, and the initiation of data curation and data collection efforts in synthetic chemistry. N. Jung strongly supports the ideas of FAIR and Open Access data and was awarded as SPARC Europe Open Data champion.

Felix Bach works on generic RDM and big data analysis and is heading the RDM group at the Steinbuch Centre for Computing (SCC) at Karlsruhe Institute of Technology (KIT). He is also part of the joint management of the service team RDM@KIT. Felix Bach concentrates on big data management and analysis - especially in the analysis of large time series and has developed a generic concept and software framework for structural analysis of huge multivariate time series data. He has worked and is still involved in several large scale data management projects including bwDataArchive in which the first generic research data archive infrastructure for long-term bit preservation of hundreds of petabytes of data was built up for the universities of the state of Baden-Württemberg. Additional projects were focused on enabling better data flows between the heterogeneous RDM systems, to make archiving and publishing of data sets easier for scientists of all fields, integrate existing systems as part of the research data cycle and create and install RDM policies and a RDM support team involving all organisational units that play a role in RDM (library, computing centre, legal department, research funding etc.). Felix Bach is engaged in several projects and initiatives that foster open source research software and their sustainability e.g. by creating and installing software development policies for universities within the Helmholtz Community. He is also engaged in the RDA, RDA-DE and regional RDM forums to make research reproducible by advancing FAIR and Open Access research data.

Matthias Razum is heading the e-research department at FIZ Karlsruhe – Leibniz Institute for Information Infrastructure. Since 2004, he has been intensively involved in the design and implementation of solutions for RDM, virtual research environments and digital long-term archiving. He has conducted research on these topics in several large BMBF and EU projects (e.g. eSciDoc, SCAPE) and built infrastructures together with partners from science and humanities. Since 2017, he has been responsible for the operation of the generic research data repository RADAR, which is now used by eight universities and research institutions in Germany. Matthias Razum has participated in several working groups on RDM on a national and state level. He is a Steering Committee Member of the Preservation and Archival Special Interest Group and Advisory Board Member of the Generic Research Data Infrastructure (GeRDI). Between 2010 and 2017, he served as a Steering Committee Member of the International Conference on Open Repositories.

Steffen Neumann is head of Research Group Bioinformatics and Scientific Data at the IPB, a non-university research centre and a member of the Leibniz Society, dedicated to chemical, and biochemical plant research. He is an expert in the area of statistical mass spectrometry data analysis and metabolite identification. In this context he is pushing Open Data and Open Standards, leading to community-wide e-Infrastructures, and to exploit these for functional annotation through advanced metabolomics analyses. Dr. Neumann was WP lead for “Standards Development” in the FP7 COordination of Standards in MetabOlomicS (COSMOS) project and WP lead for “Tools, workflows, audit and data management” in the H2020 project PhenoMeNal on computational metabolomics, is PI in the BMBF funded MASH which is part of the german Network of Bioinformatics infrastructures (de.NBI). He is a member of the scientific advisory boards of french and australian metabolomics infrastructures, and associate editor for BMC Bioinformatics, MDPI Metabolites and Nature Scientific Data.

Sonja Herres-Pawlis heads the Chair of Bioinorganic Chemistry at RWTH Aachen and combines bioinorganic chemistry (mainly Tyrosinase models and entatic state models) with sustainable polymerisation catalysis. In both fields, she and her team combine advanced synthetic methods, extensive kinetics with a multitude of spectroscopic methods and density functional theory to derive a comprehensive mechanistic understanding. She was one of the founders of the Molecular Simulation Grid (MoSGrid), a BMBF-funded initiative between 2009 and 2012, which focused on the design and development of a user-friendly electronic environment for theoretical workflows in chemistry with implemented search engines for molecules (

Johannes Liermann is the head of the analytics core facility at the Institute of Organic Chemistry (JGU) and is specialized in NMR spectroscopy of small molecules. He has been cooperating with Nils Schlörer (UzK) in the context of the public domain NMR database nmrshiftdb2 which was originally conceived by Christoph Steinbeck. In cooperation with Nils Schlörer, J. Liermann is PI in two DFG projects for establishing an electronic assignment workflow to improve the quality of published NMR data (IDNMR project, LI2858/1) which strongly involves research data management aspects and offers important elements for an RDM infrastructure for molecular chemistry such as NMR data repositories. In addition, J. Liermann is elected board member of the Magnetic Resonance Discussion Group (FGMR) in the GDCh.

The consortium within the NFDI

Thematic Embedding

In preparation for this proposal, NFDI4Chem has extensively cooperated via joint workshops with FairMat, NFDI4Ing, NFDI4Cat, DAPHNE, PAHN-Pan, NFDI4Phys, NFDI4BioDiversity, NFDI4Agri, NFDI4Health, NFDI4Microbiota, and further neighbouring consortia. The cooperation has covered topics like interdisciplinary (meta)data standards, cross-domain search, legal aspects, and access to repositories. Networking with other consortia has been facilitated by the fact that many members of NFDI4Chem are also active in other consortia. These are: NFDI4Biodiversity, NFDI4Medicine, PAHN-PaN, NFDI4Culture, MaRDI, NFDI4MobilTech, NFDI4Agri, NFDI4MSE, FAIRMat, NFDI4Ing, NFDI4Phys, NFDI4Earth, GHGA, DAPHNE.

To emphasise the importance of cross-cutting topics in the NFDI as a whole, 21 NFDI consortia signed the Berlin Declaration (

We see the following topics as areas where NFDI4Chem would specifically invest effort to coordinate across consortia with the whole of the NFDI:

General principles of FAIR data management, international networking and awareness-raising: Key personnell of NFDI4Chem are active in a number of international efforts, such as GO FAIR, RDA interest groups, ELIXIR implementation networks, the European Open Science Cloud (EOSC) and more, which promote FAIR data in both the chemical as well as biomedical domain. We will aim to harmonize those existing efforts with FAIR data aspects across the whole of NFDI and engage in international networking with generic and specialized bodies promoting RDM and standards. As leaders and participants in collaborative research and excellence clusters in Germany, we will help to promote and implement the principles of FAIR data management in our local community, gather requirements and promote the adoption of the NFDI.

Repository technology and customisation toward individual domains: Repository technology will be at the heart of virtually any NFDI consortium’s implementation plan. To foster the interoperability of a potentially diverse portfolio of repository technologies, NFDI4Chem wants to promote standardisation of interfaces and technological platforms across the NFDI which can be customised to individual research domains and application scenarios.

Catalogue of all services developed by the NFDI: Following the model of the European Open Science Cloud (EOSC) and enabling easier integration into the same, we suggest the cross-cutting service catalogue enabling the keyword based discovery of services by users. Individual catalogues for exposure on individual consortium portals can then be generated on the fly from the central catalogue. The central catalogue will be designed to feed automatically, if desired, into the EOSC service catalogue.

Mechanisms and instruments for agreeing on international standards: Research data can only be re-used when annotated with sufficient metadata adhering to community agreed standards. New standards required for the NFDI cannot be negotiated at a national level but require extensive and long-term international consultations. The NFDI4Chem leadership has been engaged in such efforts for the past 10 years and we want to contribute to agreeing on common best practises for international development of standards within the NFDI.

Ontologies, terminology services: Once agreed, controlled vocabularies and ontologies will ideally be managed through lookup terminology services used across the entire NFDI.

Machine-readable data, data validation: Especially for cross-domain applications data needs to be unambiguously semantically annotated, both for humans and machines. Using discipline-specific terminologies we will describe research data in machine-readable form and adopt and develop research data semantics for properties, methods, units.

Efficient and harmonised materials and measures for outreach and training across NFDI: Established outreach instruments such as workshops, conferences, tutorials and training material, feedback mechanisms ranging from electronic surveys via issue trackers to social media elements will be explored throughout the NFDI. We further expect public policy, funders and learned societies to increase their demand for FAIR and open data management which will naturally increase the incentive for users to engage with these ideas. NFDI4Chem would like to promote concerted efforts with the NFDI towards those goals.

Legal aspects of research data management, data sharing: NFDI4Chem participants have expertise to address legal aspects of RDM and provide support for the NFDI community on e.g. legal questions about data ownership, legally compliant operation of the NFDI infrastructures, and the development of science-friendly guidelines for RDM. We assume that there will be similar legal issues in other consortia at a higher level and propose a joint approach to those fundamental issues.

Unified and interoperable governance models across NFDI: NFDI4Chem leadership and participants have extensive experience in building international research data infrastructures in the biomolecular and chemical domain and beyond and will happily share this knowledge during discussion across NFDI domains.

International networking

The community-wide negotiation of standards in chemistry, covering both data and metadata standards, require global efforts and cannot be achieved on a national level. Here, NFDI4Chem leadership and participants are already been entertaining a wide range of activities and have extensive experience in driving global standardisation efforts in cooperation with international bodies for standardisation in chemistry and beyond. In independent standardisation efforts, we were instrumental in establishing Chemical Markup Language (CML) as one of the truly open and versatile data formats in chemistry (

NFDI4Chem members are furthermore active in institutionalized international efforts such as:

The International Union of Pure and Applied Chemistry (IUPAC) has traditionally played a significant role in creating and maintaining standards in chemistry. In chemical information, the most widely used open standards such as the International Chemical Identifier (InChI) and its variants as well as the spectroscopic standards JCAMP are maintained and developed by IUPAC divisions. NFDI4Chem key personnel are involved in the development of those standards. Speaker Christoph Steinbeck is a member of the InChI subcommittee of the IUPAC. Participant Thomas Engel is the delegate of the German Chemical Society (GDCh) to the IUPAC Division VIII committee. Oliver Koepler is engaged in the IUPAC SMILES+ specification project. Participant Patrick Théato is secretary of the subcommittee on polymer education (IUPAC Division IV) and member of the subcommittee on polymer terminology (IUPAC Division IV). All members will link and push the outcomes of the NFDI4Chem TA4 to an international level, for a broader discussion, and finally to influence world-wide recommendations of the organisations. As a former member of the CPEP committee on printed and electronic publications, Christoph Steinbeck contributed to the maintenance of the JCAMP standard. He still maintains the JCAMP reference implementation hosted on SourceForge.

European Open Science Cloud (EOSC): NFDI4Chem aims to seamlessly integrate all services developed into the service catalogue of the European Open Science Cloud (EOSC). Applicants of this proposal are active contributors to the EOSC. The Steinbuch Centre for Computing (SCC) at Karlsruhe Institute of Technology (KIT) participates in the EOSC related projects EOSC-hub, EOSC-Secretariat, EOSC-synergy, EOSC-Pillar as well as in the AAI related work in the GEANT4-3 project. The Leibniz Institute of Plant Biochemistry was partner in the PhenoMeNal project, led by NFDI4Chem speaker Christoph Steinbeck, that build an infrastructure for data processing and analysis for medical metabolomics. All applicants will use their existing expertise to ensure interoperability of the NFDI4Chem services with the functionalities of the forthcoming EOSC. Within EOSC, the Molecular Open Science Enabled Cloud Services project (MOSEX) has expressed its interest to cooperate on all key objectives with NFDI4Chem (see LoS).

Research Data Alliance (RDA): Five key members of NFDI4Chem are engaged in the Chemistry Research Data Interest Group (CRDIG) of the RDA (

GO FAIR: NFDI4Chem Speaker Christoph Steinbeck and co-applicant Steffen Neumann were instrumental in instantiating the GO FAIR implementation network for metabolomics, one of the first implementation network in GO FAIR at all. Metabolomics as an interdisciplinary field has a strong analytical chemistry and cheminformatics component. Members of NFDI4Chem also have strong links to GO FAIR Chemistry Implementation Network (ChIN) (

ELIXIR is the pan-European infrastructure for biological information (

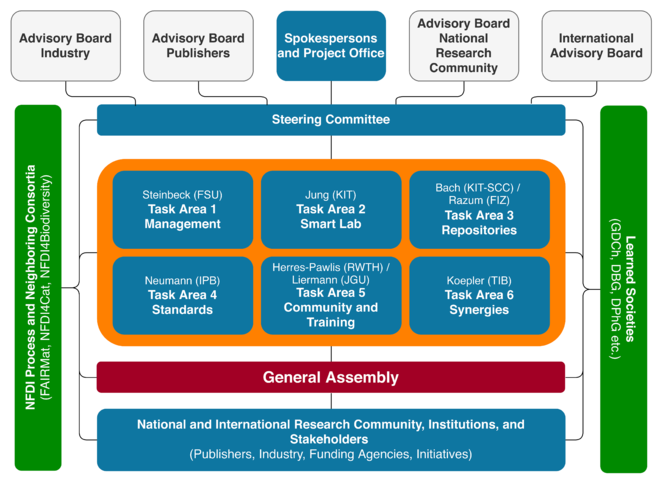

Organisational structure and viability

We have designed the organisational structure of NFDI4Chem to ensure that the work programme can be pursued in an efficient and agile manner, that the decision making process within NFDI4Chem is legally sound and transparent and that the community and our stakeholders are closely attached to our operation. In the following, we describe the various components of our organisational structure as shown in Fig.

The General Assembly (GA) is the central decision making body of the project. The GA consists of all co-applicants and participants (represented by one delegate from each partner), as well as 5 members of the community elected by the GA. The GA will decide on all issues of fundamental importance for the whole project. The GA will be held at the annual NFDI4Chem project meetings or online if urgent matters require that. These meetings will be used for strategic planning, presentation of scientific results, and discussion of major management issues. The GA decides when overall agreement is required in the matters of budget and consortium management.

The Steering Committee (SC) is the central body responsible for monitoring and evaluating project progress and supervising project objectives, and which takes the necessary decisions in scientific coordination and administration of the project. Based on the contributions from task areas, the SC prepares periodic reports and the final report. The SC consists of the two speakers, the project manager and the task area leads. The SC is headed by project speaker Christoph Steinbeck.

NFDI4Chem will establish a focused set of Advisory Boards, which are consulted regularly to ensure that the consortium is on track and develops and delivers services which are:

- aligned with the mission of the NFDI in general and

- address the needs of the chemistry community.

Advisory Board Industry comprises companies developing data producing equipment, data management systems and data analysis solutions. They will provide insights on data formats and software, and aim at including the NFDI4Chem recommendations and processes into their software developments.

Advisory Board Publishers will provide insights on research data associated with scientific publications. They will aim at including the NFDI4Chem recommendations and processes into their guidelines for authors, editors and reviewers. The manuscript submission systems should support compliance with the guidelines.

Advisory Board National Research Community represent researchers and organisations performing research in Germany. We aim to cover the subject areas reflected in the list of DFG review boards (DFG Fachkollegien) mentioned in the section General Information above.

International Advisory Board complements the advisory boards described above, with a special focus on international organisations and individuals which are lighthouses of collaboration and alignment of efforts to collect, store, process, analyse, disclose and re-use research data.

Decision Making: The distributed nature of the NFDI4Chem project necessitates a decentralised administration of execution control for effective decision making. The operational level comprises all the project partners who are responsible for the execution of the strategic work plan detailed in the task areas (TA). The TA leaders will be responsible for keeping track of the measures with the listed deliverables. Any deviations will be brought to the attention of the project office (PO) and discussed latest at the next steering committee (SC) meeting. The TA leaders oversee the budget, technical aspects including quality checks and communications with the project office when required. This process is supported by the detailed project management plan maintained at the FSU. The main scientific controlling and decision making body in the project is the steering committee (SC). The SC is responsible for all decisions regarding project management, distribution, monitoring and re-organisation of specific tasks if necessary and for all cases which do not require the voting of the GA. The SC convenes by electronic communication on a regular basis and on-demand, as organized by the project office. The GA will be the highest decision making body in the project and will be consulted for strategic planning, major management topics and other fundamental issues. The GA will take in particular decisions if overall consensus is required in the matters of inclusion of a new partner, exclusion of an existing partner, major changes in budget or the project strategy and in other unforeseen situations that need discussion or decision making. Decisions of the GA that need voting require a simple majority of the project partners based on the principle “one partner – one vote”. To have a quorum, 75% of the partners have to be present at the GA physically or present through teleconference facilities at the time of decision making and voting. In a stalemate situation, the Project Coordinator will have the deciding vote.

Operating model

Our operating model follows the unanimous agreement amongst consortia during the governance workshop in Bonn on August 30, 2019, where we were strongly advocating for an overarching NFDI e.V. which consortia can join as dependent legal entities. Until agreement has been reached about the legal model for the NFDI as a whole, we will operate NFDI4Chem under a normal academic consortium model. This will be based on a consortium agreement (CA) which will clarify the operational dependencies between co-applicants and participants, the mode of operation as described above and in TA1, and allow for the transfer of funds from the applicant institution to the co-applicants and participants.

Special attention will be given to the financial compensation model for participants, where no exchange of goods and services in a commercial sense is currently foreseen. Since all NFDI consortia have to solve these issues, we already agreed to work together to design a CA and contracts that are compliant with the German law. As a strong advocate of the Berlin Declaration, NFDI4Chem looks forward to close collaboration with other NFDI consortia and the NFDI directorate on this topic.

Research Data Management Strategy

Research Data Management in Chemistry - a status quo

Chemistry consists of many subdisciplines with a large variety of methods and data. Chemical research dealing with molecules, their reactions and properties can be described by experimental procedures, observations, theoretical models and their computation, and by the resulting data. Data are further processed, analysed, evaluated, and in many cases assigned to a molecule as proof of a hypothesis. The large diversity of experimental and theoretical methods (e.g. NMR, IR, UV/VIS, MS, HPLC, Electron Microscopy, bioactivity assays, quantum and force-field calculations, cheminformatics approaches) results in a plethora of different data types and formats, most of them being proprietary. These data often consist of spectroscopy and spectrometry results (such as nuclear magnetic resonance (NMR), mass (MS), IR/Raman, UV). Other data come from elemental analysis (EA), X-ray, electron paramagnetic resonance (EPR), cyclic voltammetry (CV), gel permeation chromatography (GPC) and differential scanning calorimetry (DSC) measurements or thermogravimetric (TGA) or dynamic mechanical thermal (DMTA) analysis, only to mention a few. Proprietary data formats can consist of a single, binary file of unknown structure, to directories populated with several files, some in ASCII text or (rarely) XML. Efforts in the life-sciences towards open MS formats resulted in mzML (

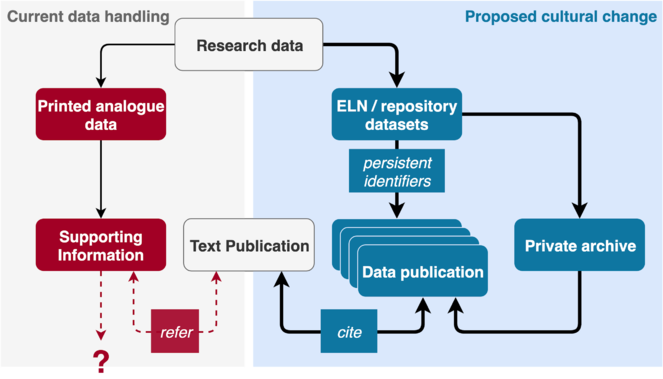

While in theoretical chemistry or computational chemistry research data workflows are seamlessly digital, the provenance and context of data produced in experimental laboratory workflows are mostly documented in hand-written lab journals. In our survey only 18% of researchers declared to use an electronic lab journal, with some of them considering text processors such as MS Word to be ELNs. When investigating a research problem, multiple devices generate multiple data sets of various data formats, stored in different locations within an institution. Keeping track of all these data trails is an enormous challenge for researchers. Efficient systems for the curation of user-provided descriptive and contextual data and connection to related device-captured experimental and analytical data are missing. Although many devices provide experimental data in a digital format, accessibility and reuse of data is hampered by missing standards for data exchange formats, as can be seen from the wide-spread use of proprietary data formats in device-generated experimental output. As a result most descriptive metadata about these research data are still non-digital at the beginning. This coexistence of analogue metadata and data next to digital research data causes considerable problems later on, when it comes to the publication of research results and the corresponding research data.

When a researcher reaches the point of preparing a publication or even data publication based on formerly generated data, the deficits from the previously described points become visible. For instrument data, insufficient ways of presentation are common in chemistry journals. So far, research data are included in very condensed form in the publications, e.g. in the experimental section, in tables or images. Often additional provenance and context information about the research data and representations of the data can be found in the supplements to an article publication. Published as PDF files or bitmap images, these supplements may contain more detailed tables, peak lists or images of spectra, none of which can be re-analysed in their presented form. These existing conventions are in stark contrast to RDM in accordance with the FAIR principles. As our survey described above revealed, so far only 16% of researchers published their research data in data repositories in addition to an article publication at least once. This is certainly also caused by the fact that data repositories for annotated raw data exist only for a few domains in chemistry. More often, there are curated databases in which the derived data from the raw data sets can be found, usually combined with an assignment to a molecule. Those are often curated by human domain experts from the primary literature in a painstaking process. In the end, deposition of data and metadata is currently a rather complex and time-consuming process resulting in low acceptance by researchers.

Nevertheless, data is often exchanged among scientists. This is mostly accomplished through direct contacts. Only 15% of researchers have reused original data files of data that was obtained in their research field. Our survey reveals that only 40% of researchers have defined workflows and curation standards. In most cases curation and final storage procedure is chosen by the individual researcher or by rules of the research group.

Examples of successful RDM exists only in few subdisciplines such as crystallography, where the CIF file format enabled the community for 30 years to share data and embedded this data sharing into the publication process (

Databases and Data Repositories: Chemistry has a long history in indexing chemical data and creating searchable data collections. The extraction and indexing of chemical data found in literature by Beilstein/Gmelin handbooks or by CAS, resulting in the digital databases SciFinder and Reaxys, are the most prominent examples. In addition to these outstanding commercial databases, there are a number of databases with a narrower focus on specific data about molecules, reflecting the importance of molecule characterisation data. Rather than a full assessment of the global landscape of chemistry databases, we describe those relevant to the NFDI4Chem strategy described below. Widely used molecule-centred databases like ChemSpider (

However, besides being partly proprietary, these databases lack the availability of the original research data and comprehensive metadata about it, which is often not available from the literature. This level of granularity can be achieved by data repositories where the actual research data file can be deposited with their corresponding metadata providing provenance and context. The Crystal Structure Database (CSD) of the Cambridge Crystallographic Data Center (CCDC) is an example of such a curated data repository, and is probably the best known repository for research data in chemistry. The publication of crystal structures via the CSD using the CIF standard is accepted as a standard procedure and well embedded in the article publication process in chemistry. A comparable database is ICSD in the field of inorganic crystallography, which cooperates closely with CSD. These repositories may serve therefore as kind of a best practice model in terms of user attraction.

With a national focus, Germany hosts the spectroscopic databases nmrshiftdb2 (

A true data repository with a focus on primary research data in chemistry is the Chemotion repository (

Data Flow into repositories: A key insight from our analysis described in section 2 is that data handling is currently not yet fully digital and that manual steps are often necessary, especially at the beginning of the process of data generation in the lab. This slows down RDM workflows and hinders the publication of FAIR data in repositories. Solutions to this problem have been addressed by the chemical and pharmaceutical industry already in the 1980ies and software as well as cheminformatic processes were developed to foster digitalisation strategies. Those solutions, however, did not find their way into academic data management. Work environments such as Laboratory and Information Management Systems (LIMS) and ELNs in combination with chemistry specific software and identifiers demonstrate how successful digitalisation of work processes can be achieved. Data transfer from devices to a digital management area solved by LIMS allows for control of the data flow, data tracking, and data transfer (

The availability of software to analyse and process data is an important factor to achieve re-usability of research data. Here, the heterogeneous situation in the field is reflected by many individual software packages and tools. Important components are open source tools, enabling the creation of FAIR data are chemoinformatic libraries such as RDKit, CDK and OpenBabel which are used for diverse RDM applications in chemistry. Specific software in chemistry is also needed for spectroscopic data (e.g. NMR, MS, IR and UV/Vis), playing a pivotal role with respect to the identification and verification of research results. The current practice is to analyse those data either manually from printed spectra or using stand-alone software. To enable FAIR RDM, the visualisation and processing of spectroscopic data, but also its annotation (assignment) to the research data provenance and context, is important. While web-based solutions to be used embedded to ELNs or repositories, are necessary, only a few open source, web-based visualisation tools are available (

The use of standards in chemistry is characterised by pragmatic mix of open standards and what one could call publicly known (meta)data formats, where open standards are characterised by an open, public and inclusive development process, whereas publicly known formats have been developed by companies, documented somewhere, can be re-used, but the public has no or little influence on the development and if often not notified of changes, in the case of the commonly used SMILES format. A full account of available standards is outside of the scope of this section. Standards for structure representation, for example, are InChI and SMILES which was reworked by the Blue Obelisk community (

Experimental data is represented by a plethora of data formats where open standards exist only for a fraction of the methods. Documentation for proprietary data formats are often not available. The most prominent example for the long term usage of an open data format is the Crystallographic Information File (CIF) (

In conclusion, the consistent, long-term and safe storage in repositories, with a few exceptions like silico chemistry and crystallography, is still rather uncommon. Standardised and normalised data, and descriptive metadata play only a minor role in the local management and storage of data. Journals do not require authors to deposit primary research data in well annotated form in community accepted repositories. NFDI4Chem aims to fundamentally change this situation through a concerted effort combining technical and cultural change with outreach to publishers and community training.

The strategy of the NFDI4Chem consortium

NFDI4Chem will support the scientists in all steps of the research data management data life cycle. The measures will address the seamless integration of identified key components into NFDI4Chem infrastructure to optimise and facilitate data workflow and capture of metadata. These efforts will be supplemented by the development of standards and policies, resulting in a substantial increase of data quality in the RDM process. Finally, comprehensive community outreach activities will ensure that the initiated digital change will be transformed into an increased and sustainable awareness of RDM in the minds of researchers, thus fostering a cultural change.

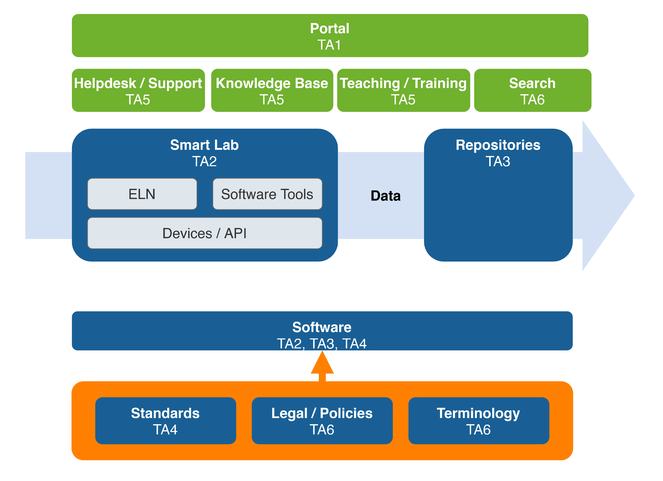

The workflows to be supported start with data acquisition in the lab with data captured at the workbench, by analytical instrumentation or data that arise by calculation or simulation. The data is captured, managed and analysed via virtual research environments and can be collected, shared and disclosed by repositories and curated databases. At all levels of this workflow, we selected existing infrastructures, software, and services which will be integrated with NFDI4Chem. Single missing key elements were identified and their development will complement the envisaged infrastructure (Fig.

NFDI4Chem will not only facilitate RDM but will create added values for scientists to accelerate the acceptance of concepts and services by the community. A very important aspect is to minimise additional efforts by data management and to allow a seamless data transfer throughout all components of the NFDI4Chem. By this, scientists will be faster to collect FAIR data, standards can be introduced and kept easier and errors due to manual rework will be avoided. Data and metadata should be transferred without manual interaction from the devices generating data to the virtual work environments to the discipline-specific or generic repositories. All components of the infrastructure that are necessary for this fully digital workflow should be available to every scientist. Therefore, different measures such as the installation on site or a centralised operation, have to be realised depending on the type of component. For the operation of services and in particular with respect to the hosting of repositories, the NFDI4Chem strategy relies on the distribution of responsibilities to different partners within the consortium. Among those, three infrastructure centres (TIB, KIT-SCC, and FIZ) offer, due to their experience and expertise with chemistry research, the basic infrastructure support. Additional infrastructure centres (e.g., ITC Aachen, ZIH Dresden) further support the strategy directly by agreeing to host selected national repositories operated by participants (UzK, UFZ). NFDI4Chem establishes a strategy based on infrastructure components, software, and services that are operating on a national level but also international solutions will be included to the overall strategy by different measures. The concept of NFDI4Chem offers infrastructure for chemists covering all subdisciplines. To ensure a broad coverage, experts of the subdisciplines organic chemistry, inorganic chemistry, physical chemistry, polymer chemistry, biochemistry, pharmaceutical chemistry and computational chemistry are involved to build NFDI4Chem. In the first funding phase, the components of NFDI4Chem have a strong focus on those aspects of chemical research that have the greatest need for catching up in terms of digitisation. While the areas in chemistry, where theoretical research is predominating the daily work, are already well-positioned at least in terms of digital data availability, the support of experimentally driven subdisciplines includes fundamental digitalisation instruments. The proposed concept is a strongly demand- and user-driven approach, including all components and measures that were identified to be crucial for FAIR data management and those to be essential to allow RDM customised to the needs of the subdisciplines. The latter aspect is the most important prerequisite for the acceptance of the NFDI4Chem by the community. The requirements were identified in the past two years leading to this proposal by the members of the consortium, feedback of additional experts in the field and by the outcome of the requirement analysis from different surveys summarised above. Regular surveys covering the whole community in addition to a constant contact to the users of the NFDI4Chem infrastructure including road shows and workshops in universities are key instruments to constantly improve the service portfolio. The direct communication with scientists via hands-on trainings and live demos ensures the effective knowledge transfer and the awareness of new services and functions of the NFDI4Chem infrastructure. Both activities, consulting and training the community, foster the cultural change in chemistry.

Metadata standards

The FAIR principles developed by the international FORCE11 initiative (

To guide researchers in reporting metadata, NFDI4Chem will support international processes for the creation of Minimum Information standards in selected areas of chemistry. The accepted consensus for a specification of Minimum Information about a Chemical Investigation (MIChI) will be reached and discussed with the stakeholders (researchers, infrastructures and journals) and will guide the parameterisation of ISA-Tab descriptions of studies.

Extensions developed in the context of metabolomics capture the analytical information such as chemical shift and multiplicity in NMR-based experiments, and m/z, retention index, fragmentation and charge for mass spectrometry. Both SMILES or an InChI and database references are used for reporting metabolites.

NFDI4Chem will embrace these existing data standards and extend them based on requirements in the chemistry subdisciplines. Together with device vendors, publishers and our infrastructure developments these will become an integral part of the digital workflow in chemistry research.

Implementation of the FAIR principles and data quality assurance

The FAIR principles promote data to be Findable, Accessible, Interoperable and Re-usable. FAIR data management is also a necessary condition for exchange with other disciplines - a key aspect of the NFDI as a whole. The dissemination and application of FAIR RDM services and repositories in chemistry is still at the beginning. Reasons are manifold; a lack of data and metadata standards, insufficient data quality, low data coverage, lack of efficient digital workflows, lack of search functionalities or scientific acknowledgement for data publications resulting in low acceptance of RDM services. To address the technical challenges and to foster acceptance by scientists, data acquisition in FAIR and open data formats need to be established continuously over the research data lifecycle, beginning at the earliest point in time in the research process at the lab bench and minimising efforts that arise with RDM.

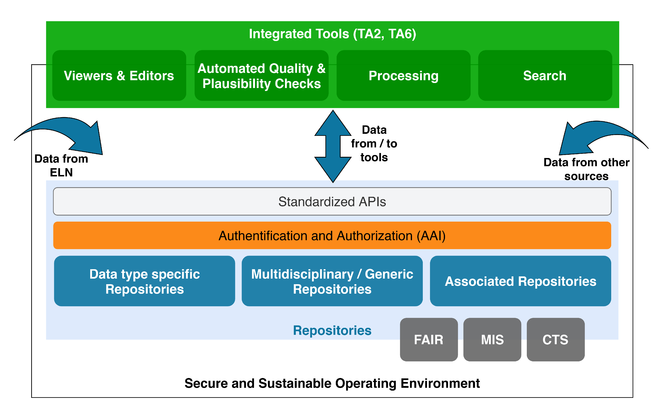

In NFDI4Chem, we will work towards a fully FAIR ecosystem of chemical RDM by following a now well-accepted strategy (

To be Findable, data in all of our resources will have rich machine-readable (meta)data, linked with domain-specific and cross-domain vocabularies. Our ELN and device integration strategy outlined in TA2 will foster the aggregation of rich metadata early in the data generation process. Repositories within NFDI4Chem will register their datasets at DOI services like DataCite assigning globally unique and eternally persistent identifiers (DOIs) to enable indexing in a data catalog to be build for the Search Service in TA6.

To be Accessible, all data will be retrievable by their identifier using HTTP(S) as standardised communications protocol, which is open, free and universally implementable. Repositories and services will provide standardised APIs (see TA3). NFDI4Chem components, all being Open Access, will be using a NFDI-AAI service which allows authentication and authorisation procedures, where necessary (see TA6).

To be Interoperable, our (meta)data will use a formal, accessible, shared, and broadly applicable language for knowledge representation (see TA4), which uses vocabularies that follow FAIR principles and includes qualified references to other (meta)data (see TA6). Like the minimum information (MI) standards in biology, the chemistry community will develop MI metadata standards to semantically describe experiments and simulations, molecule characterisations and others. At the same time, NFDI4Chem will promote open data formats.

To be Re-usable, our (meta)data will have accurate and relevant attributes, be released with a clear and accessible data usage license supported by legal policies and guidelines defined in TA6, their provenance, and meet domain-relevant community standards. This information is verified in the data curation process (see TA4). We will enable the reuse of data to support domain cross-linking, big-data analysis and future artificial intelligence (AI) methods.

Agreeing on data and metadata standards in a particular research domain is an international effort where NFDI4Chem input will advance the field. Thus, the effort will be pursued through collaboration with scientists and standardisation bodies such as the IUPAC, the Research Data Alliance (RDA) or the GO FAIR initiative. The strong links from NFDI4Chem to those international organisations are outlined in section 2.4 above.

Quality assurance of data in NFDI4Chem is supported by measures in TA2, TA3 and TA4. The definition of MI standards allows for automated checks at all steps of the data life cycle.

At the time of data curation, automatic plausibility checks and data validations can support the reviewers in the (peer) review process. Data in repositories and databases will be curated by a mix of automatic and manual quality checks. Last but not least, the re-use of data enables a quality check by the community, which will be the ultimate corrective measure for data quality in NFDI4Chem repositories. Details of these measures will be discussed in the individual task areas that address them.

Services provided by the consortium

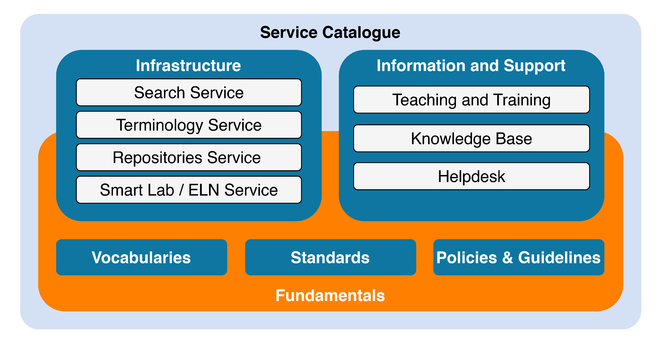

NFDI4Chem will develop and implement a coherent service catalog (see Fig.

The virtual environment of federated repositories for storing, disclosing, searching and re-using research data across distributed data sources, complemented by a Search Service, Terminology Service and ELN as a service will be the core services of NFDI4Chem, enabling the implementation of further concepts like the Smart Lab or the NFDI4Chem portal.

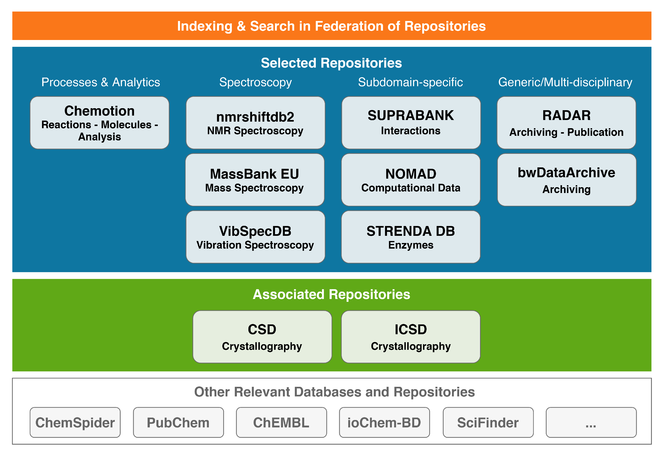

Databases and repositories that cover the relevant data types used by the NFDI4Chem community will be included into the envisioned federation of repositories shown in Fig.

MassBank EU, hosted at UFZ (Leipzig) is the first public repository of mass spectral data for sharing them among the scientific research community (

To complement our spectroscopic portfolio we have identified VibSpecDB, a currently internally utilised database for vibrational spectra (Raman and IR spectra) that is hosted at FSU (Jena). In the course of this project, VibSpecDB will be integrated into the NFDI4Chem spectroscopy concept and converted to full open access. Currently, the database itself features APIs for programming languages like Python or R, but no GUI based import routines, web-interface or viewers. These functionalities will be developed in the course of NFDI4Chem and a license as well as access-right management system will be added to the database forming a repository for vibrational spectra.

The Chemotion Repository covers research data that is assigned to molecules, their properties and identification as well as reactions and experimental investigations and is hosted at KIT (Karlsruhe). Scientists are supported in their efforts to handle data in FAIR manner: The data is stored along with molecule and reaction specific identifiers and DOI-assigned data files are given with distinct ontology-supported metadata (

Suprabank, also hosted at KIT (Karlsruhe), is a curated database that provides data on intermolecular interactions of molecular systems which are not available in other repositories or databases. SupraBank is mainly aimed at supramolecular and physical chemists that deal with binding, assembly and interaction phenomena. Molecular properties are retrieved from PubChem, allowing the correlation of intermolecular interactions parameters to molecular properties of the interacting components. All molecules, solvents, and additives are searchable by their chemical identifiers. At present, the Suprabank stores >1100 curated data sets of intermolecular interaction parameters.

StrendaDB is a repository operated at BI (Frankfurt) for enzymology data providing the means to ensure that data sets are complete and valid before scientists submit them as part of a publication. Data entered in the STRENDADB are automatically checked, allowing users to receive notifications for necessary but missing information. Currently, more than 50 international biochemistry journals already included the STRENDA Guidelines in their Instructions for Authors.

The NOMAD repository (hosted at FHI, Berlin) enables the confirmatory analysis of calculated materials data, their reuse, and repurposing. It facilitates research groups to share and exchange their results, inside a single group or among two or more.

The repositories RADAR (FIZ Karlsruhe) and bwDataArchive (KIT, Karlsruhe) provide (certified) data archiving services and serve as catch-all repositories.

For the deposition of crystallographic data, NFDI4Chem will collaborate with the CSD (CCDC, Cambridge) for organic structures and for the ICSD (FIZ Karlsruhe) for inorganic structures. Both repositories, although being commercial, are established in the community and serve as a standard host for crystallographic data. The interoperability of both infrastructures with NFDI4Chem will be established during the funding period (see LoS).

In addition to the described infrastructure components in Germany, other international repositories and databases such as ChemSpider (molecule and physicochemical data), PubChem (molecule data, vendor and toxicology information), and ChEMBL (bioactivity data) will be connected.

The Repository Services are complemented by a Search Service and a Terminology Service (see TA6). The Search Service facilitates the access to all the resources available in the NFDI4Chem core and associated repositories. It will be built on a metadata catalog and provide a semantically harmonized access to the federated repositories based on the standardisation efforts for chemistry disciplines. Chemistry-specific search options include molecular structure and properties search. This approach will be combined with interconsortia harmonisations measures allowing not only cross-repository, but also cross-domain data discovery. The NFDI4Chem portal realises the concept of a single point of entry to NFDI4Chem services and further information. The Search Service, the knowledge base or the helpdesk will be provided via the portal. The Terminology Service will provide machine-readable and human-readable descriptions of research data, thus enabling researchers and components of NFDI4Chem and NFDI to access, curate and update vocabularies for chemistry and related domains. Terminologies will be developed by community driven workshops and will be continuously extended to cover the needs of all relevant subdisciplines. In addition to the Repository Services, the components of the NFDI4Chem as well as the services, in particular the Smart Lab will integrate the Terminology Service to ensure the semantic description of the data with standardised vocabularies.

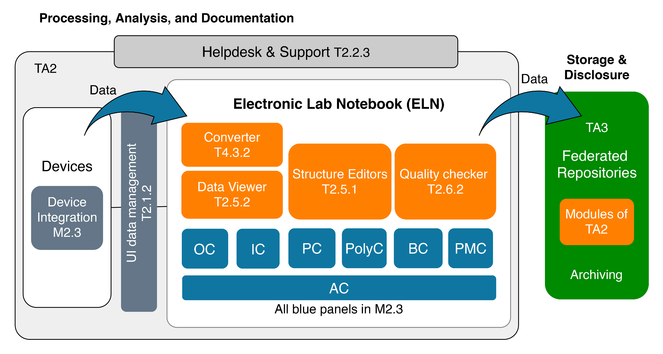

The Smart Lab, as part of the infrastructure, fosters data workflows from devices towards repositories in the federation. It integrates software components that are necessary requirements to build the NFDI4Chem services as well as an ELN that is provided as open source software component and as a service. Crucial building blocks for almost all NFDI4Chem services, but of special importance for the Smart Lab, are software and tools. The drawing, processing and visualisation of chemical structures is an important part of a chemical data infrastructure that enables comprehensive search, identification, quality assurance and curation of datasets in chemistry. Widely used software libraries such as Chemistry Development Kit (CDK), RDKit, OpenBabel support these functionalities. CDK (

The ELN supports scientists in collecting, managing, storing, analysing, and sharing data as a preparatory step to disclose data via a repository. NFDI4Chem will extend today’s notion of ELN by complementing its core functions with additional features (see software tools and libraries), thus broadening the scope of the ELN to a digital research environment. The ELN will be designed as an open, modular platform, combined with concepts to handle device integration and to transfer data interoperably to repositories or databases. It will offer interfaces to external sources and web services. The ELN to be developed covers the needs of multiple subdisciplines of chemistry. NFDI4Chem has chosen Chemotion ELN as reference service but will also consider other ELN used by the community (e.g. ElabFTW, Open Enventory). Chemotion ELN provides generic management tools as well as extensive functionalities for workflows in organic chemistry (

A cultural change in data handling and management in chemistry, as envisaged by NFDI4Chem, is necessary to implement the FAIR data principles. This change is an ambitious undertaking, in particular as new software and instruments have to be introduced, new workflows have to be established and the overall behaviour of the daily work has to be changed. NFDI4Chem will support the scientists in this process with a service for Teaching and Training and a Helpdesk to foster acceptance and use of the community. The teaching and training team is of high importance to foster the general acceptance of the infrastructure and to enable the scientists to use the components of the infrastructure in the right manner. NFDI4Chem will teach scientists about how to use the provided infrastructure for digitalised work according to the FAIR data principles and will raise awareness for its importance. The training team will explain the details of the single components of NFDI4Chem, and will train the use of especially the ELN and the repositories including the data transfer methods. The team will plan roadshows and will be available on request, offering not only theoretical advice but live demo sessions with the centrally hosted ELN and its functions.

Besides NFDI4Chem services, we will further build on NFDI services relevant to NFDI4Chem like the AAI-service for user authentication and authorisation, and on multi-disciplinary services like DataCite (via the TIB), ORCID, and Re3Data.

Contingency measures

The reliable and sustainable operation of the services provided by NFDI4Chem is of great importance for their acceptance by researchers. Extensive and multifaceted contingency measures are therefore planned.

Long-term data availability is boosted by the use of standardised data formats. In addition to the definition of such data formats, the consortium will also provide data converters and software components for the generation, viewing and verification of these formats. This is supported by the development of curation criteria that ensure data quality (see TA4). Central databases and repositories are supported both technically and organisationally in successfully passing certification (Core Trust Seal) and thus demonstrating their sustainability (see TA3). The repository operators thereby draw on the expertise of experienced infrastructure facilities. These facilities also offer established services for the long-term archiving of research data. At the same time, the consortium develops exit strategies for repositories and provides resources for data rescue in order to preserve important but no longer maintained data sets by transferring them to central repositories.

As software plays an increasingly important role in the digital transformation of chemistry, sustainable software design and software quality are important aspects. We will foster a modular software architecture with standardised components for reuse in multiple services provided by the consortium and beyond. We introduce Continuous Integration (CI) and Continuous Delivery (CD) processes in order to provide always workable and tested software in short development cycles even with distributed software development across the many participants. NFDI4Chem will broaden the knowledge of state-of-the-art software design and software quality by organising workshops in cooperation with the German chapter of the Association of Research Software Engineers (see LoS of de-RSE and R. Reussner). All software created in NFDI4Chem is developed as open source software and is published under OSI-compliant licenses on public repositories such as GitHub. Extensive and good documentation both of source code and of services as well as training for developers and users contribute to the growth of active communities that ensure the ongoing maintenance and further development of the services.

Work Programme

Overview of task areas

Table

|

Task Area |

Measures |

Responsible Co-Spokesperson(s) |

|

Management |

M1.1 - M1.4 |

Christoph Steinbeck |

|

Smart Lab |

M2.1 - M2.6 |

Nicole Jung |

|

Repositories |

M3.1 - M3.4 |

Felix Bach, Matthias Razum |

|

Standards |

M4.1 - M4.5 |

Steffen Neumann, Christoph Steinbeck |

|

Community Involvement and Training |

M5.1 - M5.6 |

Sonja Herres-Pawlis, Johannes Liermann |

|

Synergies |

M6.1 - M6.4 |

Oliver Koepler |

Task Area 1: Management

Description and General Objectives: TA1 provides adequate and lean leadership and support to all Task Areas in achieving their objectives. The highly collaborative and distributed nature of NFDI4Chem calls for an effective management structure and a sound decision making process to be in place, to ensure: efficient planning and controlling of project activities, seamless communication across partners, robust and transparent decision making, balance multiple responsibilities and competing priorities of consortium partners, prompt reporting and finally, successful project delivery. The overall management structure is illustrated in Fig.

The Project Office (PO) is located at the Friedrich-Schiller-University (FSU) in Jena where space and facilities are available for administrative purposes. The PO supports the Project Speaker as well as the steering committee in the day-to-day operational management of the project and handles the administrative management, the compliance to contractual obligations of the Consortium Agreement and the correct dissemination and exploitation of the project results. The PO is also responsible for the appropriate communication with the consortium and the DFG and will handle the financial administration and safeguard the adequate execution of the project budget. The PO will manage and monitor the project progress in order to meet the project objectives, handling time and resource constraints appropriately.

The PO is headed by Prof. Steinbeck and consists of the project management team with a project manager exclusively hired for this project, and experienced staff from the FSU administrative and financial team, the FSU funding coordinator Dr. Margull and the FSU press office as required during the course of the project. The PO members guarantee adequate administrative project controlling, coordination of the reporting, take care of financial and budgetary matters.

To summarize, the objectives of TA1 are:

O1.1: Efficiently manage the consortium activities to maximise NFDI4Chem's impact. If necessary, handling time and resource adjustments appropriately.

O1.2: Organise and document all NFDI4Chem services, consortium, advisory board and stakeholder meetings and decision making processes, as well as regular staff exchanges between the NFDI4Chem partners in collaboration with our consortium partners.

O1.3: Safeguard compliance with the contractual obligations of the Governance Model and correct dissemination and exploitation of the project results.

O1.4: Manage central funds to react to necessary future project extensions.

These objectives will be pursued through the following measures:

Measure 1.1: Overall legal, contractual, ethical, financial and administrative management of the consortium

Goals: Ensure the legal and financial operation of the consortium

Description: This measure will deal with the management of the project funding and the monitoring of the decision-making procedures, always in compliance with contractual obligations under the consortium agreement.

Task 1.1.1 Negotiate and conclude a consortium agreement with all partners

A consortium agreement will be negotiated with all partners to ensure a legally sound distribution of funding from the funder via the FSU to all co-applicants and participants. The consortium agreement will further set the framework for the successful project implementation and sets out the rights and obligations between the partners.

Task 1.1.2 Transfer of annual budget to partners. Retrieve and collate reports on the use of financial resources by partners

Based on the transfer agreement negotiated above, the FSU administration will ensure timely and correct transfer of funds to all partners. We will also collect all information for the reporting required by the DFG.

Task 1.1.3 Maintain communications with the NFDI headquarter and the DFG

This task comprises regular reporting to and communication with the DFG and the NFDI headquarters. Reporting to the DFG will adhere to their rules of resource usage

Deliverables: (D1.1.1) Consortium Agreement Document, (D1.1.2) Report on resource usage by partners, (D1.1.3) Open documentation of NFDI cross-cutting activities related to NFDI4Chem on portal.

Measure 1.2: Coordination at consortium level of the technical, outreach, training and cross-cutting activities of the project

Goals: Ensure the timely and precise execution of the work plan through effective and agile management of a highly distributed project.

Description: The NFDI4Chem speaker, assisted by the project manager for day-to-day management of the project, will be closely monitoring and coordinating the activities of the consortium based on the work plan laid out in this proposal.

Task 1.2.1 Organise and document consortium and TA meetings

As part of this task, we will organize monthly tele-meetings (Skype, Hangouts, phone) of the NFDI4Chem steering committee. Discussions and decisions will be minuted. We will invite national and international collaborating PI’s to participate if needed. Technical teleconferences of the Task Area participants and leads will be held separately and likewise individually documented.

Task 1.2.2 Organise and document advisory board meetings for NFDI4Chem

In close coordination with our learned societies, we will form the Advisory Boards (ABs) described above and maintain communication with the ABs through meetings which will be held at least bi-annually. ABs will be invited to join the annual consortium meetings organised in this TA. AB’s will be consulted for advice on questions of strategic importance for NFDI4Chem and their advice will be evaluated within the consortia and with neighboring consortia as well as used to steer the future directions of NFDI4Chem.

Task 1.2.3 Day-to-day management of the NFDI4Chem project

This task will be dedicated to the execution of the work plan as laid out in this proposal. At the beginning of the project we will produce a detailed project plan which will include a list of success indicators to monitor during the whole project, as well as the data we will gather that will help in assessing its impact. These indicators and metrics will be reported at least in the annual reports. It will organise, focus, continually motivate, and empower the project staff to do their work. It will perform controlling of the project, by tracking the work and comparing it and results against the this work plan. It will use information from these tracking efforts to make changes to plans when the information suggests that a change is called for. It will continuously monitor project risks and mitigate them if necessary.

Deliverables: (D1.2.1) Continuously updated protocols and minutes of consortium and TA meetings, (D1.2.2) Minutes of Advisory Board meetings published on portal, (D1.2.3) Open project plan continuously updated on portal

Measure 1.3: Coordination of knowledge management, Internet Publishing System and other innovation-related activities

Goal: The consortium, the NFDI as a whole, and the chemistry community will be holistically informed about the work of NFDI4Chem

Description: Efficient, complete and accessible information about NFDI4Chem’s work, progress and results will be maintained and disseminated via our internet publishing system and social media. This will allow our users and stakeholders, the consortium, the NFDI as a whole as well as the wider public to evaluate our progress and best use our services.

Task 1.3.1 Develop, maintain and host an informative portal