
Research Ideas and Outcomes

Conference Abstract


Corresponding author: Pedro Hernandez Serrano (p.hernandezserrano@maastrichtuniversity.nl)

Received: 17 Sep 2022 | Published: 12 Oct 2022

© 2022 Pedro Hernandez Serrano, Vincent Emonet

This is an open access article distributed under the terms of the Creative Commons Attribution License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Citation:

Hernandez Serrano P, Emonet V (2022) The FAIR extension: A web browser extension to evaluate Digital Object FAIRness. Research Ideas and Outcomes 8: e95006. https://doi.org/10.3897/rio.8.e95006


Abstract

The scientific community has increased its efforts to apply and assess the FAIR principles on Digital Objects (DO) such as publications, datasets, or research software. Consequently, openly available automated FAIR assessment services, such as FAIR enough, the FAIR evaluator, or FAIRsFAIR's F-UJI, have been working on standardization. Digital Competence Centers such as University Libraries have been paramount in this process by facilitating a range of activities, such as awareness campaigns, trainings, or systematic support. However, in practice, using the FAIR assessment tools is still an intricate process for the average researcher. It involves a steep learning curve, since learning the frameworks means performing a series of manual processes that require specific knowledge, which disengages some researchers along the way.

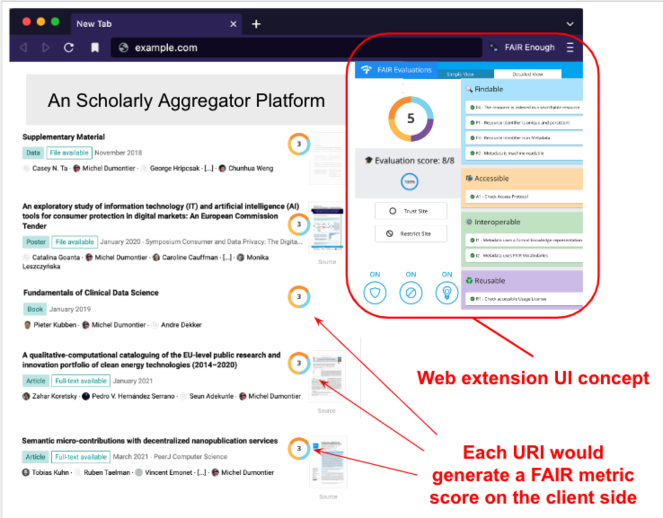

We aim to use technology to close this gap and make this process more accessible by bringing the FAIR assessment to researchers' profiles. We will develop "The FAIR extension", an open-source, user-friendly web browser extension that allows researchers to perform FAIR assessments directly at the web source. Web browser extensions have been an accessible digital tool for libraries supporting scholarship (

The FAIR extension is a service that builds on top of the community-accepted FAIR evaluator APIs, i.e. it does not intend to create yet another FAIR assessment framework from scratch. The objective of the FAIR Digital Objects Framework (FDOF) is for objects published in a digital environment to comply with a set of requirements, such as identifiability, and the use of a rich metadata record (
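
The idea of building on existing evaluator APIs, rather than re-implementing the metrics, can be sketched as follows. This is a minimal illustration and not the extension's actual code: the endpoint `EVALUATOR_URL`, the request field `subject`, the response shape, and the metric names are all assumptions made for the example, and the HTTP call is replaced by an injected stand-in so the sketch runs offline.

```python
import json
from typing import Callable, Dict, List

# Hypothetical endpoint: NOT the real URL of any FAIR evaluator service.
EVALUATOR_URL = "https://evaluator.example.org/evaluations"

# A transport takes (url, json_body) and returns the response body as text.
Transport = Callable[[str, str], str]


def build_request(subject: str) -> str:
    """Serialize the identifier (URL or DOI) of the Digital Object to assess."""
    return json.dumps({"subject": subject})


def summarize(response_text: str) -> Dict[str, int]:
    """Reduce an assumed response shape to passed/total counts.

    Assumed shape: {"results": [{"metric": str, "passed": bool}, ...]}
    """
    results: List[dict] = json.loads(response_text)["results"]
    passed = sum(1 for r in results if r["passed"])
    return {"passed": passed, "total": len(results)}


def assess(subject: str, transport: Transport) -> Dict[str, int]:
    """Delegate the assessment to an external evaluator and summarize it."""
    return summarize(transport(EVALUATOR_URL, build_request(subject)))


def fake_transport(url: str, body: str) -> str:
    """Stand-in for an HTTP POST; returns a canned, illustrative response."""
    return json.dumps({"results": [
        {"metric": "example-metric-1", "passed": True},
        {"metric": "example-metric-2", "passed": False},
    ]})
```

Calling `assess("https://doi.org/10.3897/rio.8.e95006", fake_transport)` returns the passed and total counts from the canned response. In a real extension, the transport would be an HTTP request to whichever evaluator service is configured, and the parsing would follow that service's documented response format.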

We acknowledge that the development of web-based tools carries some constraints regarding platform version releases, e.g. the Chromium Development Calendar. Nevertheless, we are optimistic about the potential use cases. For example:

- A student who wants to use a DO (e.g. a software package) but does not know which one to choose. The FAIR extension will indicate which one is more FAIR and aid the decision-making process

- A data steward recommending sources

- A researcher who wants to display all FAIR metrics of her DOs on a research profile

- A PI who wants to evaluate an aggregated metric for a project.
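
Two of the use cases above, comparing candidate DOs and aggregating a metric over a project, can be illustrated with a short sketch. The scoring rule used here (the fraction of passed tests per DO, averaged over the project) is an assumption chosen for simplicity, not a method stated by the authors, and the function and object names are hypothetical.

```python
from statistics import mean
from typing import Dict, List

# Maps a DO name to the pass/fail outcome of each FAIR test run on it.
Results = Dict[str, List[bool]]


def fair_score(outcomes: List[bool]) -> float:
    """Fraction of passed tests; 0.0 when no tests were run."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


def rank(objects: Results) -> List[str]:
    """Order DO names from highest to lowest score."""
    return sorted(objects, key=lambda name: fair_score(objects[name]), reverse=True)


def project_score(objects: Results) -> float:
    """Unweighted mean of the per-DO scores; 0.0 for an empty project."""
    if not objects:
        return 0.0
    return mean(fair_score(outcomes) for outcomes in objects.values())
```

With illustrative data such as `{"package-a": [True, True, False, True], "package-b": [True, False, False, False]}`, `rank` places `package-a` first and `project_score` averages the two per-DO fractions. A weighted scheme (for example, weighting by principle) would be an equally plausible design and is left open here.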

These use cases can be a means of bringing the open-source community and FAIR DO interest groups to work together.

Keywords

FAIR assessment, FAIR Digital Objects, FAIR metrics

Presenting author

Pedro Hernández Serrano

Presented at

First International Conference on FAIR Digital Objects, poster

Hosting institution

Maastricht University

Conflicts of interest

The authors of this paper declare that there are no conflicts of interest regarding this work.

References

- The prevalence of web browser extensions use in library services: an exploratory study. The Electronic Library 33(3): 334‑354. https://doi.org/10.1108/el-04-2013-0063

- Leaning into Browser Extensions. Serials Review 45: 48‑53. https://doi.org/10.1080/00987913.2019.1624909

- FAIR Digital Object Framework Documentation. https://fairdigitalobjectframework.org/. Accessed on: 2022-7-10.

- Communications in Computer and Information Science. pp. 3-16. https://doi.org/10.1007/978-3-030-23584-0_1

- A design framework and exemplar metrics for FAIRness. Scientific Data 5: 180118. https://doi.org/10.1038/sdata.2018.118

- Evaluating FAIR maturity through a scalable, automated, community-governed framework. Scientific Data 6(1): 174. https://doi.org/10.1038/s41597-019-0184-5