|

Research Ideas and Outcomes : Grant Proposal

|

|

Corresponding author: Daniel Mietchen (daniel.mietchen@mfn-berlin.de)

Received: 21 Dec 2015 | Published: 22 Dec 2015

© 2016 Daniel Mietchen, Gregor Hagedorn, Egon Willighagen, Mariano Rico, Asunción Gómez-Pérez, Eduard Aibar, Karima Rafes, Cécile Germain, Alastair Dunning, Lydia Pintscher, Daniel Kinzler.

This is an open access article distributed under the terms of the Creative Commons Attribution License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Citation: Mietchen D, Hagedorn G, Willighagen E, Rico M, Gómez-Pérez A, Aibar E, Rafes K, Germain C, Dunning A, Pintscher L, Kinzler D (2015) Enabling Open Science: Wikidata for Research (Wiki4R). Research Ideas and Outcomes 1: e7573. doi: 10.3897/rio.1.e7573

|

|

Abstract

Wiki4R will create an innovative virtual research environment (VRE) for Open Science at scale, engaging both professional researchers and citizen data scientists in new and potentially transformative forms of collaboration. It is based on the realizations that (1) the structured parts of the Web itself can be regarded as a VRE, (2) such environments depend on communities, (3) closed environments are limited in their capacity to nurture thriving communities. Wiki4R will therefore integrate Wikidata, the multilingual semantic backbone behind Wikipedia, into existing research processes to enable transdisciplinary research and reduce fragmentation of research in and outside Europe. By establishing a central shared information node, research data can be linked and annotated into knowledge. Despite occasional uses of Wikipedia or Wikidata in research, significant barriers to broader adoption in the sciences or digital humanities exist, including lack of integration into existing research processes and inadequate handling of provenances. The proposed actions include providing best practices and tools for semantic mapping, adoption of citation and author identifiers, interoperability layers for integration with existing research environments, and the development of policies for information quality and interchange. The effectiveness of the actions will be tested in pilot use cases. Unforeseen barriers will be investigated and documented. We will promote the adoption of Wiki4R by making it easy to use and integrate, demonstrate the applicability in selected research domains, and provide diverse training opportunities. Wiki4R leverages the expertise gained in Europe through the Wikidata and DBpedia projects to further strengthen the established virtual community of 14000 people. As a result of increased interaction between professional science and citizens, it will provide an improved basis for Responsible Research and Innovation and Open Science in the European Research Area.

Keywords

Virtual Research Environment, Wikidata, identifiers, citizen science, collaboration, concept mapping, ontologies

Context

This article describes a research proposal that was submitted on January 14, 2015, to the European Commission's H2020-EINFRA-2015-1 call for proposals for e-Infrastructures for virtual research environments (VRE). Its main proposition was to build this VRE by integrating research workflows with the Web through Wikidata, an existing open environment for managing structured information collaboratively across all domains of human knowledge.

Wikidata is being built entirely using open software and open content, and it had a community of over 14,000 monthly contributors worldwide at the time, which has since risen to over 16,000. A VRE built on that basis would thus avoid some of the problems traditionally plaguing many VREs: software and/ or content that are proprietary and hence siloed, and a lack of community uptake.

Moreover, Wikidata's public version histories of both content and software (another feature missing in many VREs) provide for a solid basis for open science, which emerged as a new priority of the European Commission's research activities (and elsewhere) over the course of the year.

The project proposed to prototype the integration of Wikidata with a set of workflows that are either transdisciplinary (e.g. for handling scholarly references) or particular to specific use cases like chemistry or mineralogy. Apart from project management (WP 1), the individual workpackages then focused on

- (WP 2) describing research-relevant concepts (e.g. molecules, chemical reactions or journal articles) in terms of Wikidata's continuously evolving data model and with a view to maximize compliance with Web standards;

- (WP 3) establishing exchange and curation workflows between Wikidata and external databases hosting information about these concepts (e.g. identifier mapping);

- (WP 4) using Wikidata content in research workflows (e.g. querying it, or using its identifiers in research notebooks), including citizen science projects;

- (WP 5) training, education and outreach around these topics, with a focus on creating openly licensed educational materials that could be reused in other contexts.

Several of the activities proposed in the framework of the project have since experienced progress due to support from different parts of the community. For instance, there are now several mechanisms to query Wikidata in real-time or nearly so, some of which are using SPARQL (cf. Task 4.1). This allows, for instance, to get a list of countries ordered by the number of their cities with a female mayor. Basic support for units is now available too (cf. Task 2.1), and the integration of bibliographic metadata of scholarly citations (part of Task 3.1) is moving forward.

On the other hand, some of the proposed activities have seen little to no progress over the year, although we continue to see them as valuable for the community. These include, for instance, the integration of the European Library's dataset of bibliographic metadata for books into Wikidata (another part of Task 3.1) or Task 3.3, concerned with

- identifying potential external data sources suitable for integration with Wikidata and

- exploring the benefits for data providers to engage in such an open sharing of their data.

or Task 4.4, which was about

- involving Wikidata in scientific curation workflows

- connecting Wikidata with citizen science projects

as well as the reuse of data from the Horizon 2020 Open Data Pilot (part of Task 3.1), or most of WP 5, e.g. the development of online tutorials and course materials.

Some training events (cf. Task 5.5) have already been organized by the community, e.g. at the Semantic Web applications and tools for life sciences (SWAT4LS) conference earlier this month by the Gene Wiki team, whose NIH-supported work on Wikidata had inspired our Wiki4R proposal and who also received that conference's Best paper prize for their contribution "Wikidata: A platform for data integration and dissemination for the life sciences and beyond".

Like most of the submissions to this heavily oversubscribed call, our proposal was rejected. While this may have been appropriate in the context of this specific call (hard to tell, since there is no public information about which projects were submitted, and why the winning ones were chosen over the others), we think that the ideas we put into the proposal are still worth pursuing, and we want to encourage others to build on our proposal to move forward with the integration of research workflows with Wikidata.

There are some signs that this may indeed be happening: the WikiProject Wikidata for Research, which was initially started about a year ago in order to facilitate the open and collaborative drafting of our proposal (including assembling the group of project partners) has developed into a platform for sharing information at the interface between Wikidata and research, and about one third of its ca. 50 participants have signed up after our proposal was submitted. Some of them have, independent of us, explored how medical content on Wikipedia could benefit from closer integration with scholarly databases through Wikidata (similar to Task 3.1), and published a paper about it, while the Wikimedia Foundation recently funded a proposed project to mine selected external websites for facts that could be turned into statements on Wikidata, with the URL of that external site serving as a reference (similar to Task 3.2). Of note, a number of external websites and services have started to use Wikidata identifiers in their workflows (cf. Task 4.3), many of them in the area of cultural heritage (cf. Task 4.5).

In preparing the proposal for publication, we kept as closely as possible to the original text (which is still available as a preprint on Zenodo) and whose DOI was included in the original submission. Bothe the preprint and this version are missing the letters of support, since the logos in the letterheads are under copyright of the respective institutions and cannot be posted under an open license. Similarly, the reviews cannot be posted here, since we do not know who has the copyright, and whether they would consent to this publication. We encourage funders to make it simpler for applicants to share the reviews of their proposals, and we will update this article should we receive permission to post the reviews.

Except for adding this Context section, the changes made here were the addition of some metadata sections (e.g. Keywords, Funding program) and a few copyedits (typos and making sure all tables and figures are actually cited in the text). We also added an Acknowledgements section and dropped Anonymous as co-author in order to comply with journal policy.

Excellence

Participants are listed in

List of participants

|

Participant No |

Participant organisation name |

Country |

|

1 (Coordinator) |

Museum für Naturkunde Berlin (MfN) |

Germany |

|

2 |

Universidad Politécnica de Madrid (UPM) |

Spain |

|

3 |

Maastricht University (UM) |

Netherlands |

|

4 |

Wikimedia Deutschland (WMDE) |

Germany |

|

5 |

Universitat Oberta de Catalunya (UOC) |

Spain |

|

6 |

Europeana Foundation (EF) |

Netherlands |

|

7 |

Université Paris Sud (UPS) |

France |

Objectives

The overarching goals of the proposed project are:

- To support the application of Open Science principles1 by leveraging and strengthening an existing trusted, shared, long-term-sustainable, collaborative platform for the curation of open data.

- To enable researchers across Europe and beyond to perform transdisciplinary research, overcoming the fragmentation of virtual research environments (VREs) and separated data silos by moving to an open, shared data environment with collaborative data curation.

- To support increasingly rich interlinking of information and digital knowledge representations that can be used by both humans and machines.

- To increase the capabilities of both professional and citizen scientists and the capacities of their organisations to collaborate with each other in mutually beneficial and potentially transformative ways.

- To support the development of Open Science policies and, through increased dialogue between scientists and citizens, foster Responsible Research and Innovation in the European Research Area.

1We will use the terms Open Science and Open Research interchangeably here, with the understanding that no domain of research – academic, industrial, governmental or other – should be regarded as excluded from using an open approach. We will underline that by running this transdisciplinary project itself as an open project, where not only the results but also the process of research are made transparent if feasible.

To achieve these goals, we will build a VRE on the basis of Wikidata, the database that anyone can edit, which serves as the semantic backbone for structured data in Wikipedia and its sister projects. It represents a massive development step and paves the way towards a structured future of Wikipedia. Upon its launch, Sue Gardner, Executive Director of the Wikimedia Foundation, wrote: “Before Wikidata, Wikipedians needed to manually update hundreds of Wikipedia language versions every time a famous person died or a country’s leader changed. With Wikidata, such new information, entered once, can automatically appear across all Wikipedia language versions. That makes life easier for editors and makes it easier for Wikipedia to stay current."

Wikidata is a major open data platform for massive online collaboration and is designed to share “the sum of all human knowledge”, including all domains of scholarly and scientific knowledge. It has already become a major focus point for sharing scholarly as well as technical information. As a key element of the wider landscape of citizen-driven open knowledge initiatives, Wikidata is unfolding with the active participation of a global and multilingual community of volunteers – more than 14,000 of them contribute to the project on a monthly basis.

By building on Wikidata, the proposal avoids the problems of many other VRE platforms that struggle to find sufficiently large communities of users. An ecosystem of infrastructure, technologies and tools already exists, but the interactions with professional research are insufficiently developed. Most professional science and citizen science projects presently work on completely separate digital platforms. The present project addresses known barriers and investigates the yet unknown ones. By adding innovative features required for research workflows, providing help with semantic mapping, providing best practices and training materials, and researching pilot use cases, this project will enable the Wikidata platform to develop from a virtual environment to a virtual research environment for Open Science, engaging both professional researchers and citizen data scientists in new and potentially transformative forms of collaboration.

The specific objectives of the project are:

1. Goal 1:

1.1. to provide a VRE where contributions are transparent and trusted because all changes are accountable and a full history of changes is publicly available;

1.2. to enhance the verifiability of statements by accompanying them with provenance and references (Task 3.2);

1.3. to increase the scale and immediacy with which scientific information is made globally available to both people and machines, and curated by them;

2. Goal 2:

2.1. to support transdisciplinary science by promoting the adoption of a single open data VRE platform across disciplines from the natural sciences to the humanities;

2.2. to establish a single open-data VRE platform by piloting it for a set of core use cases (chemistry, library and information science, biodiversity science, mineralogy and the cultural heritage sector);

2.3. to promote the open-source Wikibase software (of which Wikidata is an installation) as a framework also installable and adaptable for institution-internal knowledge management;

3. Goal 3:

3.1. to establish the use of stable identifiers for scientific objects and concepts (which are dereferenceable, i.e. can be actively followed to obtain further information) in a pilot of 5 large and 10 smaller scientific datasets (WP2 and Task 3.1);

3.2. to increase the interlinking of information by making a large number of scientific objects and concepts citable in a long-term stable and sustainable manner in the Linked Open Data Cloud;

4. Goal 4:

4.1. to develop and provide documentation, training materials, tutorials, open educational course materials, best-practices advice, as well as dissemination and community engagement events (WP5);

4.2. to increase the number of citizen data scientists and citizen data curators interacting with professional scientific researchers and professional research organisations with the aim to increase the quality of the data available for all (Task 4.5);

4.3. to increase the number of external citizen science projects that use Wikidata (Task 4.5);

5. Goal 5:

5.1. to participate in the EC’s Open Data Pilot as both provider and re-user of data from multiple domains, which can inform quality improvement measures for the Pilot;

5.2. to analyse the motivations for the open sharing of data in research contexts, identify best practices, and distill recommendations on the design and implementation of institutional and overarching data-sharing policies.

Relation to the work programme

The proposal is strongly aligned with the specific challenges of the Horizon 2020 call for “e-Infrastructures for virtual research environments (VRE)”. It will address the challenge of capacity-building in interdisciplinary European research by adapting a trans-disciplinary collaborative open-data knowledge platform to the needs of professional researchers (WP2–4), developing best practices for and providing training around that (WP5). Being web-based and supporting standard forms of knowledge expression, knowledge integration and computing, the proposed VRE will integrate resources across all layers of the e-infrastructure. Its strengths will be full and inherent transdisciplinarity and mechanisms to support openness with respect to standards, transparency and accountability; options for dissent; and mechanisms to encourage consensus-building. It will provide significant computing resources to improve the analysis and integration options of both existing general knowledge and the newly integrated scientific data.

The Wiki4R VRE will be modular. The existing Wikidata infrastructure already fulfils several requirements of a modern VRE:

- abstraction from underlying infrastructures,

- the use of open source software with a large global developer base,

- the use of globally accessible, well-documented interfaces and APIs,

- the use of web-based workflows,

- a service-oriented architecture supporting both human and machine use,

- an ecosystem of tools interacting with the core infrastructure, and

- full interoperability with standard Semantic Web technologies.

The action will specifically address the presently tentative connection between Wikidata stakeholders (open-source volunteer developers from civil society) and professional researchers and their organisations. With its limited resources, the project will target on-platform development that is beneficial for research across domains. However, we expect that future extensions – by both the project partners and others – will create an ecosystem of domain-specific functionality around this VRE through both on- and off-platform enhancements. Wikidata and DBpedia will provide generic data storage, integration, curation and analysis services to a multilingual open-data user community, with strong attention to trust, provenance, accountability, and verifiability. All data on Wikidata will be open-access. Domain- and discipline-specific services from public, private and commercial research institutions will build on this foundation.

The Wiki4R VRE will be relevant for data-oriented research that addresses a broad range of societal challenges, from the natural sciences (including health, climate research, environment, agriculture and forestry), to engineering (including energy and transport), mathematics, information science, the digital humanities, education and unsupervised learning. The project partners span major branches of the natural sciences, the arts and humanities, the cultural and natural heritage sector, and civil society, along with partners with information science and Semantic Web expertise. Due to the open and participatory nature of Wikidata, people and organisations outside the partner consortium will be free to contribute. Challenges regarded as important by citizens will be addressable: no restrictions will be imposed by the consortium partners on the type and usage of data.

The greatest strength of the action will be new ways of a wide spectrum of citizens in research, data analysis and knowledge sharing, leading to a more inclusive, innovative and reflective European society. Open knowledge and the engagement of citizens and society in a responsible research and innovation process resonate strongly with European values. Global initiatives with a European basis – like the Open Knowledge Foundation, Wikimedia’s Wikidata and DBpedia – provide very valuable contributions to that.

Concept and approach

The research agenda for VREs by Candela et al. (2013) highlights that usability, sustainability and the reuse of services and resources should be built into the design, and that VREs should be integrated with other existing infrastructure in a mutually beneficial way, enhancing their sustainability and their value in the eyes of broader research communities. Promoting a VRE user community early in development will be critical to ensuring sufficient uptake for the sustainability of VREs and the potential for future research on improving them. Ideally, the VRE should come to be seen as an essential technology for the target community; this will require both social and technical innovation. As Candela et al. suggest, the “focus should be primarily on using technology to identify and rationalise workflows, procedures, and processes characterising a certain research scenario rather than having technology invading the research scenario and distracting effort from its real needs.”

Many existing VREs are designed as secure and closed "remote desktop" systems, in which a selected and managed number of researchers can work, and which provide feature-complete, controlled and customised data access and computing services to the larger world. This approach clearly has its applications; but it typically does not scale well to a large number of researchers with diverse research needs. One reason for this concerns the management of access and collaboration rights. If researchers fall into few well-defined groups with standard tasks (e.g. citizen scientists with homogeneous data collection rights), large numbers can be managed. However, researchers typically have very diverse needs. This can extend to citizen scientists, if they are considered as partners who collaborate in the full research process. The rights management for a VRE with a large number of users – possibly 100,000 – could become prohibitively expensive. Another reason concerns VRE-specific methods of access to external data and services, the sum of which can be expensive to implement and sustain. This limits this type of VREs with respect to the number and diversity of research questions and typically results in discipline-specific VREs. Finally, while the results from such a VRE may be channelled into open-access publications, such VREs typically do not easily lend themselves to open science, where the research process itself is shared (

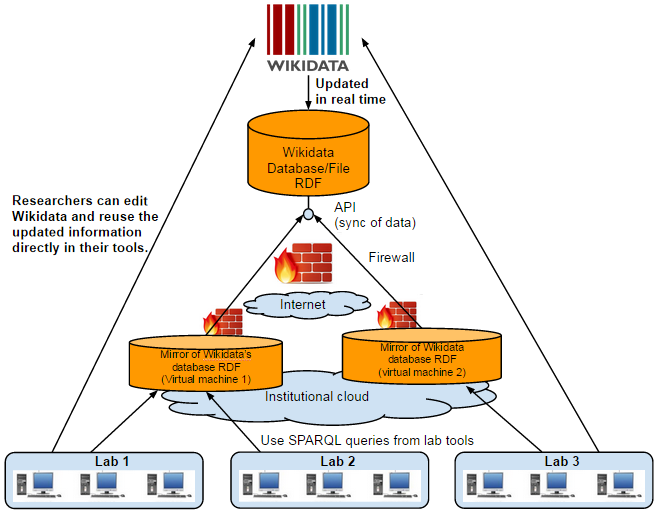

Outline of envisioned platform where Wikidata content can be used within an institutional firewall.

Our proposal does not attempt to build such a secured, highly customised, discipline-specific research environment with expensive-to-maintain, purpose-tailored tools. Instead, it focuses on the needs of open science and empowering researchers to work together across disciplines in an open environment. Ultimately, the web itself – especially the Semantic Web combined with web-based computing services – is the full VRE. Specific components, such as the Wikidata–science connection developed here, are components of this VRE. They provide services to the web, but do not limit the use of the web and its services.

The concept of open science (alternatively called “open research” if disciplines like philosophy, history, are not included in the definition of “science”) is central to this proposal. Open science is highly inclusive, inviting collaboration from professional peers as well as other interested parties, including citizen scientists. It is also open with respect to the process, inviting collaboration and providing access to research data as soon as they are created.

Managing openness is not a trivial exercise. It requires both appropriate technical foundations and procedural and social competencies, rules and policies. Openness and collaboration need to be maximised, dissent needs to be possible, consensus needs to be encouraged, all actions need to be owned, and all actors held accountable. Contributions must be not only technically documented, but also suitably acknowledged with provenance-related information that may be available to both humans and machines, yet transparent to the search process for content.

Many of the technologies and policies have already been developed in the context of Wikipedia and Wikidata. However, there is no automatic adoption of these techniques by professional science. We identify social barriers, especially a lack of examples and lack of training, but also technical gaps. The project therefore studies these problems. It works both on enhanced technical solutions and on providing professional researchers with examples, guidelines and best practice documentation as to how their data can be shared openly and integrated with other open data (see “Optimizing openness”, T3.3).

The Wiki4R VRE, which will be tested in a number of pilot cases, supports web-based, discipline-neutral, standard analysis methods. It empowers researchers to share data widely, to integrate and interlink research data with general, non-discipline-specific data, to cite data and have their own data cited. Finally, it empowers researchers to share the burden of data curation between professional science organisations and citizen data scientists/citizen data curators.

The open-science approach will not work for all possible domains of systematic inquiry (e.g. industrial research aiming at patentable results). If legal, data privacy, commercial, or other considerations prevent openness, it will be necessary to design mechanisms that allow certain data to be stored privately. Aspects of data privacy will be carefully considered where applicable, and the Wikimedia Foundation’s privacy policy will be enforced.

However, where open science is possible, it will be highly desirable. By increasing opportunities for collaboration, by increasing transparency and trust, by providing research progress and feedback opportunities as early as possible, and by providing more examples for students to learn from, research and education will become more efficient.

Factors that facilitate the uptake of the Wiki4R VRE for open science use are open data, coverage of all domains of knowledge, a powerful API and strong support for multilingualism (in terms of the data, the community, and the user interface). The use of a CC0 license will avoid forced attribution stacking for primary data, while at the same time mechanisms support and encourage proper scholarly citation. The deep integration of Wikidata with Wikipedia and its sister projects provides an excellent basis for recognition, long-term sustainability, and high impact. The consortium expects to have fewer issues with the credibility, sustainability and longevity concerns most EU projects typically fight with. One limitation that the present action will address is a lack of acceptance as a VRE for professional research. A key goal of the actions will be to ensure that the Wiki4R VRE will be readily accepted by many partners outside the consortium. The number of researchers targeted by this action is therefore potentially very high.

An important action is the integration of semantic entities and ontologies between research data and existing Wikidata items and properties to improve interoperability (WP2). The study and alignment of ontologies in this context is based on the realisation that research is dynamic: in the foreseeable future, no single ontology will be able to cover the needs of all diverse research groups. Even within disciplines, ontologies will be constantly evolving. Wikidata therefore supports an agile development of multiple ontologies, including the option that ontologies developed by different researchers may be contradictory. The choice of a single ontology will often be required for analysis purposes. The fundamental system is not built for a priori “truth”, but for discourse and research. Thus, instance/subclass assertions will underlie the same requirements for ownership and provenance reference as all other statements.

Sharing the semantics of research data early in the research process has great potential in increasing the efficiency of research. Doing so enables the expression of knowledge by the authoritative researchers, rather than the kind of guesswork that typically has to occur when adding semantic tagging to research publications that are not inherently semantic (but, for example, have been published as PDF). Another problem addressed is the problem that identifiers for concepts or objects may be required for further work long before the formal results are finally published. Even without full open science, much is being reported on conferences and in communications that influences the research of other groups and makes it desirable to semantically refer to new objects and concepts as early as possible. The creation and dissemination of appropriate identifiers should be one of the first steps in an open-science process (

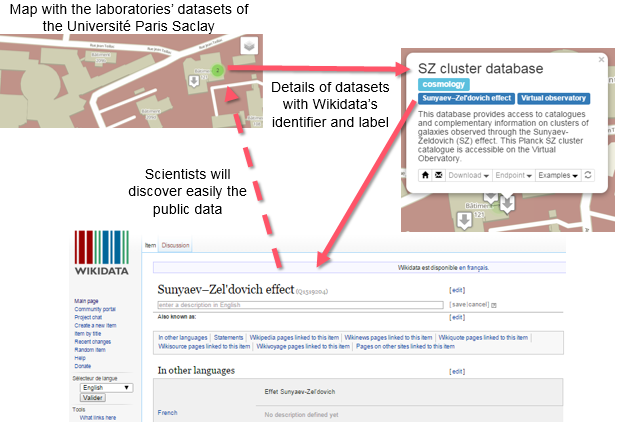

Prototype of the platform at the Center for Data Science of Paris-Saclay, where Wikidata identifiers are already used to link public data and public research data.

The proposed social and technical design of the Wiki4R VRE addresses the needs and requirements of a diverse network of project partners and associate partners, who offer use cases for data deposition and reuse as well as provision of value-added services. There are still comparatively few examples of Wikidata use in research contexts, therefore the project will include the development of new projects as well as associated tools and infrastructure that support these and existing use cases. Several partners are specifically interested in the integration of heterogeneous data from multiple sources, for example:

- providing bibliographic metadata across scholarly domains for direct linking with research data

- integration of data across chemistry, between chemistry and biology and between chemistry and mineralogy

- integration of metadata about heritage collections

- integration of multilingual data.

The Wiki4R VRE will exist in an ecosystem of tools, services and infrastructures at national and European levels. Several parts of the ecosystem have been developed by project partners, maximising the ease with which the present project can build upon these developments. Examples include The European Library (part of the Europeana Foundation, providing access to the catalogues of 48 National and Research Libraries of Europe) and Open PHACTS (providing pharmacological data to aid drug discovery). Further examples are portals for advanced data exploration, multilingual integration, and semantic linking of data. Examples of transdisciplinary and multilingual data-driven research include analysing relationships between data items, such as the social and intellectual relationships between ancient philosophers, linkages between scientific facts extracted from the scholarly literature, or the detection of biases across many languages (see also gender bias examples further down). Value-added data mining services such as ContentMine have already defined use cases for automated deposition and retrieval of data in the context of a social architecture. They combine use of structured data from Wikidata to find connections between incoming data streams from the scientific literature with community curation. A number of visualisation projects use Wikidata, for example to analyse networks of ancient philosophers.

The existing Wikidata community includes domain specialists such as the editors of WikiProject Chemistry. In many cases, this means that mapping and external links to relevant resources already exist, of which the project can take advantage.

The following research innovation activities are especially relevant for Wiki4R:

The Europeana Foundation and The European Library (already mentioned above) are supported as part of the EU’s Digital Agenda and expected to be funded as part of the EU’s Connected Europe Facility. The Europeana Foundation acts as the central aggregator for data about Europe’s culture heritage. Europeana has aggregated 32 million records related to digitised objects in Europe’s museums, archives and libraries. For this purpose, Europeana has developed a Europeana Licencing Framework to harmonise the rights statements about digitised content and a related metadata model (the Europeana Data Model) for creating interoperable metadata from different domains. These two pillars ensure that the data available from Europeana - which will put onto Wikidata as part of this project - is all marked as CC0 and available as standardised Linked Open Data.

In addition to the central aggregator, various other specific aggregators focus on specific areas of the cultural sector (e.g. museums or archives). In the context of this proposal, The European Library will make its dataset of over 90 million bibliographic records available, i.e. data about published books. The data is drawn from national and research libraries across Europe - in essence, the European Library dataset acts as the record of publication in Europe. Similar to Europeana, the dataset is available as CC0 and as standardised LOD of national, regional, and local endeavours where complementarities and new challenges are clearly identified and acted upon.

RENDER is a finished FP7 project which provided a comprehensive conceptual framework and technological infrastructure for enabling, supporting, managing and exploiting information diversity in Web-based environments. Diversity was viewed as a crucial source of innovation and adaptability, ensuring the availability of alternative approaches towards solving hard problems, and provides new perspectives and insights on known situations. Equally important, embracing diversity in information management is essential for enhancing state-of-the-art technology in this field with novel paradigms, models, and methods and techniques for searching, selecting, ranking, aggregating, clustering and presenting information purposefully to users, thus alleviating critical aspects of information overload. The concepts, methods, techniques and technology developed by RENDER will especially inform the diversity approach for Wiki4R.

Gene Wiki (an Associate partner, via Andrew Su at Scripps) is a highly relevant project for our purpose in that GeneWiki successfully implements the dissemination of professional research information into Wikipedia and increasingly Wikidata. Its focus is on human genes, diseases and drugs, which has some very limited thematic overlap with our activities on metabolites (see also T3.1). Methodologically, we envisage a close collaboration, e.g. in terms of sharing in the development of code for curating Wikidata items and in collaborating with relevant WikiProjects in the semantic modelling parts (WP2). There is also a conceptual overlap in that some of our proposed activities map well onto activities that were contained in the Gene Wiki proposal but not approved for funding. This includes a training component (our T5.2-T5.5) but also the idea of partnering with a journal (cf. T5.1). The Gene Wiki project is to run until April 2018, so we envisage organizing a joint session during at least one of the events we are both attending (e.g. during Wikimania 2016, which is to take place in Italy ). Wiki4R will build on the experiences of GeneWiki.

Wikimedia Deutschland is partner in the Commons European Training Network (CONCERTO) proposed by the Autonomous University of Barcelona under the MARIE Skłodowska-CURIE ACTIONS Innovative Training Networks (ITN), Call H2020-MSCA-ITN-2015. This ITN, if funded, will provide a cross-disciplinary approach to research of global, local, digital and non-digital commons and initiate 15 individual research projects, including one on knowledge commons. Synergy effects between CONCERTO and Wiki4R would provide a better understanding of the mechanisms and policies supporting and governing diverse multi-stakeholder knowledge commons such as Wikidata.

The Blue Obelisk Movement is an informal organization of chemistry promoting and developing tools for Open Data, Open Source, and Open Standards (ODOSOS) in chemistry. Besides many cheminformatics tools, they also maintain a manually curated, CC0-waived knowledgebase of element and isotope properties which will be used in the Wiki4R pilots.

Multimedia Objects in Open-Access Publication, a recent DFG (German Science Foundation) application by the German National Library of Science and Technology (TIB) will investigate the “harvesting, indexing and provision of multimedial open access objects using the infrastructure of Wikimedia Commons and Wikidata”. If funded, this action will provide significant synergies with Wiki4R, which will be leveraged through the Leibniz research network Science 2.0, in the framework of which TIB collaborates with MfN and WMDE.

Data analysis tools like Cytoscape or R and workflow systems like Taverna are frequently used in research, and Wiki4R is interested in integration with these tools. Various consortium partners are involved in projects that expose data platforms in such tools. These projects include BioVEL/ViBRANT, Wf4Ever, and eNanoMapper, all aimed at making data integration and analysis easier.

Other relevant past and present projects are OpenUp! (natural history media aggregator into Europeana), OpenAIRE (EC Open Access Infrastructure for Research in Europe), and Zenodo (open access literature repository, used also by OpenAIRE).

Wiki4R will focus on use case scenarios of the VRE that cross disciplinary boundaries in that they:

1. are useful for all disciplines: scholarly publications and associated multimedia, ontology development, metadata about institutions or researchers;

2. cover a broad range of disciplines (H2020 Open Research Data Pilot, citizen science, GLAM collections, structured historical information, big data);

3. focus on information from chemistry that is used in a wide range of other disciplines (from agriculture to medicine and pharmacology to palaeontology and mineralogy to the restoration of paintings);

4. focus on information from biological taxonomy that forms the basis for research in an entire field (life sciences);

5. range from monolingual to multilingual;

6. give guidance to software development representative for a diverse set of potential users.

Ambition

The development of a completely universal, transdisciplinary, transnational, and translingual VRE is an unsolved challenge. It involves extremely complex technical, legal, procedural and social issues and is, in fact, a challenge by far exceeding the resources and funding durations typically available. The proposal is highly innovative in addressing this problem by recognizing three key points:

- The Web itself, in the form of globally interconnected data and services, is the VRE of the future.

- The focus must be on establishing globally accepted points of collaboration to iteratively and in an agile development process gather sufficient resources, development and training actions to provide this VRE.

- The open knowledge community has already developed solutions and platforms for many of these issues, solutions which, with limited effort, can be brought to fruition for conventional professional research as well.

Using highly limited resources, the proposal will therefore focus on investigating and developing the functionality of Wikidata for professional scientific research. VREs built on top of existing, widely deployed and utilized platforms are rare, but this approach is essential for sustainability, trust and reaching a large community. The proposal most likely is asking for significantly less resources than many other VRE proposals in this call. In the light of the vision above, it understands itself as a pilot in a global, highly ambitious process of increasing integration, collaboration, and openness.

Professional scientists and researchers as well as citizen scientists (including "Citizen Data Scientist") will be able to use this environment. With the inclusion of Freebase into Wikidata (expected to be accomplished by the Open Knowledge community in 2015), the Wiki4R VRE will be capable of providing a unique service: for the first time, both citizen and professional scientists from any research or language community can integrate their databases into an open global structure. This point of integration can be used to publicly annotate, verify, criticise and improve the quality of available data, to define its limits, to contribute to the evolution of domain-specific ontologies, and to make all this available to everyone, without restrictions on use and reuse. One application will be the analysis of intersections of public (governmental) and research data. An example could be the combination of disease epidemiology data (by country, by year) with public sales data of products (e.g. drugs, food) or events (e.g. concerts, movies).

Open Science in itself is an ambitious new undertaking. The proposal is ground-breaking in combining the open collaboration methods developed in citizen-driven open knowledge initiatives with the new Open Science approaches developed in professional research on a common infrastructure.

Impact

Expected impacts

The research community is experiencing a massive growth in data, a trend that is expected to continue. On the one hand, this manifests itself in the form of large data volumes and data streams with high bit rate of relatively homogeneous data. Particle physics are a prime example where these aspects of big data are dominant. These are addressed through various EU funding actions (e.g., EUDAT). On the other hand, however, the trend also manifests itself in the form of “big complexity issues”. New research information refers to previous information, often across many different disciplines. Traditional isolated databases containing verbatim information in data fields, queryable through human-oriented search portals or custom APIs, do not fulfil today’s data analysis requirements. A first improvement is to disambiguate relations between items through stable, globally unique identifiers. However, this still requires the expert knowledge in which “data silos” related data will be found. The next step in managing the complexity of interrelated scientific, societal, and governmental information is therefore to use identifiers which inform machines how to reach related information. The most general model for this is called the “Semantic Web”.

Whereas funding with respect to high data volume and high speed processing issues is ample, it may be that insufficient attention is yet given to issues of high data complexity. With the present proposal we offer a step into this direction, addressing many issues of the future of scientific data analysis in the face of high data complexity. The proposal contributes to:

- Strengthening the European Expertise in Semantic Web issues,

- interlinking research data early on,

- developing Open Science collaboration expertise in general, and especially

- developing capacities in collaboration expertise between citizen data scientists

While the Semantic Web addresses many technical issues, it becomes truly effective only if data are semantically interlinked to provide context (the final star in Berners-Lee’s five star concept). Reaching this state at the necessary scale is a complex problem. An important answer is community data curation by an alliance professional scientists from multiple institutions as well as citizen data scientists. To achieve this, database maintainers must relinquish some control and become competent in smarter collaboration techniques.

Wikimedia projects are a prime example for crowdsourcing and massive collaboration per se, but they also have a track record of involvement in the curation of external scientific databases. Notable examples include the Gene Wiki project – which curates information about human genes on the English Wikipedia and now increasingly on Wikidata – and the Rfam/Pfam projects, which collaborate with the English Wikipedia in curating databases about RNA and protein families.

The project addresses collaboration opportunities both between professional organisations and between professional research and citizen science. An example may illustrate use cases for the first scenario:

Natural history museum collections across the world hold hundreds of millions of specimens, which are important for studying the diversity of organisms and their uses. The digitisation of theses specimens is a slow and expensive process (because of sheer numbers, diversity of objects and their preservation, hard to decipher handwritten labels, etc.). Knowing itineraries of collectors, routes and dates of expeditions can improve quality control and greatly simplifies expensive processes like geolocating specimens (essential for habitat modelling, nature conservation and climate change research). Having a transinstitutional repository of collector itineraries rather than each institution curating local data would greatly increase the efficiency of work. Existing systems like ORCID (for contemporary authors only) or VIAF do provide much information, but cannot provide enough coverage for the breadth of collectors relevant to museums. The Wiki4R VRE will provide links to ORCID and VIAF, but also support adding new persons as needed in a way, that local work can use the newly created semantic identifiers within seconds.

Highly relevant to the Wikidata argument is that the use case is in fact not limited to natural history collection. Many collectors collecting both natural and cultural history artefacts. Thus, the standard disciplinary approach to institute a shared conventional database across natural history institutions (e.g. in Europe through CETAF) is still not fully satisfying. Going even further, collections are also relevant as a historical record documenting global exploration (and often colonization) of the world and collaboration with historical researchers is highly desirable.

The Wiki4R VRE is ideally positioned for collaborative data curation across scientific disciplines, organisations, countries and languages, since it:

- is already well established and globally available to everyone,

- provides data in a structured format and in multiple languages,

- can be contributed to and corrected collaboratively, through humans and machines,

- can be linked with other ancillary data (e.g. current or historical geographical entities).

In order to realize this transdisciplinary integration potential, groundwork has to be laid by integrating domain-specific research data and scientific knowledge bases with existing Wikidata content. We will support the ontological mapping (WP2), establish tools and workflows for systematic integration (WP3) and analysis methods (WP4), and develop training materials. The effectiveness of the methods developed will be tested. Important pilot use cases are bibliographic metadata about scholarly publications and chemistry data (e.g. small molecules, biochemical pathways, enantiomers). Professional chemical researchers will closely collaborate with Wikidata’s WikiProject Chemistry – a community of volunteers with a shared interest in curating chemical information on Wikidata – and professional librarians with WikiProject Source MetaData, a community interested in metadata about references cited on Wikipedia. Similar WikiProjects exist for a steadily growing variety of topics and will be our general points of contact for ensuring interoperability of our cross-domain activities with domain-specific matters. For instance, WikiProject sum of all paintings collects information about paintings in museum collections around the globe, and lists of these paintings can easily be generated on the basis of Wikidata, e.g. for Kandinsky. Other WikiProjects on Wikidata deal with music, economics, geology, medicine, or tropical cyclones.

The proposed project – in its transdisciplinary character – has also excellent potential for gender research. Existing research examples include the Wikipedia Gender Inequality Index research project, studying questions like among all the gendered biographies, which Wikipedias have the highest percentages of articles about a given gender? As the semantic data becomes richer, it will become possible to study the interrelation between gender and cultural biases, or sexuality and ethnicity biases among Wikipedias. In fact, for any recorded property in Wikidata, we will be able to see how they are biased by language.

We aim to demonstrate that the increased uptake of Wikidata as a central part a Wiki4R VRE will accelerate the pace of scientific discovery. Technically, Wiki4R will improve reliable discovery, access and re-use of public data. Not all data will be in Wikidata itself; the vision is that Wikidata becomes a hub with linking concepts and essential information, with additional detail provided as the professional research organisations. Socially, the project will lead to more effective collaboration among professional researchers and between professional and citizen researchers. Open science has great potential to increase the efficiency and creativity of research. Furthermore, true collaboration with citizens, including discussions about research goals, will greatly strengthen discussions about responsible research and innovation. The project is dedicated to building bridges between user communities. With its breadth of scope and true transdisciplinarity, the project will have impact across disciplines and can become a paradigm for structuring open data for open science.

In many ways, Open Science is an equivalent to open borders. The economic success of the removal of taxation, legislation, and other cross-border obstacles to trade in the EU demonstrates how research can gain by mastering the necessary competencies to remove obstacles between research groups, disciplines and nationally organised research organisations. However, this vision urgently needs a foundation in platforms designed for openness, transparency and trust. Wikidata is the ideal candidate for achieving this, but critically needs enhancements to better interact with professional research.

Measures to maximise impact

Some measures to maximise impact have already previously been mentioned. Wikidata is a generic system designed for most types of structured semantic data from all scholarly knowledge domains and is available openly and widely. Of special importance is the focus on enabling re-use.

By default, any project involving Wikimedia communities or a Wikimedia movement organization will require that all project information, contents, data, and results are made available under an open license. Consequently, this project will be entirely based on open source software (Linux, Mediawiki, Wikibase, etc.) and all software developed in the consortium will be under open source licenses, maximizing the coalition that maintains the software beyond the duration of the project. Research data integrated into Wikidata likewise will be re-usable under open licenses. The project will treat data pursuant to the definition of Linked Open Data, a standard also adopted by the EU for its Open Data Portal (see also T4.1).

Reports or scientific publications resulting from the project will be published under the ‘gold model’ of open access, with additional archival in relevant repositories. The default license will be CC BY 4.0.

Of critical importance for the success and impact of the project is that the Wiki4R VRE becomes compatible with and integrated into the data workflows of professional scientific institutions. Interoperability will be tested with W3C unit tests (Task 4.1). Data can be created and updated both by humans and software/machine and are immediately available for further use or new semantic links (Task 4.2).

Dissemination and exploitation of results

The exploitation plan for the project is primarily based on the concept of openness. The value is generated as a result of lowering barriers to re-use and integration, similar to how the EU economy profits from lowering other barriers, such as tariffs, border controls, and or non-harmonized legislation. Openness is not altruism, but in many cases makes societal and economic sense.

A good example is open source software. This form of software is not the domain of hobbyist or universities alone. It makes economic sense because it allows major competitors in the IT industry to share the burden of most software development, while focusing their proprietary actions on a number of (non-open) additions.

Similarly, a global, transdomain, multilingual structured knowledge base, providing generic facts as well as research results, with open licensing and a powerful API is an excellent basis for entrepreneurial business developments. For example, Histropedia – a startup in the UK and an Associate partner – uses Wikidata statements as the basis for location- and time-aware services that allow users to create or view timelines on topics of their choice. Media from Wikimedia Commons and related Wikipedia articles are automatically added. The system will support combining external data with globally available Wikidata-based data, to create spatio-temporal visualisations for research, education, presentations or as parts of other software applications. Intended user are academic research, education (e.g. a chart of atmospheric CO2 and average temperatures plotted against a timeline of geological events), the tourist industry, and organisations that wish to visualise their own proprietary data sets in combination with publicly available data. Another example is MusicBrainz (a project of the associate partner MetaBrainz ) which already closely interconnects its own work with Wikidata and which is used e.g. by the BBC (UK).

Open data can in general be an excellent basis for business. An apt example is the recent decision to dissolve the Google Freebase system in favour of the broader Wikidata integration possibilities, with Google reasoning: “they’re growing fast, have an active community, and are better-suited to lead an open collaborative knowledge base.“ We agree with Google’s assessment and consider it important to build capacities on exploiting openness such that the European economy may benefit.

Similar benefits from openness can be expected in the area of education, especially the development of Open Educational Resources (OER) and courses. The benefit will not only the availability of data that are directly relevant to the curriculum. The availability of data sets of the scale and complexity present on the Wiki4R VRE will enable courses where real research can be performed based on inquiries generated by the students rather than the teacher.

Thus, the focus of the project is on dissemination, not knowledge protection. Data present in Wikidata can be either used directly or they can be downloaded and imported into mirror deployments. Management is not through access rights, but through accountability. All the data changes are tracked and patrolled by the contributors of Wikidata. Quality control is currently mainly manual, but one of the actions of the present proposal is to develop improved automated quality control systems. Both scientists and citizens can create unit tests to provide timely quality control (Task 4.1). The results can be used to alert community administrators as well as professional research organisations curating their data on Wikidata.

Sustainability

The sustainability of the Wiki4R VRE critically depends on the financial sustainability of Wikidata. Wikidata is a project of both the global Wikimedia Foundation and Wikimedia Deutschland (Germany). Since 2012, both organisations have invested significant amounts of their own funds, supported by third-party funding from, e.g., the Allen Institute for Artificial Intelligence, Google, the Gordon and Betty Moore Foundation, and Yandex. Wikidata has already become a core technology for the functioning of Wikipedia and is thus essential to the Wikimedia movement. It is supported by a number of additional stakeholders from the cultural, information technology and educational arenas who see its potential for making content and knowledge accessible. Wikidata’s business plan for financial sustainability includes a diversity of financial and human resources which are currently secured or under development:

- The invaluable human resources provided by the community of Wikidata online volunteer contributors and editors (currently over 14,000 individuals and growing fast)

- Base funding from the budget of the Wikimedia Foundation (which raised USD 37 million in online donations in the 2013-14 fiscal year)

- Base funding from the general operating budget of Wikimedia Deutschland (which raised USD 8 million in online donations)

- Funding through partnerships with corporate donors

- Funding from private foundations (WMDE fund development staff is currently working with a strong focus on fund acquisition from European-based foundations with an interest in free and open knowledge)

- Public educational and cultural institutions and entities of government, interested in partnering with WMDE and its broad community of online volunteers.

A direct monetization of Wikidata is neither intended nor possible, as it conflicts with core principles of the Wikimedia and Open Knowledge movements. It is, however, possible that at some point computationally expensive services may be provided at a charge or under dedicated funding by public or private partners depending on these services. In the framework of the current proposal, we consider a testing of such services to be premature.

Communication activities

As a basis for engaging with the target communities and beyond, all communications will be as open as possible, as is common practice in both Open Science and Wikimedia contexts.

That practice was already applied to the drafting process of this proposal itself, which was completely open from the initial blog post to the outline on Wikidata and from the actual drafting in public Google docs to the archiving of the final version under a Creative Commons Attribution license in a dedicated Zenodo community and a dedicated page on Wikidata for post-submission updates.

This was complemented by daily communication via a public mailing list, and a dedicated Twitter hashtag as well as contributions to the Wikidata mailing list and community newsletters related to Wikidata or cultural partnerships, in addition to public hangouts and the various communication channels on Wikidata itself.

One article about the project was viewed well over 6000 times prior to proposal submission, and another one offered “uncritical cheering” for the project. Judging from such feedback and from the contributions we received from the community during the drafting process, this multi-channel interactive approach is fruitful. We were further encouraged by public attention being directed to the issue of open grant proposals in response to one of our publications.

A final underpinning of our communication strategy is the awareness that Wikidata was conceived as a tool to help manage data for Wikipedia. While Wikidata has since grown more of an identity of its own, this aspect is still important, and it provides exposure at scale and in multiple languages.

On that basis, the detailed communication strategy varies depending on the characteristics of the respective communities:

Individual professional researchers will be reached through the networks of the project partners, through publications, mailing lists, training events and through conference attendance. We will also address the lack of professional recognition for community curation by exploring ways to couple it to formal publications. For example, the Gene Wiki Reviews couple the publication of review articles on specific genes with contributions to the corresponding articles on the English Wikipedia. This follows in the footsteps of the journals RNA Biology and PLOS Computational Biology, which have been coupling Wikipedia pages and review articles in a similar fashion. One of the MfN team members (DM) is involved in the PLOS initiative, and associate partner Pensoft (who is the publisher for several MfN journals) is interested in such an approach. While such initiatives have created a relatively low number of articles so far, they are widely known in the respective communities and can thus serve to raise community awareness of our activities.

Professional Research organisations: All project partners are involved in professional networks, which will be leveraged to reach out to professional organizations outside the project. For instance, MfN and WMDE are both members of the Leibniz research network Science 2.0, and UPM acts as the Spanish node of the DBpedia network.

Furthermore, we will leverage the growing network of interactions between the Wikimedia and research communities, which has so far been focused on Wikipedia, Wikimedia Commons and to some extent Wikisource, but is now extending towards Wikidata.

One particularly successful initiative in this framework is that of a Wikimedian in Residence, i.e. an active member of the Wikimedia community working inside an organization or institution on enhancing the interaction between the two. Such Wikimedians in Residence have been active in about 100 institutions around the globe over the last 5 years, and they form the nucleus of a very active community at the intersection between Wikimedia and the cultural sector, which increasingly extends into research-related organizations like the Swedish Agricultural University, Cancer Research UK, or ORCID. The Royal Society of Chemistry, specifically, is an Associate partner and will assist the project in engaging the chemical community.

These cultural partnerships have also led to technical developments, e.g. several Wikimedia chapters partnered with Europeana to create a toolset that facilitates the upload of images and other media from heritage collections to Wikimedia Commons with proper metadata.

Higher Education: The consortium has four universities as members (UPM, UM, UOC, UPS), three of which will collaborate to organize a MOOC (T5.4), and all partners are involved in the development of course materials (T5.3). The non-university partners also have strong ties to higher education, e.g. doctoral research being performed at MfN, Europeana materials being used in lectures, and OER conferences organized by WMDE. Furthermore, many users (both contributors and readers) of Wikidata are students or Early-Stage Researchers, and hundreds of courses are organized around the globe each year that have a Wikipedia component and that increasingly involves Wikidata as well.

Citizen Science projects: The European Citizen Science Association, the secretariat of which is hosted at the Museum für Naturkunde Berlin, has established important communication channels to a large number of citizen science projects in Europe. We will communicate through these channels (web site, newsletters, events and meetings).

The Wikidata community primarily communicates through the well-established and widely used Wikidata community portal. It facilitates introduction of new users and editors to the tools, rules and practices, enables users to submit requests and engage in topical on-wiki discussion. It further provides the platform for Wikiprojects, which are groups of editors working together to improve Wikidata. Wiki4R will utilize the existing channels of this platform to provide updates, resolve integration issues, and introduce new users from the science communities to the world of Wikidata.

Wikimedia communities in general: Wikidata is the fastest-growing Wikimedia project globally in terms of the increase in number of volunteer contributors. However, there are other Wikimedia communities – including Wikipedians, Wikimedia organizations, and contributors to cultural heritage projects – whose members have a vested interest in the development of Wikidata. Communication activities for these people and groups will use well-established channels as well, including a variety of Wikimedia blogs, online newspapers, Meta-Wiki pages and Twitter.

Policy and decision makers: Outreach and continuous communication to this group will utilize, among other channels, the joint Brussels office of the EU-based Wikimedia Chapters. Also known as the Free Knowledge Advocacy Group EU (FKAGEU), this Alliance of Open Knowledge advocates will be able to directly communicate policy-relevant project results, barriers, insights and relevant project outputs through its strong network of contacts with Members of the European Parliament and other key decision makers. Some of the main policy priorities of the FKAGEU are EU copyright reform, free and open access to public works (which includes scientific articles produced by publicly funded researchers) and freedom of panorama. All of these policy issues are of crucial importance to the goal of increasing the number of items and data sets that are available under open license and linked in Wikidata.

Implementation

WORK PLAN

Overall structure of the work plan

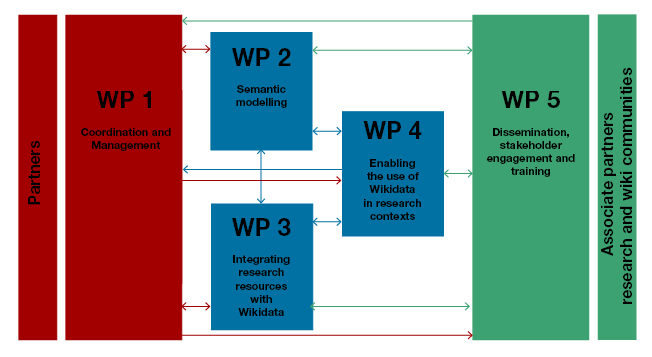

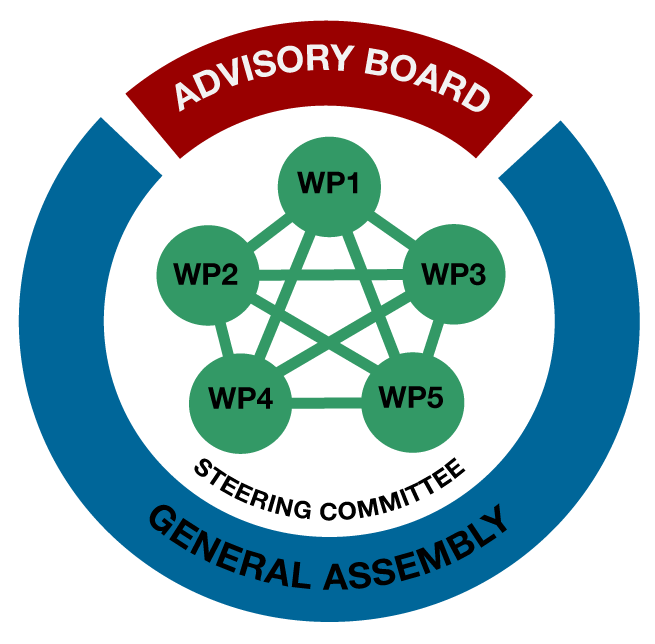

The five work packages (WPs) can be briefly characterized as follows:

WP 1: “Coordination and management” will provide the administrative support for effective project management and support the organisational structures and governance mechanisms for the efficient coordination of the Wiki4R consortium. It will facilitate communication and exchange of information between consortium partners, WPs, and organisational bodies, including an independent Advisory Board. Main outputs will be the implementation and updating all documents required for the full implementation of the projects, including the management of deliverables and all interim and final financial and scientific reports. Another main output will be a highly transparent and open communication, which provides new avenues for assessing and engaging with the research process and its outcomes.

WP 2: “Semantic modelling” will work on community-agreed profiles for the most relevant semantic concepts (core classes and core properties) in a selected number of scientific domains. Profiles include semantic mappings as well as associated policies and best practices that meet the functional requirements of the research use cases addressed in WP3 and WP4. Furthermore, this WP will match these classes and properties to ontologies used by the scholarly community to allow integration of Wikidata into research data, using the common ontology used by both. Main outputs will be property profiles required for the classes of data sets to be integrated in Wiki4R as well as for common classes needed for the research context. Policies and best practices will be documented and semantic mappings provided where each class and property will have at least one relation to external ontologies. Multiple mappings may be provided where required (especially if different use cases have different needs).

WP 3: “Integrating research resources with Wikidata” will demonstrate how research data can be integrated with Wikidata in the Wiki4R VRE. For this purpose, it will incorporate a selection of external research resources into the VRE, demonstrating pilot workflows for semantic integration, quality assurance mechanism, and bidirectional information flow. Attention will be given to the mechanisms in which Wiki4R can promote the open licencing of external resources that are yet unavailable for re-use. Main outputs will be workflows, best practices documentation, approaches to measure the quality of data, and foremost working examples that serve as paradigms and motivations for future applications. In addition, resources suitable for the future application of Wiki4R will be identified and the motivation and pitfalls around sharing data experienced in the pilots will be summarized in reports. This WP depends on WP2.

WP 4: “Enabling the use of Wikidata in research contexts” will integrate Wikidata into the daily workflows of professional scientists to create a full VRE. To address confidentiality issues of ongoing research projects, this WP will develop solutions to dynamically mirror the content of Wikidata and provide controlled information interchange using Semantic Web technologies. Scientists will be able to curate information in Wikidata using workflow-oriented dedicated editing interfaces that will interact with Wikidata via its APIs. The approaches will be tested in the laboratories of one of the project partners, using citizen science projects, and in a collaboration with Europeana. This WP depends on WP3.Main outputs will be a new interaction between Wikidata and a SPARQL endpoint for complex queries, and a ready-to-use virtual machine that allows hosting a Wikibase software instance compatible with Wikidata and Wiki4R in a protected institutional network. Further output will be solutions developed around the use cases to test the approach and reports on the effectiveness of Wikidata use in these use cases.

WP 5: “Dissemination, stakeholder engagement and training” will disseminate the outputs generated by the project to communities of stakeholders. It will practice openness through open communication to the extent that this is practical. In combination with the multiple, largely bidirectional communication channels outlined in the communication strategy, this allows to engage diverse communities more profoundly than traditional methods. Main outputs will be communication and engagement activities, including training activities directed at science students, professional researchers as well as members of the Wikidata community. The ultimate output will be capacities in the European Research Area in engaging in Open Science, using Wiki4R as a hub for research.

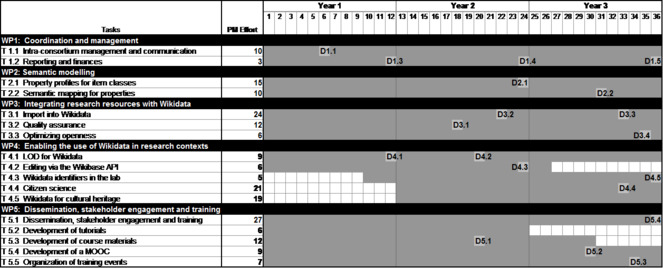

Timing of the different work packages and their components

Detailed work description

Work package 1 - Coordination and management (

WP1 - Coordination and management

|

Work package number |

1 |

Start Date or Starting Event |

M1 |

||||||

|

Work package title |

Coordination and management |

||||||||

|

Participant number |

1 |

2 |

3 |

4 |

5 |

|

|

||

|

Short name of participant |

MfN |

UPM |

UM |

WMDE |

UOC |

|

|

||

|

Person/months per participant: |

9 |

1 |

1 |

1 |

1 |

|

|

||

Objectives

The objectives of this work package are to oversee administration, operational management, and overall implementation of the project, including internal effective communication and collaboration between the coordinator, individual consortium members, and the European Commission (T1.1), to organize and administer consortium networking and governance, including supporting relevant bodies and meetings, including Advisory Board (AB), ensuring efficient and effective management and decision-making procedures (T1.1) and to implement sound financial management as well as controlling systems and quality assurance of deliverables and periodic and final Reports (T1.2).

Description of work

Task 1.1 Intra-consortium management and communication (Lead MfN; all partners; Month 1-36)

The core part of this task will be the organization and coordination of the consortium bodies and their meetings, particularly meetings of the Steering Committee (monthly remotely), and the General Assembly (once a year in person), as well to ensure for efficient and regular communication between the coordination, the consortium bodies, and all partners. In person meetings will be aligned with independent community meetings (such as the “Wikimania”, the yearly meeting of the Wikipedia/Wikimedia/Wikidata community). This task will further establish a high level scientific Advisory Board. The Advisory Board will help to monitor progress, and provide independent guidance and advice to the coordinator, Steering Committee and the entire consortium. Advisory Board members will be supported in their function by the project logistically and with limited secretarial functions. The coordination office will also provide for direct links between the Advisory Board and all consortium bodies, groups and partners as needed. Daily communication will primarily happen via electronic means, including email, Skype and the #wikidata channel on the irc.freenode.net network.

Task 1.2 Reporting and finances (Lead MfN; all partners; Month 1-36)

This task includes the preparation and coordination of the periodic scientific and financial project reports as requested by the EC, and of the Data Management Plan according to the H2020 Open Data Pilot. Further activities include the coordination of common administrative tasks, such as elaborating and providing templates for detailed planning and reporting of the tasks in each WP; providing formats for compiling and editing progress reports; monitoring progress through reaching milestones and deliverables; quality control and submission of products and deliverables. Financial monitoring will ensure a transparent financial distribution of the EC grant, including setting and monitoring payment schedules, and terms of reimbursement. We will also prepare financial controlling reports for the overall monitoring of the project.

Deliverables

D1.1 Data management plan (Month 06)

D1.2 Periodic report M12 (Month 12)

D1.3 Periodic report M24 (Month 24)

D1.4 Periodic and final report M36 (Month 36)

Work package 2 - Semantic modelling (

WP2 - Semantic modelling

|

Work package number |

2 |

Start Date or Starting Event |

M1 |

||||||

|

Work package title |

Semantic modelling |

||||||||

|

Participant number |

1 |

2 |

3 |

4 |

5 |

6 |

7 |

||

|

Short name of participant |

MfN |

UPM |

UM |

WMDE |

UOC |

EF |

UPS |

||

|

Person/months per participant: |

3 |

6 |

6 |

6 |

1 |

2 |

1 |

||

Objectives

The goal of this WP is to assist the Wikidata community in establishing and setting up a framework of classes and properties (by using the W3C Web Ontology Language, OWL ) to enable the support of the selected interdisciplinary VRE research fields (T2.1). Specifically, it develops profiles of classes and properties for concepts the project first focuses on, such, isotopes and their properties, like decay, research papers and properties, like its DOI, title, authors list (using the ORCID), and metabolites and other small molecules and properties like their chemical structure, boiling and melting point, and external database identifiers.The second task (T2.2) will focuses on the required mappings between Wikidata elements (i.e. classes and properties) and elements in external ontologies. Where needed, recommendations will be made to refine or add new classes and properties to allow easier or more accurate integration with research data. Besides actual mappings, also guidelines and procedures will be established that streamline the process of building and extending class and property vocabulary and of populating items with statements involving those properties. Here, the multilingual nature of Wikidata will be included. We will reuse community adopted ontologies and vocabularies, such as ChEBI and CHEMINF, and standards like the CIDOC Conceptual Reference Model used in cultural heritage documentation.

Description of work

Task 2.1: Recommendations for classes and their property profiles (Lead: UM; all partners; Month 1-36)

This tasks focuses on the development of VRE-specific property profiles for classes of Wikidata items for a number of disciplines. Profiles are defined as sets of properties and associated policies and best practices that meet the functional requirements of specific research use cases (WP3 and WP4). Profiles are not limiting the application of properties which are absent from the profile; rather they guide the interaction by reducing the mapping process to a manageable set of properties. Where possible, existing community efforts towards creating property profiles will be built on. For instance, generic lists already exist of properties available for creative works or periodicals, as well as draft recommendations for scholarly articles and chemicals. However, properties considered for profiles are not limited to properties that already exist on Wikidata; new ones will have to be introduced. An important part of this task is also to work with Wikidata development (partner WMDE) to increase the range of available data types (e.g. for supporting units or geo shapes ). Property profiles will be created for a significant number of classes that fall under the use cases outlined in the “Concept and approach” part of the Excellence section of the proposal. The focus will be on the 50-100 most widely used DBpedia classes as filtered by our use cases. Priority will be given to profiles required for data sets to be integrated in T3.1, including form project partners (e.g. minerals, meteorites and taxa in the case of MfN’s collections).