
Research Ideas and Outcomes :

Research Article


Corresponding author: Alex Hardisty (hardistyar@cardiff.ac.uk)

Received: 14 May 2020 | Published: 18 May 2020

© 2020 Alex Hardisty, Hannu Saarenmaa, Ana Casino, Mathias Dillen, Karsten Gödderz, Quentin Groom, Helen Hardy, Dimitris Koureas, Abraham Nieva de la Hidalga, Deborah Paul, Veljo Runnel, Xavier Vermeersch, Myriam van Walsum, Luc Willemse

This is an open access article distributed under the terms of the Creative Commons Attribution License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Citation:

Hardisty A, Saarenmaa H, Casino A, Dillen M, Gödderz K, Groom Q, Hardy H, Koureas D, Nieva de la Hidalga A, Paul DL, Runnel V, Vermeersch X, van Walsum M, Willemse L (2020) Conceptual design blueprint for the DiSSCo digitization infrastructure - DELIVERABLE D8.1. Research Ideas and Outcomes 6: e54280. https://doi.org/10.3897/rio.6.e54280


Abstract

DiSSCo, the Distributed System of Scientific Collections, is a pan-European Research Infrastructure (RI) mobilising, unifying bio- and geo-diversity information connected to the specimens held in natural science collections and delivering it to scientific communities and beyond. Bringing together 120 institutions across 21 countries and combining earlier investments in data interoperability practices with technological advancements in digitisation, cloud services and semantic linking, DiSSCo makes the data from natural science collections available as one virtual data cloud, connected with data emerging from new techniques and not already linked to specimens. These new data include DNA barcodes, whole genome sequences, proteomics and metabolomics data, chemical data, trait data, and imaging data (Computer-assisted Tomography (CT), Synchrotron, etc.), to name but a few; and will lead to a wide range of end-user services that begins with finding, accessing, using and improving data. DiSSCo will deliver the diagnostic information required for novel approaches and new services that will transform the landscape of what is possible in ways that are hard to imagine today.

With approximately 1.5 billion objects to be digitised, bringing natural science collections to the information age is expected to result in many tens of petabytes of new data over the next decades, used on average by 5,000 – 15,000 unique users every day. This requires new skills, clear policies and robust procedures and new technologies to create, work with and manage large digital datasets over their entire research data lifecycle, including their long-term storage and preservation and open access. Such processes and procedures must match and be derived from the latest thinking in open science and data management, realising the core principles of 'findable, accessible, interoperable and reusable' (FAIR).

Synthesised from results of the ICEDIG project ("Innovation and Consolidation for Large Scale Digitisation of Natural Heritage", EU Horizon 2020 grant agreement No. 777483) the DiSSCo Conceptual Design Blueprint covers the organisational arrangements, processes and practices, the architecture, tools and technologies, culture, skills and capacity building and governance and business model proposals for constructing the digitisation infrastructure of DiSSCo. In this context, the digitisation infrastructure of DiSSCo must be interpreted as that infrastructure (machinery, processing, procedures, personnel, organisation) offering Europe-wide capabilities for mass digitisation and digitisation-on-demand, and for the subsequent management (i.e., curation, publication, processing) and use of the resulting data. The blueprint constitutes the essential background needed to continue work to raise the overall maturity of the DiSSCo Programme across multiple dimensions (organisational, technical, scientific, data, financial) to achieve readiness to begin construction.

Today, collection digitisation efforts have reached most collection-holding institutions across Europe. Much of the leadership and many of the people involved in digitisation and working with digital collections wish to take steps forward and expand the efforts to benefit further from the already noticeable positive effects. The collective results of examining technical, financial, policy and governance aspects show the way forward to operating a large distributed initiative i.e., the Distributed System of Scientific Collections (DiSSCo) for natural science collections across Europe. Ample examples, opportunities and need for innovation and consolidation for large scale digitisation of natural heritage have been described. The blueprint makes one hundred and four (104) recommendations to be considered by other elements of the DiSSCo Programme of linked projects (i.e., SYNTHESYS+, COST MOBILISE, DiSSCo Prepare, and others to follow) and the DiSSCo Programme leadership as the journey towards organisational, technical, scientific, data and financial readiness continues.

Nevertheless, significant obstacles must be overcome as a matter of priority if DiSSCo is to move beyond its Design and Preparatory Phases during 2024. Specifically, these include:

Organisational:

- Strengthen common purpose by adopting a common framework for policy harmonisation and capacity enhancement across broad areas, especially in respect of digitisation strategy and prioritisation, digitisation processes and techniques, data and digital media publication and open access, protection of and access to sensitive data, and administration of access and benefit sharing.

- Pursue the joint ventures and other relationships necessary to the successful delivery of the DiSSCo mission, especially ventures with GBIF and other international and regional digitisation and data aggregation organisations, in the context of infrastructure policy frameworks, such as EOSC. Proceed with the explicit aim of avoiding divergences of approach in global natural science collections data management and research.

Technical:

- Adopt and enhance the DiSSCo Digital Specimen Architecture and, specifically as a matter of urgency, establish the persistent identifier scheme to be used by DiSSCo and (ideally) other comparable regional initiatives.

- Establish (software) engineering development and (infrastructure) operations team and direction essential to the delivery of services and functionalities expected from DiSSCo such that earnest engineering can lead to an early start of DiSSCo operations.

Scientific:

- Establish a common digital research agenda leveraging Digital (extended) Specimens as anchoring points for all specimen-associated and -derived information, demonstrating to research institutions and policy/decision-makers the new possibilities, opportunities and value of participating in the DiSSCo research infrastructure.

Data:

- Adopt the FAIR Digital Object Framework and the International Image Interoperability Framework as the low entropy means to achieving uniform access to rich data (image and non-image) that is findable, accessible, interoperable and reusable (FAIR).

- Develop and promote best practice approaches towards achieving the best digitisation results in terms of quality (best, according to agreed minimum information and other specifications), time (highest throughput, fast), and cost (lowest, minimal per specimen).

Financial:

- Broaden attractiveness (i.e., improve bankability) of DiSSCo as an infrastructure to invest in.

- Plan for finding ways to bridge the funding gap to avoid disruptions in the critical funding path that risk interrupting core operations, especially when the gap opens between the end of preparations and the beginning of implementation due to unresolved political difficulties.

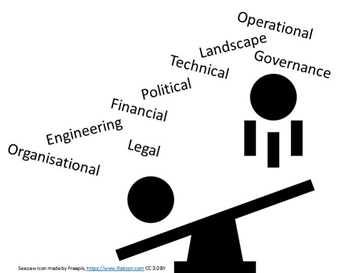

Strategically, it is vital to balance the multiple factors addressed by the blueprint against one another to achieve the desired goals of the DiSSCo programme. Decisions cannot be taken on one aspect alone without considering other aspects, and here the various governance structures of DiSSCo (General Assembly, advisory boards, and stakeholder forums) play a critical role over the coming years.

Keywords

DiSSCo, Distributed System of Scientific Collections, Design, Blueprint, ICEDIG, Deliverable

1. Introduction

1.1. Background

Strategically, it is vital to balance multiple factors – technical and engineering, organisational and political, financial and legal, and operational and governance – against one another to achieve the desired goals of the DiSSCo programme. Decisions cannot be taken on one aspect alone without considering other aspects.

DiSSCo, the Distributed System of Scientific Collections, is a pan-European Research Infrastructure (RI) mobilising, unifying and delivering bio- and geo-diversity information connected to the specimens held in natural science collections to scientific communities and beyond. Bringing together 120 institutions across 21 countries and combining earlier investments in data interoperability practices with technological advancements in digitization, cloud services and semantic linking, DiSSCo makes the data from natural science collections available as one virtual data cloud in association with a wide range of end-user services that begins with finding data, accessing data, using data and improving data. New services, made possible by the expanding variety and volume of data, coupled with open access to that data and new ways of connecting and manipulating it, will transform the landscape of what is possible in ways that are hard to imagine today. In addition to serving as the framework for interpreting and validating species data, DiSSCo connects historical collection data – traditionally connected using scientific species names as primary identifiers – with data emerging from new techniques and not already linked to species names. These new data include DNA barcodes, whole genome sequences, proteomics and metabolomics data, chemical data, trait data, and imaging data (Computer-assisted Tomography (CT), Synchrotron, etc.) to name but a few. DiSSCo will deliver the diagnostic information required for novel approaches and technologies for accelerated field identification of species, contributing to the development of datasets at adequate scale to support regular environmental monitoring, trend analysis and future prediction. 
The human discoverability and accessibility of the DiSSCo knowledge base will enable researchers across disciplines to tap into a previously inaccessible pool of quality assured data, while the machine readability will enable users to automatically digest these datasets into analytical workflows and tools.

With approximately 1.5 billion objects to be digitized, bringing natural science collections to the information age is expected to result in 90 petabytes of new data over the next decades, used on average by 5,000–15,000 unique users every day. This requires new skills, clear policies and robust procedures to create, work with and manage large digital datasets over their entire research data lifecycle, including their long-term storage and preservation and open access. Such processes and procedures must match and be derived from the latest thinking in open science and data management, as epitomised by the two quotations at the beginning of this section concerned with realising the core principles of 'findable, accessible, interoperable and reusable' (FAIR).
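As a rough sense of scale, the figures above imply an average data volume per digitized object. The sketch below is a back-of-envelope calculation only. It assumes decimal petabytes (10^15 bytes) and an even spread of the 90 petabytes across all 1.5 billion objects; neither assumption is stated in the blueprint, and real volumes will vary widely between, say, a label photograph and a CT scan.

```python
# Back-of-envelope estimate only; not a DiSSCo planning figure.
OBJECTS_TO_DIGITIZE = 1_500_000_000   # approximate object count, from the text
EXPECTED_PETABYTES = 90               # expected new data, from the text
BYTES_PER_PETABYTE = 10**15           # assumption: decimal (SI) petabytes


def average_megabytes_per_object(objects: int, petabytes: int) -> float:
    """Average data volume per object in megabytes (10**6 bytes)."""
    total_bytes = petabytes * BYTES_PER_PETABYTE
    return total_bytes / objects / 10**6


if __name__ == "__main__":
    mb = average_megabytes_per_object(OBJECTS_TO_DIGITIZE, EXPECTED_PETABYTES)
    print(f"about {mb:.0f} MB per object on average")
```

Under these assumptions the average works out to about 60 MB per object.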

The present document, the DiSSCo Conceptual Design Blueprint, is based on the results of the ICEDIG project work. It has been synthesised from the essential outcomes and conclusions of that project. Most (but not all) of these are reported in other deliverable documents. The present document constitutes the essential background needed by the DiSSCo Prepare project to carry out its work to raise the overall maturity of the DiSSCo Programme across multiple dimensions (organisational, technical, scientific, data, financial) to achieve readiness to begin construction.

1.2. Scope

The present document is a ‘blueprint’ report covering the technological design innovations, organisational consolidation, partnership, governance and business model proposals for constructing the digitization infrastructure of DiSSCo. In this context, the digitization infrastructure of DiSSCo must be interpreted as that infrastructure (machinery, processing, procedures, personnel, organisation) offering Europe-wide capabilities for mass digitization and digitization-on-demand, and for the subsequent management (i.e., curation, publication, processing) and use of the resulting data.

By mass digitization, we mean digitizing entire collections or their major distinct parts at industrial scale (i.e., millions of objects annually) and at low cost (e.g., < c. €0.50 per item), characterised by improved workflows and by technological and procedural frameworks based on automation (both hardware and software) and enrichment (link-building). This is critical within DiSSCo to mobilise the data from collections as rapidly as possible, so that these data can be more easily found and used, and can act as an anchor or ‘keyring’ for other data.

Alongside mass digitization, DiSSCo also recognises the need for digitization-on-demand; by which we mean processes, procedures and technologies that respond to and support a request for digital data about specimens that have not yet been through a mass digitization process or project-based digitization workflow. Such requests may sometimes be supported by prioritisation within a mass digitization workflow, or they can help institutions to refine and pilot new mass workflows. But such requests can also demand more data than the ‘normal workflow’ to meet specific needs of the users in each case or to deal with digitization of the most complex specimens and preservation types. Digitization-on-demand is a critical channel for digital access as envisaged for this research infrastructure, to allow truly global access to and use of collections. Mass digitization and digitization-on-demand are treated in detail in section 3.

1.3. Structure of the document

The present document emphasises that the considerations and recommendations (104) in the following sections (collated as a summary list for convenience in Appendix A), and the conclusions in section 7, are a balance of multiple factors in play (Fig.

Practically, this means focussing on the arrangements, processes and practices that need to be in place to achieve mass digitization, the architecture, tools and technologies to make digitization and management of the resulting data least-cost, and the culture, skills and capacity-building essential to operating in an efficient and sustainable manner. The present document is structured this way, and the recommendations coming from this practical focus contribute towards increasing overall implementation readiness.

1.4. Conventions in the present document

1.4.1. Key terms

The terms below are used with the meanings given:

Innovation: The alteration of what is established by the introduction of new elements or forms; a change made in the nature or fashion of anything; something newly introduced; a novel practice, method, etc.

Consolidation: The action of making solid, or of forming into a solid or compact mass (also figuratively); solidification; combination into a single body, or coherent whole; combination, unification.

DiSSCo Hub: The infrastructure of integrating services, information technology components (hardware and software), human resources, organisational activities, governance, financial and legal arrangements that collectively have the effect of unifying natural science collections through a holistic approach towards digitization of and access to the data bound up in those collections.

DiSSCo Facility: The geographically distributed collection-holding organisation(s) (i.e., natural science/history collection(s)) and related third-party organisations that deliver data and expertise to the DiSSCo Hub infrastructure, and which can be accessed by users via the DiSSCo Hub infrastructure.

A special kind of DiSSCo Facility could be a DiSSCo Centre of Excellence (DCE), specialised in one or more of researching, innovating, developing and operating/performing techniques and/or processes of digitization or other related facets, and disseminating information on same.

For the meaning of other terms and abbreviations, see the glossary (section 9).

1.4.2. Recommendations of the report

Main recommendations of the report are contained in double-bordered boxes, like so:

Recommendation N: The words of the recommendation.

Supplementary information not integral to the recommendations of the present report but of interest to the reader is contained in single-bordered boxes, like so:

Supplementary information. The words of the supplementary information.

1.4.3. References to other documents

References to other documents include references to ICEDIG project deliverable documents of the form “ICEDIG project deliverable Dx.y”. These are referred to via endnotes and are listed in full in section 8.

References to other documents are cited in the text in square brackets []. These are listed in the References section.

2. The DiSSCo research infrastructure

2.1. Rationale for a Distributed System of Scientific Collections

2.1.1. Digitizing natural science (biological, geological) collections

Digital transformation of society affects all areas of human activity, and science is at the forefront of this development: “Digital science means a radical transformation of the nature of science and innovation due to the integration of ICT in the research process and the internet culture of openness and sharing”. Emphasis on sharing the results and data of publicly funded research has led to the concept of “Open Science” [

Natural science collections are an integral part of the global natural and cultural capital. They include 2-3 billion animal, plant, fossil, rock, mineral, and meteorite specimens. The European collections account for 55% of the natural sciences collections globally and represent 80% of the world’s bio- and geo-diversity. Data derived from these collections underpin countless innovations, including tens of thousands of scholarly publications and official reports annually (used to support legislative and regulatory processes relating to health, food, security, sustainability and environmental change); inventions and products critical to our bio-economy; databases, maps and descriptions of scientific observations; instructional material for students, as well as educational material for the public. Natural science collections, which exist in all the world’s countries, are some of the oldest Research Infrastructures (RI). Their collections have always been open for all scientists and form the hard core of biodiversity science that studies the existence of life on earth and geoscience that studies the earth itself. Life sciences pioneered open access through establishing the GenBank in 1982. In 2001, the OECD Megascience Forum established the Global Biodiversity Information Facility (GBIF) to share biodiversity data freely and openly. Initially, GBIF focussed on digitization of biological collections, but is now an important source of any kind of primary biodiversity observation data.

Digitizing biological collections, which have been gathered for more than 250 years, is a gargantuan task. Europe’s collections are home to about one and a half billion specimens, of which about 10% have been digitally catalogued. For the remaining collections, only the physical objects exist, containing – besides the biological material itself – highly useful biodiversity data attached to each specimen on paper labels. Only about 1-2% of the objects have been imaged. User demand for the available data is high. For example, in 2016, Royal Botanic Gardens, Kew’s herbarium catalogue (which at that time had over 900,000 specimen records available) was visited over 125,000 times, with more than 960,000 page-views.

2.1.2. Accelerating beyond the current situation

The current situation hinders modern science, as contemporary research requires access to data digitally to address some of the biggest challenges of our time e.g., [

One part of the solution is already in place. The e-infrastructure for accessing biodiversity data already exists through GBIF, as well as through other related initiatives such as the Catalogue of Life (CoL), the Biodiversity Heritage Library (BHL), the Encyclopedia of Life (EOL), the International Barcode of Life (iBOL), and the Biodiversity Information Standards organisation (TDWG). These global organisations each tackle their own special data types and services. For instance, GBIF gives harmonised access to over 50,000 datasets with more than 1.3 billion biodiversity records.

2.1.3. Disconnected infrastructure

There is a serious disconnect between the e-infrastructure and the physical infrastructure. Museums do have keen interest in digitizing their collections [

2.1.4. Industrialising digitization

There is evidence that industrialising digitization of natural science collections is achievable [

Pioneered by the Herbarium of Muséum National d’histoire Naturelle (MNHN Paris), outsourced digitization activities have been undertaken in several innovative programmes, including those of Digitarium in Finland, Plantentuin Meise in Belgium, Naturalis in the Netherlands, and across France (ReColNat project) and Norway. Further major initiatives are being proposed, for instance in Germany, Sweden, and the UK, and similar approaches are also used in the USA for mass digitization, for example by the Smithsonian Museum of Natural History. Both in-house and outsourcing approaches at scale have now been implemented and tried across several projects.

Total spending in known European projects is in the range of €10-20 million annually. In the USA, the Advancing the Digitization of Biological Collections (ADBC) program receives $10 million annually from the National Science Foundation for nationally distributed digitization of non-federal collections. Collections in the USA write grant proposals to compete for these digitization funds. iDigBio receives about a fifth of those yearly funds to aggregate the digitized data in a central infrastructure. In addition, iDigBio works collaboratively with the worldwide community to build capacity for digitization and data use, foster research use of these data, develop enhanced education and outreach materials using collections data, and lead efforts to increase inclusivity in museum collections. The majority of these initiatives rely on major public investments. A problem is that they are funded only for the short term. Sustainability can only be achieved when the results are anchored in long-lived RIs, and the new ways of working are internalised by stakeholders. These initiatives will need a common infrastructure to effectively share their technologies, data, and experiences, and jointly build the most demanding functions. Once the task becomes tangible, this will further accelerate the process and bring in more resources from more countries. Furthermore, it is crucial to develop strong links between physical and virtual access programmes. Complementarity between physical and virtual access at European level enhances the overall capacity of researchers to discover and retrieve relevant information.

However, in most countries there are no systematic mass digitization programmes. There is a lack of funding, a lack of skills, workflows able to cope only with low throughput, and a lack of suitable ICT systems. This makes the unit cost of digitization too high for rapid mass digitization. The existence of a large research infrastructure that tackles digitization could change this. In other words, to be viable, digitization requires large volumes to become more affordable.

2.1.5. Understanding digitization

It is evident that there are many understandings of what is meant by digitization, beyond the fundamental definition of being the process of converting analog information about physical specimens to digital format (which includes electronic text, images and other representations). Does it mean creating a database record in a collection management system (CMS)? Does it mean producing one or more photographs of a specimen, and if so, with what characteristics? Does it include transcription of label data into database records? Does it include more than just making the ‘what, where, when and who’ of the gathering event available as digital data? Different digitization initiatives can (and typically do) have varying aims and scope, leading to quite different outcomes in the characteristics of the resulting data sets. This can lead to different uses and benefits and therefore different assessments of usefulness (fitness for purpose) and cost-effectiveness. Small scale, deep digitization can certainly be cost-effective (i.e., offer good value for money in relation to project aims), but it may not be affordable at scale.

Additionally, as digitization proceeds and more varied uses are made of digital specimen data, we are beginning to find out more about what makes such data most useful. This itself is likely to affect what we consider essential data to digitize over time.

2.1.6. International landscape and DiSSCo positioning

DiSSCo data management and stewardship will take place in the context of a wide and extraordinarily varied and complex landscape of data generation and capture, management and use initiatives that extend from the local practices of individual researchers/groups, through institutional and national activities to extensive European and other regional initiatives that collectively make up the global landscape of biodiversity, ecological, and geo-environmental informatics. The range of stakeholders is broad, with many missions and interests, sometimes overlapping. Nevertheless, at present (late 2019) there is hardly any coordinated management of digital specimen and collection data outside of the historical and traditional institutions in all countries charged with caring for collections of physical natural science specimens i.e., the natural science collection-holding institutions such as national and local museums.

The activities of the Global Biodiversity Information Facility (GBIF), whose main mission is the mobilisation of primary biodiversity data, are of great significance for DiSSCo. Here, DiSSCo eventually acts as the key stakeholder representing European collections that contribute primary occurrence data to GBIF based on specimens preserved in collections.

Regional initiatives taking place elsewhere in the world are also of significance. These include:

- iDigBio/ADBC program (endnote 6), funded by the USA's National Science Foundation, for advancing the digitization of North American biodiversity collections. Already underway for several years, this initiative is acting out a ten-year plan for digitizing, imaging and mobilising collections data. In the process, much experience and a wealth of technical knowledge, practice and procedures have been accumulated that are valuable for DiSSCo to draw upon as DiSSCo sets out its own digitization and data management plan objectives.

- National Specimen Information Infrastructure (NSII) of China (endnote 7), underway since 2003; and,

- Australia’s digitization of its national research collections (NRCA Digital) (endnote 8).

The importance of these initiatives extends beyond exploiting their experience and best practice. Global level alliances and coordination, especially global coordination around persistent identification of Digital Specimens and Digital Collections is essential and beneficial; for example, in working towards:

- A global biodiversity (Devictor and Bensaude-Vincent 2016);

- Fulfilling commitments and obligations towards the Nagoya Protocol on Access and Benefit Sharing;

- Implementing the ‘extended specimen’ concept described by [Webster 2017] and [Lendemer et al. 2019]; and,

- For managing ‘Next Generation Collections’ (Schindel and Cook 2018).

2.2. Innovations and consolidations identified by ICEDIG

Against the foregoing background, the general objective of the ICEDIG project has been to lay the groundwork for such an organisation, namely DiSSCo, firstly by identifying and recommending the technological innovations that will be needed to efficiently digitize one and a half billion collection objects (including subsequent management of their data) in a foreseeable time, such as the next 30 years; and secondly by identifying the organisational consolidations needed to perform this task. When this has been achieved, the natural science community will be a fully enabled player in digital society, and the most fundamental scientific data on the diversity of our planet will be freely and openly available for all.
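To make the scale of this objective concrete, the following back-of-envelope sketch derives the average annual throughput implied by digitizing one and a half billion objects in 30 years, and the annual spend implied by the indicative mass digitization cost of about €0.50 per item given in section 1.2. It assumes a constant digitization rate, a flat unit cost, and no allowance for objects already digitized. These are illustrative simplifications, not planning figures.

```python
# Illustrative arithmetic only; constant rate and flat unit cost are assumptions.
TOTAL_OBJECTS = 1_500_000_000   # collection objects to digitize (from the text)
HORIZON_YEARS = 30              # "the next 30 years" (from the text)
UNIT_COST_EUR = 0.50            # indicative cost per item (section 1.2)


def annual_throughput(total_objects: int, years: int) -> float:
    """Average number of objects to digitize per year at a constant rate."""
    return total_objects / years


def annual_cost_eur(total_objects: int, years: int, unit_cost: float) -> float:
    """Average yearly spend in euros if every object costs `unit_cost`."""
    return annual_throughput(total_objects, years) * unit_cost


if __name__ == "__main__":
    per_year = annual_throughput(TOTAL_OBJECTS, HORIZON_YEARS)
    cost = annual_cost_eur(TOTAL_OBJECTS, HORIZON_YEARS, UNIT_COST_EUR)
    print(f"{per_year:,.0f} objects per year, about EUR {cost:,.0f} per year")
```

Under these assumptions the result is 50 million objects and about €25 million per year, somewhat above the €10-20 million spent annually in known European projects (section 2.1.4).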

Table 1 – Table 4 below highlight the innovations and consolidations the various tasks of the ICEDIG project have identified and recommended in their detailed work in each of four areas of consideration. These areas are each considered in detail respectively in sections 3 – 6 below and are:

- Arrangements, processes and practices;

- Architecture, tools and technologies;

- Culture, skills and capacity building; and,

- Governance and business model.

In Tables

| Description of the recommended innovation or consolidation | I/C | A/W | ICEDIG WP | DiSSCo Prepare WPs | Comment |
| --- | --- | --- | --- | --- | --- |
| DiSSCo related Policy Analysis | C | A | 7 | | |
| Related research infrastructure landscape | C | A | 7 | | |
| Alignment of related research infrastructure | C | W | 7 | | |
| Procedures for developing standards and their review and adoption | I | W | 4 | | |
| Principles for engaging citizen scientists | C | W | 5 | | |
| Human transcription of specimen label data remains the most accurate way to obtain structured data for each specimen, though automated systems show potential in large volumes and for certain types of data | C | A | 4 | | |
| Collection preparation for digitization is an essential element in the digitization process, though it is easily overlooked and underestimated | C | A | 3 | | |
| Keep digitization processes as lean as possible. Accommodating all exceptions and other collection preservation tasks hinders the rate of digitization | C | A | 3 | | |
| Use imaging of labels to later enrich MIDS level from 0-1 to 2-3 and data validation | C | A | 3 | See table 2 for entomology case. | |
| DiSSCo should keep an overview of ongoing development and innovations in digitization workflows and technology (knowledge base) | I | W | 1/9 | | |
| Collection Digitization Dashboard (CDD) is an extremely useful tool for high-level decision-making processes. It facilitates catalyzing categories and different levels of granularity per user community | I | 2 | 8 | | |
| DiSSCo should develop a template to record cost and efficiency of digitization workflows to allow real comparisons | I | W | 8 | | |

| Innovation/consolidation | I/C | A/W | ICEDIG WP | DiSSCo Prepare WPs | Comment |
| --- | --- | --- | --- | --- | --- |
| Adopt Digital Object Architecture (DOA) as basis for DiSSCo data management | I | A | 6 | | |
| Nine characteristics to be protected throughout DiSSCo lifetime | C | A | 6 | | |
| Store annotations separately | I | A | 6 | Same principle can be applied to other object types/transactions of DiSSCo, such as ELViS transactions. | |
| Use Handles as persistent identifiers | I | W | 6 | There is still an open issue as to whether these should be NSId, IGSN or something else. | |
| Separate authoritative and supplementary information about a specimen | I | A | 6 | Notion that trusted/approved experts outside of owning institution can modify authoritative information. | |
| Root the definition of DiSSCo / openDS object model in Biological Collections Ontology (BCO) | C | W | 6 | Implies extending BCO | |
| Base the definition of DiSSCo / openDS object model on ABCD 3.0 with EFG, ensuring alignment and compatibility with DwC | C | W | 6 | Open issue is how to deal with alternate terms used by ABCD and DwC for the same concept. openDS specification should contain a mappings annex. Take note of ABCD/DwC convergence work proposed in TDWG. | |
| Support versions of Digital Specimen objects, with accompanying annotations and interpretations of verbatim data | I | W | 6 | | |
| Pipeline for automated text digitization and entity recognition | C | W | 4 | | |
| Review of automated georeferencing methods | C | W | 4 | 5 | |
| Recommendations for collection management systems | C | W | 4 | 6 | |
| Benchmark dataset of herbarium specimens | I | A | 4 | 5 | |
| Interoperability analysis of published specimen data | C | A | 4 | 5 | |
| Recommendations for improved standards for specimen data transcription | C | W | 4 | 5 | |
| Efficient semi-automated systems for entomology data and image capture | I | W | 3 | In development but need further improvement to digitize the bulk of NH specimens. See D3.5. | |

|

Innovation/consolidation |

I/C |

A/W |

ICEDIG WP |

DiSSCo Prepare WPs |

Comment |

|

Comparison of text digitization by humans |

I |

W |

4 |

||

|

Training in data management, such as in the FAIR principles, will help researchers make good decisions

|||||

|

Citizen scientists can play an important role in the creation of data about collections. |

C |

W |

2, 5 |

||

|

Functional Units approach towards skills to more flexibly manage them by reorganising the competencies in respect to the existing capacities per institution |

I |

W |

8 |

3 |

See MS48, MS49 |

|

Recurrent training is urgently needed throughout the entire data cycle and digitization process to allow effective implementation |

C |

8 |

2 |

2.3. Overall approach and direction

2.3.1. Digitization, Digital Specimens and Digital Collections

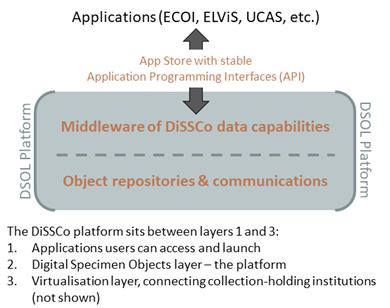

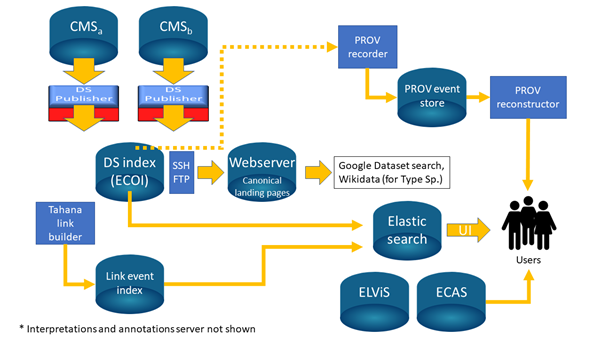

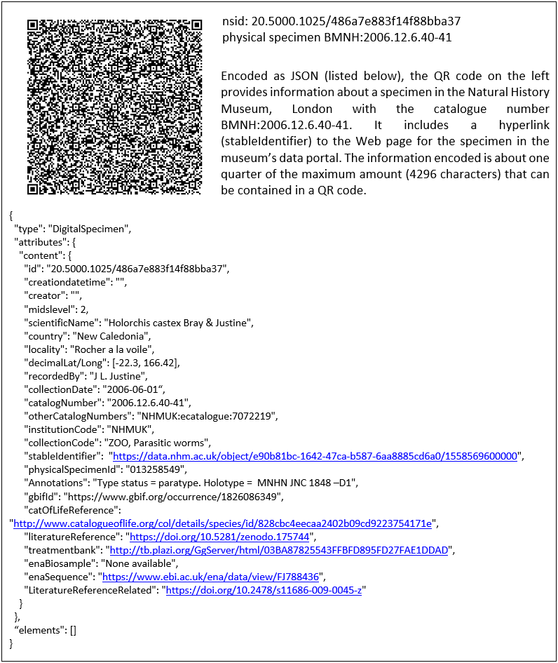

Digitization is the process of making data about physical objects digitally available, and the output of that process in the DiSSCo context is Digital Specimens and Digital Collections. Digital Specimens and Digital Collections are specific types of ‘digital objects’, which are the fundamental entities to be the subject of data management in DiSSCo. Each instance of a digital object collects and organizes core information about the physical things it represents. These identified objects are amenable to processing and to transport from one information system to another. A persistent link must be maintained from the Digital Specimen to the physical specimen it represents, and which acts as a voucher*

Each Digital Specimen or other digital object instance handled by the DiSSCo infrastructure must be unambiguously, universally and persistently identified by an identifier (Natural Science Identifier, NSId) assigned when the object is first created. Each DiSSCo Facility must be responsible for creating (minting) and managing their own NSIds in accordance with the DiSSCo policy for NSIds, and for registering their own Digital Specimens with the DiSSCo Hub infrastructure. Resolution of an NSId must always return the current version of an object’s content, as well as any interpretations and annotations associated with it.

Digital Specimen and Digital Collection objects are examples of the fundamental unit – a digital object – that is manipulated and managed by Digital Object Architecture (DOA), a powerful yet simple extension of the existing Internet. Such objects are treated as mutable objects with access control and object history (provenance) meaning they can be updated as new knowledge becomes available. Provenance data must be generated and preserved by operations acting upon DiSSCo data objects. Timestamped records of change allow reconstruction of a specific ‘version’ of a digital object at a date and time in the past.
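The idea that timestamped records of change allow a past version of a digital object to be reconstructed can be illustrated with a minimal sketch. It is not the DiSSCo or DOA implementation: the class names, the attribute names and the identifier format are all assumptions made for illustration, and a real system would also handle access control and persistent storage.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class Change:
    """One provenance record: who changed which attribute, to what, and when."""
    timestamp: datetime
    agent: str
    attribute: str
    value: Any


@dataclass
class DigitalObject:
    """Mutable object whose content is derived from its log of changes.

    The identifier stands in for an NSId; its format here is invented.
    """
    identifier: str
    changes: List[Change] = field(default_factory=list)

    def update(self, agent: str, attribute: str, value: Any, when: datetime) -> None:
        """Append a change record rather than overwriting, so history is kept."""
        if self.changes and when < self.changes[-1].timestamp:
            raise ValueError("changes must be recorded in chronological order")
        self.changes.append(Change(when, agent, attribute, value))

    def as_of(self, when: Optional[datetime] = None) -> Dict[str, Any]:
        """Replay the log up to `when`; with no argument, return current content."""
        state: Dict[str, Any] = {}
        for change in self.changes:
            if when is not None and change.timestamp > when:
                break
            state[change.attribute] = change.value
        return state


# Hypothetical specimen whose name is refined by a second agent.
specimen = DigitalObject("example-id-0001")
specimen.update("curator-a", "scientificName", "Bellis perennis", datetime(2019, 5, 1))
specimen.update("expert-b", "scientificName", "Bellis perennis L.", datetime(2020, 1, 15))

earlier = specimen.as_of(datetime(2019, 12, 31))  # content before the second change
latest = specimen.as_of()                         # current content
```

Deriving content from an append-only log is only one possible design. It keeps provenance and versioning in a single structure, at the cost of replaying the log on each read.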

Information about Digital Specimens and Digital Collections must be published and managed as part of the European Collection Objects Index (ECOI). Other services, such as Collection Digitization Dashboard (CDD), the European Loans and Visits System (ELViS) and the European Curation and Annotation System (ECAS) build on and work alongside the ECOI service to provide a portfolio of DiSSCo services.

Several characteristics, such as centrality, accuracy and authenticity of the Digital Specimen, protection of data, preservation of readability, traceability/provenance, and annotation history are essential for developing long-term community trust in DiSSCo. These are the protected characteristics of DiSSCo, which must be maintained throughout the DiSSCo lifetime. Thus, all design decisions (technical, procedural, organisational, etc.) must be assessed for their effect on the protected characteristics. Such decisions and changes must not destroy or lessen the protected characteristics.

2.3.2. The FAIR Guiding Principles

The FAIR Guiding Principles (Findable, Accessible, Interoperable, Reusable) for managing scientific data [

Whilst adhering to FAIR principles, DiSSCo data management principles aim to be technology agnostic to the greatest extent possible, expecting that over the DiSSCo lifetime specific data management and processing technologies can evolve and will be replaced. A framework for data management must accommodate this and one such framework is Digital Object Architecture (DOA) [

2.3.3. Minimum Information about a Digital Specimen (MIDS)

An important concern in digitization is how much detail to digitize from each physical specimen. While a photographic image can be made quickly, transcribing and interpreting all the details from labels, enriching the data with external information, and making specific measurements of the specimen take more time and resources. The idea of ‘Minimum Information about a Digital Specimen’ (MIDS) has been conceived to capture and structure this complexity. In the present document we repeatedly refer to the MIDS levels. Briefly, they are:

- MIDS-0: bare catalogue level information, unique identifiers, etc.; images if available;

- MIDS-1: basic information derived from the collection, such as a (higher) taxon and geography;

- MIDS-2: regular information of what (valid name), where, when, by whom, and how; as derived from specimen labels; and,

- MIDS-3: extended information, enriched with external sources, not directly available from labels.
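As an illustration of how the levels listed above might be applied to a record, the following sketch assigns the highest level whose information is present. Since the MIDS specification was still under development at the time of writing, the field names used here are hypothetical, and treating the levels as cumulative (each level also requiring all lower ones) is an assumption of this sketch.

```python
from enum import IntEnum
from typing import Mapping, Optional


class MIDSLevel(IntEnum):
    MIDS_0 = 0  # bare catalogue level information
    MIDS_1 = 1  # basic information derived from the collection
    MIDS_2 = 2  # regular information derived from specimen labels
    MIDS_3 = 3  # extended information enriched with external sources


# Hypothetical field names chosen for illustration; not taken from any MIDS specification.
REQUIRED_FIELDS = {
    MIDSLevel.MIDS_0: ("identifier",),
    MIDSLevel.MIDS_1: ("higher_taxon", "country"),
    MIDSLevel.MIDS_2: ("valid_name", "locality", "collection_date",
                       "collector", "collection_method"),
    MIDSLevel.MIDS_3: ("external_links",),
}


def mids_level(record: Mapping[str, object]) -> Optional[MIDSLevel]:
    """Return the highest level reached, or None if even MIDS-0 is not met.

    Assumes levels are cumulative: a missing or empty field at one level
    stops the record from reaching any higher level.
    """
    reached = None
    for level in MIDSLevel:  # iterates in order MIDS_0 .. MIDS_3
        if all(record.get(name) for name in REQUIRED_FIELDS[level]):
            reached = level
        else:
            break
    return reached


catalogue_only = {"identifier": "example-id-0001"}
with_basics = {"identifier": "example-id-0002", "higher_taxon": "Asteraceae", "country": "BE"}
```

Here `mids_level(catalogue_only)` evaluates to `MIDSLevel.MIDS_0` and `mids_level(with_basics)` to `MIDSLevel.MIDS_1`.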

At the time of writing (January 2020) the MIDS concept is still under development. Until the definitive specification and published article about MIDS are available, the reader is advised to refer to the discussion of the subject in the Open Access Implementation Guidelines for DiSSCo*

A related concept, Minimum Information about a Collection (MICS), which captures and structures an approach to describing collections, has also been proposed and is under study. The suggested MICS levels are:

- MICS-0: Overview information about a collection and the organisation holding it; and,

- MICS-1: Inventory information describing the collection in its entirety.

2.4. The provisional Data Management Plan for DiSSCo infrastructure

The provisional Data Management Plan for DiSSCo infrastructure (hereinafter, ‘the DMP’) [

The intention in the present document is not to reproduce/duplicate all the information, data management principles and requirements documented in the DMP. Readers are referred to the original document for that [

3. Arrangements, processes and practices

Presently (2020), the task of mobilizing data from natural science collections is still enormous: ninety percent (90%) of the collections still need to be mobilized. It is imperative for DiSSCo, as it is for all stakeholders and the community at large, to tackle the backlog as quickly as possible, balancing this with a continued focus on impact and work to understand what makes data most usable and impactful. Arrangements, processes and practices, including organisational consolidation and use of selective partnerships, must be initiated to digitize one and a half billion collection objects efficiently, in a foreseeable time and at reasonable cost, and to make this data publicly accessible. In addition, however, DiSSCo will need to recognise that digitization impact, the critical metric of success, depends on user needs; the aims of specific projects and research; and the research readiness of the data. Mass digitization workflows are still being developed and piloted for some collection types, and others may never be suitable for an ‘industrial’ approach (e.g., the very largest specimens). For these reasons, while focusing on mass digitization, DiSSCo will recognise and support the need for balanced portfolios of digitization activities that include digitization-on-demand capabilities driven and prioritised by researcher needs.

3.1. Role and development of a common digital research agenda

The need for greater alignment between digitization and biodiversity research agendas has been highlighted by both the Alliance for Biodiversity Knowledge (ABK) and the Global Biodiversity Informatics Outlooks (GBIO). ABK specifically names one of its ambitions as working “with other research communities and infrastructures to achieve interoperability with earth observations, social science data and other resources.” Stakeholders at GBIC2 called for better coordination mechanisms between research infrastructures and coordination between scientific communities with different but parallel expertise. A clear international digital research agenda for collections will be important in determining precise criteria for both mass digitization (3.9 below) and digitization-on-demand (3.9.3 below).

Recommendation 1: It is important to set a clear international digital research agenda that can serve as a guideline within DiSSCo to determine what to prioritize in terms of digitization of collections and specimens in more detail.

Coordination is required both internally within DiSSCo and externally with other research infrastructures. Research priorities and policies related to collections, ICT and data management vary widely across DiSSCo institutions and have a significant impact on how institutions can collaborate and facilitate a collective agenda. The first step in overcoming policy barriers that limit research alignment is identifying these internal policies and potential sticking points. ICEDIG task 7.2 took the first step towards documenting the existence and subject of policies across institutions (3.2 below). This lays the initial foundations for assessing where potential policy gaps, overlaps and barriers need to be anticipated and overcome in developing a common research agenda, and a policy framework that supports it, within DiSSCo institutions. This will be facilitated in part by a new DiSSCo central index service (marketplace) of expertise and facilities that lists the sources and availability of taxonomic and other scientific expertise, equipment and facilities across the DiSSCo collection-holding institutions.

External coordination between DiSSCo and other research infrastructures is also necessary for expanding the impact of bio/geodiversity research through collaboration with parallel areas of expertise such as environmental sciences, digital humanities, molecular biology and many others. A clear understanding of the existing RI landscape will also ensure that there is no duplication of effort and will provide the opportunity to take advantage of existing work and potential efficiencies for achieving biodiversity research objectives.

ICEDIG task 7.3 was a major first step in documenting existing research infrastructures and their potential contributions to the research priorities of DiSSCo. In collaboration with GBIF and the ABK, the development of a Research Infrastructure Database has been started, containing a list of 59 research infrastructures (RIs) like or adjacent to DiSSCo [

Recommendation 2: DiSSCo should encourage a global initiative, such as the Alliance for Biodiversity Knowledge or GBIF, to adopt and sustain the RI Database, ensuring that it is open, accessible and usable as a tool for identifying potential synergies and opportunities for collaboration with other research infrastructures.

3.2. Common policy elements

Multiple national and European policy and legal issues can be identified as essentially affecting the successful delivery of DiSSCo. By identifying broad policy areas relevant to digitizing natural science collections and the proposed activities of DiSSCo (Table

1. Access and Benefit Sharing (ABS)

2. Data and digital media publication

3. FAIR / Open Data / Open Access

4. Freedom of information (FOI)

5. Intellectual Property Rights (IPR)

6. Data Standards

7. Personal data

8. Protection of sensitive collections data

9. Public Sector Information

10. Responsible Research and Innovation (RRI)

11. Cloud services and storage

12. Information risk management

13. Information security

14. Collections access and information

15. Collections care, development and scope

16. Digitization strategy and prioritisation

Each topic in Table

In some cases, such as freedom of information, intellectual property rights, personal data, public sector information, etc., policy areas are covered by national or European legislation, providing for a base level of harmonisation across all institutions. However, there is considerable variation in practical implementation of these policies, with much detail not sufficiently described or left to local interpretation. In comparison to internal, institution-specific policies, externally mandated policy is often generic, technical and not well-tailored to collection-holding institutions. Critically, the ICEDIG survey has revealed major gaps in policy coverage across all institutions, with some relying largely on national legislation that has been locally interpreted but is arguably too abstracted from the details of practical implementation to allow comparison with other institutional policies.

Although policies might have been reported as existing, the amount of detail and formality represented by the available documentation varies widely. Furthermore, language barriers create significant potential for misinterpretation during the analysis, as policy documents were submitted in their original languages of Finnish, Estonian, English, French, German and Dutch. The remainder reported that their policies were either in progress, partially complete, developed external to the organisation, or were not in existence.

‘Collections access and information’ and ‘Collections care, development and scope’ have internal coverage in all institutions, which is likely to reflect that physical collections management policy is generally more mature than other elements of digital collections policy. Generic data and ICT policies such as ‘Information security’, ‘Personal data’ and ‘Intellectual property rights’ are also well represented; the legal and/or regulatory implication of these is likely to have been a driver for policy development in these areas. ‘Data standards’, as a more community-driven subject, appears to be less well embedded. Most institutions do not have a formal policy for ‘Cloud services and storage’ or ‘Public Sector Information’.

Another point to consider when reviewing policy coverage is the extent of policy that is followed with little or no internal documentation, i.e., with reliance on external documents. For example, if an institution is a member of CETAF there are policies they will be party to (such as those relating to Stable Identifiers and Passports) that are not specifically transcribed into local (institutional) policies. Similarly, when it comes to access and benefit sharing, institutions within the European Union are obliged to follow the Nagoya Protocol, but this may not be stated as a written policy and is in some areas (e.g., Digital Sequence Information) subject to significant variance in interpretation. Other examples include the GDPR and the CITES Convention.

Not all institutions are able to share their policies externally. Only one third of institutions stated that all their policy documentation submitted could be shared, whereas 60% of one institution’s documentation was not sharable. This is important to consider when creating common policy around digitization as DiSSCo moves toward a common research agenda.

In sum, the ICEDIG policy analysis faced significant challenges around collecting and interpreting policies from ICEDIG partner institutions. This was due to several factors, including difficulties in obtaining relevant policies, differing levels of policy making it harder to map policies to categories, and policy within different legal frameworks and language barriers that created the potential for misinterpretation. To address these challenges within DiSSCo, solutions should consider:

- Proposals on how to introduce and streamline relevant policy towards a common DiSSCo agenda;

- Establishing a knowledge base on how policies are organised within a collection-holding institution;

- Establishing where responsibility lies in ensuring the correct policies are in place and adhered to; and,

- Establishing who has authority over enabling policy change within collection-holding institutions.

Such actions must be framed in the context of developing a common policy framework that addresses the gaps in provision currently experienced across DiSSCo institutions, as well as supporting local variation in how these policies are implemented.

Recommendation 3: Actions on common policy elements across DiSSCo institutions must be taken in the context of a common framework of policy definition and implementation that recognises the organisation of policies and responsibility for implementation at local level, and authorization for change within collection-holding institutions.

3.3. Participation of citizen science

In natural science collection-based research, citizen scientists are most often engaged in transcribing specimen labels. The use of public, web-based transcription platforms, of which there are several available as open-source in the domain*

In general, there is no single best platform to recommend nor any need for DiSSCo to seek to build a single, universal DiSSCo volunteer platform. The community engaged around existing platforms or instances of platforms such as the DoeDat implementation of DigiVol by Meise Botanic Garden*

Integrating the diversity of platforms into digitization workflows is made possible by implementing a common data exchange protocol*

Recommendation 4: DiSSCo should exploit the diversity of available citizen science platforms (e.g., for specimen label transcription), taking advantage of their individual strengths (surrounding community interest, language specificity, etc.) as appropriate and should encourage such platforms to implement the data exchange format and protocol for transcription platforms (doi: 10.5281/zenodo.2598413), as well as supporting this format/protocol in digitization lines, workflows and collection management systems.

As noted above, a strength of existing transcription platforms is their surrounding community of volunteers engaged in collection transcription. Often though, the fact of citizen scientists’ involvement in data enrichment processes is hidden in the final versions of datasets. Giving proper credit to these people for their contribution is morally right and essential for motivating future contributions, as well as being ethically right for the curation and maintenance of collections (i.e., establishing provenance of data). Furthermore, integrating and exposing citizen science activity through DiSSCo dashboards could be a powerful incentive for increased mobilisation of volunteers in the future*

In the future, it is advisable to ensure that attribution details are preserved in transcription datasets, transferred to collection management systems and made public when such data is published. The Darwin Core and GBIF Metadata Profile standards allow volunteer involvement in data collection and enrichment to be described to some degree. A new recommendation for the representation of attribution metadata recently published by the Research Data Alliance*

Recommendation 5: The involvement of citizen scientists in DiSSCo data work and activities must be properly acknowledged and attributed, for example using Research Data Alliance recommendations for the representation of attribution metadata (doi: 10.15497/RDA00029).
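To illustrate what preserving attribution through to publication could look like in practice, the sketch below carries contributor details from a transcription into the published record. It does not implement the Research Data Alliance recommendation or the transcription exchange format; the class names, field names and role labels are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Contribution:
    """Who did what, and on which platform (all field names are assumptions)."""
    contributor: str  # name or identifier of the volunteer
    role: str         # e.g. "transcriber" or "validator"
    platform: str     # transcription platform where the work was done


@dataclass(frozen=True)
class Transcription:
    specimen_id: str
    fields: Dict[str, str]
    contributions: Tuple[Contribution, ...]


def to_published_record(transcription: Transcription) -> dict:
    """Build the published record, keeping attribution alongside the data."""
    return {
        "specimen_id": transcription.specimen_id,
        **transcription.fields,
        "attribution": [
            {"contributor": c.contributor, "role": c.role, "platform": c.platform}
            for c in transcription.contributions
        ],
    }


# Hypothetical transcription with two volunteer contributions.
example = Transcription(
    specimen_id="example-id-0001",
    fields={"collector": "A. Example", "locality": "Meise"},
    contributions=(
        Contribution("volunteer-17", "transcriber", "DoeDat"),
        Contribution("volunteer-42", "validator", "DoeDat"),
    ),
)
published = to_published_record(example)
```

Keeping the contributions as part of the transcription itself, rather than in a separate log, means they cannot be dropped silently when the data moves between systems.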

Properly engaging the public with collections and collection digitization requires a deep understanding of the relationships between collections, particularly digital collections; formal and informal education; museum-related citizen science; and the skills and knowledge that these can advance. This is a complex area that ICEDIG has only touched upon briefly*

Recommendation 6: Recognising the likely future increase in citizen science involvement with natural science collections, DiSSCo should further develop a package of business model principles and guidance that collection-holding institutions can use to design and manage citizen science engagements and activities around their collections.

3.4. Organisation and partnering choices

3.4.1. Types of partnering

Strictly speaking, the word 'alliance' should be used instead of partnering or partnership because it is the more accurate and wide-ranging term, conveying the idea of being united for a common purpose or for mutual benefit. Alliances match parties’ strength to strength and balance control with collaboration. They increase the capacity and capability of each of the involved parties without necessarily asking the parties to relinquish control from one to the other. From the perspective of DiSSCo needs (and discussed in each of the following three sub-sections 3.4.2 – 3.4.4) three main forms of alliance exist: customer-supplier relationships, strategic alliances and stakeholder investments.

Compared to these three kinds of alliance, true partnerships are much more about participation, pairing and merger of individual interests. Although they are concerned with collaboration, separate control is not retained. They are not relevant for DiSSCo at present.

3.4.2. Customer-supplier relationships

Customer-supplier relationships are relationships in which customers receive goods and services and suppliers receive monetary payments or other considerations. Such relationships are usually more tactical than strategic, although they often are important contributors towards some higher strategic goal. They can be straightforward and often involve a tendering process leading to contractual arrangements that usually include a service level agreement (SLA). Customer-supplier relationships can occur on many levels, from institutional through national and regional to pan-European. Choice is normally governed by the available suppliers having the correct solution to meet requirements at a value for money price. DiSSCo and its member institutions are subject to the requirements of EU legislation on public procurement*

DiSSCo has the need for many kinds of customer-supplier relationships, including the following:

- Gaining access to and exploiting vast amounts of storage capability in the form of third-party trusted repositories;

- Speeding up and cutting the cost of digitization processes and procedures; and,

- Operating a persistent identifier minting and resolution mechanism.

3.4.3. Joint ventures

Joint ventures, in which the parties/partners/beneficiaries commit resources to jointly pursue common goals, can be either operational or strategic, depending on their purpose. Normally they are the latter: they are essential to enhancing the value of each individual party, and they exist because the parties cannot achieve the desired goals on their own. Strategic joint ventures are normally long-term arrangements. When more than two parties contribute, this is a consortium.

Strategic alliances include development of interfaces that do not necessarily include provision of services or products from either side, but which can lead to mutual benefit for both parties. Strategic alliances can include technology, knowhow, and skills transfer. They are governed by bespoke agreements (MoU, consortium agreement, etc.) setting out the ambitions and obligations of each party in the context of the shared goals.

DiSSCo has the need for many kinds of joint venture, including the following:

- Creating bidirectional (resolvable) links between natural science specimens and DNA sequences;

- Creating bidirectional (resolvable) links between natural science specimens and relevant literature;

- Guaranteeing availability of Digital Specimen information for the next 100 years;

- Contributing to and receiving from the European Open Science Cloud;

- Achieving interoperability with cognate research infrastructures (biodiversity science, ecology, environmental sciences, social-economic, molecular, chemical, etc.) at both European and global levels.

3.4.4. Stakeholder investment

There is a third kind of alliance, stakeholder investment, whereby one party makes an investment in a second party with a view to, for example, ensuring the sustainability and longevity of a second party that is crucial to the first party's operations, or influencing the behaviour of the second party in a direction more favourable to the first party. The second party benefits by having its resources and future sustainability improved because of the investment. A topical example of this kind of alliance might be the situation whereby DiSSCo becomes a member of the International DOI Foundation or the DONA Foundation, or where investments are made for sustainable software development. This kind of alliance is considered further in the sub-section on shared liabilities (6.4.5 below).

3.4.5. Organising alliances

The landscape within which DiSSCo is positioned is complex, and its mission and needs encompass:

- Collections science;

- Related fields (applied research fields);

- Interdisciplinary fields; and,

- Decision making/policy informing/public engagement.

On top of this, the broader landscape encompasses other related facilities, resources and services used by the scientific community to conduct research such as instruments, archives or structures for scientific information, computer-assisted tools and ICT infrastructure, such as cloud computing environments and Internet communications.

The question then is: How to organise alliances that allow DiSSCo to operate – to deliver what is mandated to the best of its ability and to deliver that better through alliances than DiSSCo could do alone?

Several Roundtables*

- Collection Digitization Dashboard: see 3.10.5;

- Analogue 2 Digital: One of the most time-consuming steps within the digitization process, i.e. the extraction of label information and the different methods available to do so;

- Future of warehousing and use of robotics: see 4.6.6. Also, use of robotics in 3D scanning;

- Partnership Frameworks for Distributed Research Infrastructures, to share experiences, learn from more mature initiatives and identify possible best practices to follow;

- Museums specimen and molecular data linkage; and,

- Cultural Heritage Synergies, establishing digital needs and requirements of humanities researchers.

Seeking out complementary infrastructures, commercial organisations/industries and other initiatives, and identifying where joint efforts could exist; building on existing investments; and leveraging cross-infrastructure collaboration are all important work that must be continued within the overall DiSSCo programme.

Technological innovation, efficient deployment, and harmonized and collaborative infrastructure development will be critical. Potential innovators working in closely related fields such as optics, robotics, artificial intelligence, geo-localisation, imaging, lab instruments, data storage and many others should be further engaged as necessary, e.g., for joint development of collective technology and infrastructure that would require investment and competence beyond the capacity of a single RI, or to draw on innovative or already existing technical capabilities such as Artificial Intelligence, or connective infrastructure such as Access and Authentication Infrastructure.

Organising alliances must occur in the framework of the common digital research agenda (3.1) to enhance identification of optimal potential partners, for example where third party data services can potentially augment the DiSSCo offering and produce mutual research benefit e.g., ELIXIR life sciences services. Quantitative assessment and due diligence must be carried out on relevant activities, services or components of organisations and infrastructures that can inform decisions on alliance making as the DiSSCo blueprint is further developed.

Recommendation 7: DiSSCo should establish criteria and procedures for assessment and due diligence of activities, services and components of relevance to any potential alliance that keep in mind the common digital research agenda of DiSSCo.

Convergence of the ESFRI RIs in the common landscape is necessary and should be continued. The different states of the RIs and their timing do not help in this endeavour, as different facets, like needs and solutions, develop at different speeds. This also applies to the relationship between RIs and the e-infrastructure providers. Nevertheless, RIs will have to invest efforts to identify interfaces with common lines of production from which common services could then emerge. This needs commitment from other RIs (the new ESFRIs, established ESFRIs, and ERICs) to continue this dialogue. Such a dialogue began with Roundtable 5 and will be continued.

Recommendation 8: DiSSCo should continue dialogue with representatives of complementary research infrastructures to ensure convergence towards a common approach, especially in the context of the European Open Science Cloud.

Building better and more effective coordination mechanisms for international cooperation across natural science collections globally is essential to overcome the challenges of an increasingly crowded and complex landscape. Specifically, duplication of effort must be avoided but, much more importantly, divergence in approach to enabling global collections-based science must be avoided at all costs.

Recommendation 9: Working proactively with international partners, DiSSCo should aim to avoid divergence in technical approach to the support of global collections-based science.

3.4.6. Identified strategic opportunities for DiSSCo

When it comes to joint ventures, challenges arise from the distributed nature of the RI. DiSSCo will exist in a complicated landscape of: i) European and international obligations and initiatives (2.1.6); ii) legal constraints and regulatory implications (3.8); and iii) national interests. Each of these must be successfully navigated.

Alliances must be forged appropriately, along three main lines:

- Within DiSSCo with the national nodes;

- With other thematic players, i.e., other RIs and their technical and strategic interfaces, and global partners (e.g., via GBIF, iDigBio) that either serve or incorporate DiSSCo’s mission; and,

- With the foundational e-Infrastructure providers contributing to the European Open Science Cloud (EOSC).

DiSSCo already has several strategic opportunities available to it that must be further developed during the Preparatory Phase for mutual benefit during the construction and operation phases.

One big strategic advantage for DiSSCo is its fundamental alliance with CETAF, the Consortium of European Taxonomic Facilities.

Another advantage available to DiSSCo is the already well-established network of GBIF national nodes and GBIF itself as a global initiative with the goal to mobilise the world’s primary biodiversity data. Note however, that this also represents an area of overlapping interests and thus potential conflict.

Digitization initiatives around the world (see 2.1.6) such as ADBC/iDigBio, NRCA Digital and National Specimen Information Infrastructure, each with similar aims to DiSSCo, have much to offer in the way of mutual support and development in pursuit of global solutions to common aims for digitized and extended natural science collections.

Recommendation 10: DiSSCo should further clarify, develop and nurture the joint ventures (strategic alliances) that will be important to its plans and operations, including with CETAF, GBIF, iDigBio (or equivalent), EOSC, etc.

3.5. DiSSCo Centres of Excellence

A DiSSCo Centre of Excellence (DCE) is a designated DiSSCo Facility that specialises in one or more of researching, innovating, developing and operating/performing techniques and/or processes of digitization or other related facets, and disseminating information on the same.

There are several ways by which a DCE can be established, funded and governed, including, for example, by combining private sector technology, innovation and training with publicly funded digitization projects (i.e., private/public partnerships) involving the previously mentioned organisations such as Bioshare, Dinarda, and Picturae. (See also 3.9.5 below).

Thematic DCE (considered in detail in the [

3.6. Role of the private sector and options for public procurement

The private sector has a potential role to play in several areas of DiSSCo operations, being mainly product or service supply and (where appropriate) maintenance and/or training in the following areas:

- Specialist digitization equipment, such as scanners, cameras and other imaging technologies, conveyor machinery and other automation, including associated specialised software;

- Bespoke and/or outsourced digitization services (digitization factory); and,

- Data storage services.

There are opportunities, for example in automated text digitization (4.6.2), for partnerships with global companies such as Google.

A further possibility arises when commercial companies use and benefit from the ‘free’ data, agreeing perhaps to give funding in return. Among geological collections in the USA, this is an opportunity that is just beginning to be tapped.

According to the [

3.7. Open science provider partners

3.7.1. Generic data storage and computation services

Through the ICEDIG project, DiSSCo has worked closely with open providers of data storage and archival services at national level.

Recommendation 11: DiSSCo should exploit generic services for data storage and computation where possible and procure from service providers having the ambitions and aims of open science at the heart of their mission.

3.7.2. EOSC and the FAIR Digital Object Framework

DiSSCo has welcomed and fully endorsed*

Recommendation 12: DiSSCo must adopt the FAIR Digital Object Framework (FDOF) and its realisation through Digital Specimen, Digital Collection and other relevant digital object types as the basis for complying with the FAIR Guiding Principles for natural sciences data management, and as the means of delivering FAIR compliant natural sciences data into the European Open Science Cloud (EOSC).

Note: At the time of writing (January 2020), several institutions active in the DiSSCo programme planning are committing towards a proposal for a new EC-funded project under work programme item H2020‑INFRAEOSC-03-2020*

3.8. Legal and regulatory implications

3.8.1. Implications for establishment of research infrastructures

The legal establishment and financing of a supranational research infrastructure (RI) such as DiSSCo can be lengthy, complex and difficult, taking several years to implement. The applicable laws are: community law, the law of the Member State of the statutory seat, and the law of the Member States where operations are carried out. Several formalisms are possible and applicable at different stages of an RI’s lifetime.

3.8.1.1. Early stage arrangements

During the early stages of an RI’s life (such as for investigation, planning and organisation), it is possible to be adequately served by a simple consortium agreement, or an arrangement based on a Memorandum of Understanding (MoU) signed by a few institutions. Indeed, this is currently the case for DiSSCo, whereby stakeholders have committed via an MoU to support and contribute to the development of DiSSCo's mission and goals. MoUs are not legally binding but carry a degree of seriousness of intent and command mutual respect among the signatories. Often, MoUs are the first step towards a more permanent agreement with a legally binding basis.

An MoU acting as a framework for cooperation can be further strengthened when accompanied by a set of statutes. Such statutes can, to all intents and purposes, be nearly identical to those that would exist in conjunction with more formal arrangements (see below), and as such can help to provide a smooth transition when necessary.

In addition, specific funded projects during the early stages of DiSSCo’s life, such as ICEDIG, SYNTHESYS+ and DiSSCo Prepare, are normally organised based on formal consortium agreements between the beneficiaries, who are not necessarily all signatories to the MoU but nevertheless share some common interests and motivations.

3.8.1.2. More formal arrangements

On the other hand, creation of the final, long-term collaboration over many years expected of a supranational RI most likely requires a negotiated intergovernmental agreement. Being backed by governmental/ministerial decisions, negotiated agreements of this type have a significance and weight beyond other formalisms. Although these arrangements can take a long time to achieve, they can be stable and enduring once established.

To support such agreements in respect of European RIs, the European Commission, responding to requests from EU Member States and the scientific community, has proposed a community legal framework - a European Research Infrastructure Consortium (ERIC) - adapted to the needs of new European-level research facilities and of distributed infrastructures as well. An ERIC is an entity with a legal status recognised in all EU Member States. It meets the needs for recognition of the European identity on a non-economic basis, has a flexible internal structure to accommodate diverse types of infrastructures, and provides some privileges and exemptions (most notably, with respect to Value Added Tax (VAT)). Under certain conditions, an ERIC can also include non-EU partner countries.

Another approach is a public benefit foundation, established to carry out the mission of DiSSCo. However, with no members or shareholders, and no formal recognition in European law, such a foundation must be established in a single country. A foundation of this kind must be certain not only of a reliable long-term income stream (e.g., by contributions from its supporting organisations to carry out their mandated activities) but also of the ongoing, long-term support of its stakeholders. It is not always a reliable arrangement.

3.8.1.3. Importance of correct legal status

The choice of the correct legal status is also important from funding and commercial perspectives. A minimal solution, such as the MoU approach described above, permits achieving the scientific goals with the smallest possible legal, administrative and financial complications. Whilst administratively preferable, it could underestimate the need for an adequate legal foundation. For example, with MoUs and other forms that are not legally binding, a group of institutions cannot enter collectively into commercial contracts and other agreements with third parties (such as product or service suppliers). This must be done (and the risk assumed) by one of the institutions alone. Similarly, funding agencies often have formal eligibility requirements regarding the legal standing of entities that submit proposals or are the recipients of grants. Whether the recipient is a single institution acting on behalf of a consortium (as, for example, in European framework funding for research) or an entity that represents and is constituted by the institutions collectively has significant administrative, taxation, employment and financial regulatory implications.

Recommendation 13: DiSSCo should propose the legal form required to achieve its aims and objectives and to administer and support its operations, keeping in mind the need for long-term viability and stability, the need to be able to enter into legal agreements with third-parties, and the need to assume responsibility for and mitigate risks and liabilities.

3.8.2. Implications for data management practices

Many of the 16 policy areas studied by ICEDIG (see Table

Some examples of specific legislation applying directly to DiSSCo activities with implications on data management practices:

- International multilateral environmental agreements (conventions), for example:

- Convention on Biological Diversity (CBD), including the Nagoya Protocol on Access and Benefit Sharing (ABS);

- Convention on International Trade in Endangered Species of Wild Fauna and Flora (CITES);

- Convention on the Conservation of Migratory Species of Wild Animals (CMS);

- IUCN Red List of Threatened Species;

- EC Regulations and Directives on:

- General Data Protection Regulation (GDPR), Regulation (EU) 2016/679;

- Open Data and Public Sector Information (PSI), Directive (EU) 2019/1024 (replacing the former Public Sector Information Directive 2003/98/EC, as amended by Directive 2013/37/EU);

- Infrastructure for Spatial Information (INSPIRE), Directive 2007/2/EC;

- Conservation of natural habitats (Habitats), Directive 92/43/EEC;

- Conservation of wild birds (Birds), amended Directive 2009/147/EC;

- Invasive alien species (IAS), Regulation (EU) 1143/2014;

- Relevant national legislation.

Further study is needed to compile guidance on how DiSSCo and its member institutions should implement and demonstrate compliance with the legal requirements of such legislation.

In other areas, there is more discretion and flexibility. Legislation and regulations may not apply directly (Freedom of Information and Intellectual Property Rights are two example areas) but taking the appropriate legislation into account when designing data management practices and systems can make compliance much easier to achieve and police.

In both cases, it is sensible that DiSSCo and its member institutions adopt, as far as possible, common approaches to data management to comply with such requirements. This is a topic for the data management plan of DiSSCo that is marked for further study in the present provisional data management plan [

Recommendation 14: For each broad policy area affecting DiSSCo activities and directly covered by mandatory legal and regulatory considerations for data management, DiSSCo must list the legislation and regulations that apply at national and/or European level and say how DiSSCo and its member institutions will comply with each of the mandatory requirements (for example, by indicating specific clauses in the DiSSCo Data Management Plan). The broad policy areas are: i) Access and Benefit Sharing (ABS); ii) Data and digital media publication; iii) FAIR / Open Data / Open Access; iv) Freedom of information (FOI); v) Intellectual Property Rights (IPR); vi) Data Standards; vii) Personal data; viii) Protection of sensitive collections data; ix) Public Sector Information; x) Responsible Research and Innovation (RRI); xi) Cloud services and storage; xii) Information risk management; xiii) Information security; xiv) Collections access and information; xv) Collections care, development and scope; and xvi) Digitization strategy and prioritisation.

Recommendation 15: For each of the broad policy areas mentioned in recommendation 14 affecting DiSSCo activities and affected by legislation indirectly, DiSSCo should state what practices it will adopt to make compliance easier to achieve and police.
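As a purely illustrative sketch, and not part of the DiSSCo Data Management Plan, the listing called for in Recommendations 14 and 15 could be kept as a simple register that maps each of the 16 broad policy areas to the legislation that applies and to the place where compliance is described. The names `ComplianceEntry`, `areas_without_entries` and the clause text are hypothetical, introduced here only for illustration.

```python
from dataclasses import dataclass

# The 16 broad policy areas listed in Recommendation 14.
POLICY_AREAS = [
    "Access and Benefit Sharing (ABS)",
    "Data and digital media publication",
    "FAIR / Open Data / Open Access",
    "Freedom of information (FOI)",
    "Intellectual Property Rights (IPR)",
    "Data Standards",
    "Personal data",
    "Protection of sensitive collections data",
    "Public Sector Information",
    "Responsible Research and Innovation (RRI)",
    "Cloud services and storage",
    "Information risk management",
    "Information security",
    "Collections access and information",
    "Collections care, development and scope",
    "Digitization strategy and prioritisation",
]


@dataclass(frozen=True)
class ComplianceEntry:
    """One row of a hypothetical compliance register."""
    policy_area: str
    legislation: str
    applies_directly: bool  # True: mandatory (Rec. 14); False: indirect (Rec. 15)
    dmp_clause: str         # placeholder pointer into the Data Management Plan


def areas_without_entries(entries):
    """Return the policy areas that have no register entry yet."""
    covered = {entry.policy_area for entry in entries}
    return [area for area in POLICY_AREAS if area not in covered]


# Example register with a single entry; the clause reference is a placeholder.
register = [
    ComplianceEntry(
        policy_area="Personal data",
        legislation="General Data Protection Regulation, Regulation (EU) 2016/679",
        applies_directly=True,
        dmp_clause="(clause to be assigned)",
    ),
]

missing = areas_without_entries(register)
```

Keeping the directly and indirectly applicable cases in one register, distinguished by a flag, is one possible design choice; it makes it easy to see which policy areas still lack any stated compliance approach.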

Specific attention will need to be given to the topic of moving sensitive data about collections, specimens, personnel (e.g., collectors) and places across international borders in cases where DiSSCo collection-holding institutions are not located in Member States of the European Union. In such cases, there are additional considerations, such as whether the European Commission has determined that the country in question provides an adequate level of data protection.

Recommendation 16: DiSSCo must give specific attention (perhaps by implementing a ‘compliance and moderation service’) to the rules governing the movement of sensitive data across international borders, i.e., between European Union Member States and third countries (including defining specifically what is meant by ‘sensitive data’ in the context of the legislation affecting DiSSCo operations).
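The kind of routing decision that a ‘compliance and moderation service’ might make can be sketched as follows. This is a minimal illustration under stated assumptions, not a statement of the legal rules: the function `route_transfer`, the `Route` labels and the country codes are hypothetical, and both the definition of ‘sensitive data’ and the lists of countries are deliberately left to the caller.

```python
from enum import Enum


class Route(Enum):
    """Hypothetical outcomes of a cross-border transfer check."""
    STANDARD = "standard handling"
    ADEQUACY = "destination covered by an adequacy determination"
    REVIEW = "refer for compliance review"


def route_transfer(is_sensitive, destination, eu_member_states, adequacy_countries):
    """Decide how a proposed data transfer should be handled.

    is_sensitive       -- whether the data counts as 'sensitive' (defined elsewhere)
    destination        -- code of the receiving country
    eu_member_states   -- set of codes for EU Member States (supplied by caller)
    adequacy_countries -- set of codes for third countries determined to provide
                          an adequate level of data protection (supplied by caller)
    """
    if not is_sensitive:
        return Route.STANDARD
    if destination in eu_member_states:
        return Route.STANDARD
    if destination in adequacy_countries:
        return Route.ADEQUACY
    # Sensitive data bound for a third country without an adequacy determination.
    return Route.REVIEW


# Placeholder codes only; these do not refer to real countries.
EU = {"AA", "BB"}
ADEQUATE = {"CC"}

decision = route_transfer(True, "DD", EU, ADEQUATE)
```

Passing the country lists in as arguments, rather than hard-coding them, reflects that such determinations change over time and would need to be maintained by the service.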

3.8.3. Open research data

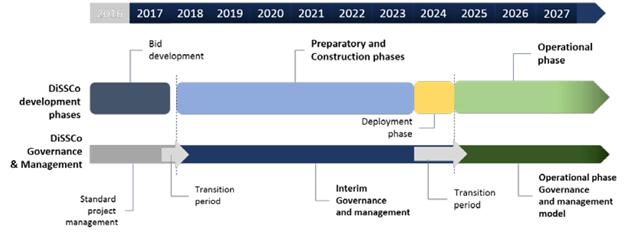

In 2012, the European Commission published a first recommendation, updated in 2018*