Research Ideas and Outcomes : PhD Thesis

Corresponding author: Mikel W Cole (mikel.w.cole@gmail.com)

Received: 28 Aug 2018 | Published: 29 Aug 2018

© 2018 Mikel Cole

This is an open access article distributed under the terms of the Creative Commons Attribution License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Citation: Cole MW (2018) Effectiveness of peer-mediated learning for English language learners: A meta-analysis. Research Ideas and Outcomes 4: e29375. https://doi.org/10.3897/rio.4.e29375

Abstract

Background

This manuscript reports the findings from a series of inter-related meta-analyses of the effectiveness of peer-mediated learning for English language learners (ELLs). Peer-mediated learning is a broad term that, as operationalized in this study, includes cooperative learning, collaborative learning, and peer tutoring. Drawing from research on interaction in second language acquisition, as well as from work informed by Vygotskian perspectives on socially-mediated second language learning, these meta-analyses synthesize the results of experimental and quasi-experimental studies.

New information

Included studies were conducted with language learners between the ages of 3 and 18 in order to facilitate comparisons to US students in K-12 educational settings. All participants were identified as ELLs, though learners in both English as a Second Language (ESL) and English as a Foreign Language (EFL) settings were included. Similarly, learners from a variety of language backgrounds were included in order to facilitate generalizations to the linguistic diversity present in schools in the US and abroad. Main effects analyses indicate that peer-mediated learning is effective at improving several outcome types, including language, academic, and social outcomes. Funnel plots and Egger’s regression analyses were conducted to examine the probability of publication bias, which appears unlikely in most analyses. Moderator analyses were also conducted, where sample sizes were sufficient, to examine which measured variables could explain heterogeneity in effect sizes between studies.

Introduction

This dissertation presents the results of a meta-analysis of the effectiveness of peer-mediated learning for English language learners (ELLs)*

Background

Currently, more than eleven million students in K-12 schools in the United States speak a language other than English at home, meaning that linguistically-diverse students now comprise more than 20% of the total school age population (

Not only is the population of ELLs rapidly growing and dispersing throughout US schools, ELLs are a remarkably heterogeneous group of students (

Statement of the Problem

School-level Silence: Sociopolitical Context and Program Models

ELLs are a linguistically diverse group of students, collectively speaking more than 400 languages (

This historical legacy of silence persists in contemporary examples of lost opportunities to learn and instances of the ongoing denial of students’ access to their own language and literacy practices (

Empirical evidence indicates that context influences student learning, and both the sociopolitical environment and the model of education provided to students contribute to ELLs’ academic success (

Perhaps the most widely-researched aspect of linguistic capital present in the effectiveness literature for ELLs is language of instruction (

Teacher-level Silence: Pedagogy, Preparation, and Dispositions

Current schooling practices continue to manifest messages of silence for linguistically-diverse students and teachers often reinforce these messages, creating classroom atmospheres like the following example where the teacher invokes a traditional “Initiate-Respond-Evaluate” discourse pattern that effectively stifles students: “I was struck by the silence when I entered the classroom. The teacher, positioned at the front of the traditionally organized room, began to speak. ‘Where’s the adjective in this sentence?’”(

Unfortunately, most teachers of ELLs remain largely unprepared to provide the specialized learning this growing and heterogeneous group of students requires (

Even in classrooms where talking and rich discussion are the norm, English learners are often silenced during class discussions because of inequitable distributions of power between students and teachers (

Student-level Silence: Positioning, Identity, and Resistance

ELLs are also positioned towards silence by distributions of power at the student level, distributions at once informed by sociopolitical factors in the local context and driven by the reorganization of social strata and identity formation at the student level (

Consequently, learners’ identities and motivations affect academic success in dynamic and complex ways; sometimes peer influences and individual aspirations drive learners to pursue school success, and sometimes peer networks and individual responses to power inequities lead learners to resist schooling (

In conclusion, it is worth reiterating the primary focus of the proposed study—to investigate the effectiveness of peer-mediated learning for improving language, academic, and social outcomes for ELLs. This framing of “the problem” is intended to show the multi-faceted ways that issues of power and inequity interact with learning for ELLs. However, it is not intended to advance a claim that interactive learning methods will solve all of the inequities that ELLs face. Cooperative learning alone is no panacea. Rather, it is the thesis of this statement of the problem that questions of educational effectiveness for ELLs demand attention to the ways that power and inequity interact with learning.

General Research Questions

Specifically, the meta-analysis reported in this dissertation seeks to answer the following two primary research questions. More specific questions and hypotheses are presented in Chapter 3, following the literature review in Chapter 2 that presents the case for examining specific variables of interest.

- Is peer-mediated instruction effective for promoting academic or language learning for English language learners in K-12 settings?

- What variables in instructional design, content area, setting, learners, or research design moderate the effectiveness of peer-mediated learning for English language learners?

Significance of the Study

The results of the proposed meta-analysis are intended to contribute to a growing literature on the effectiveness of specific instructional approaches for the fastest growing group of students in US schools, which contributes to an on-going discussion of equitable, high-quality instruction for ELLs. The results of the meta-analysis will offer a concise synthesis of multiple evaluation studies; specifically, standardized mean effect size estimates for language, academic, and attitudinal outcomes will provide systematic evidence of the effectiveness of peer-mediated instruction in key sets of learning outcomes for ELLs. Additionally, meta-analysis enables a systematic analysis of moderating factors that are important to consider when interpreting current and future evidence and when considering instructional decisions that might arise during implementation of peer-mediated learning in actual classroom contexts. As discussed in the Methods section, inclusion of studies conducted within the US and in other countries enables results to be broadly generalizable while allowing for analysis of the contribution of context as a moderator of effectiveness (i.e., are results produced in English-as-a-Foreign-Language and English-as-a-Second-Language settings significantly different?).
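To make the notion of a standardized mean effect size concrete, the following minimal sketch computes one from group summary statistics. It assumes the Hedges' g form (a standardized mean difference with a small-sample correction); this section does not state which estimator was actually used, and the function name, argument names, and example values are hypothetical.

```python
import math


def hedges_g(mean_t, mean_c, sd_t, sd_c, n_t, n_c):
    """Return (g, variance) for a treatment-versus-control contrast.

    Assumption: Hedges' g is used as the standardized mean difference;
    the source text only says "standardized mean effect size".
    """
    df = n_t + n_c - 2
    # Pooled standard deviation across the two groups.
    sd_pooled = math.sqrt(((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / df)
    d = (mean_t - mean_c) / sd_pooled
    # Approximate small-sample correction factor.
    j = 1 - 3 / (4 * df - 1)
    # Approximate sampling variance of d, rescaled for g.
    var_d = (n_t + n_c) / (n_t * n_c) + d**2 / (2 * (n_t + n_c))
    return j * d, j**2 * var_d


# Hypothetical study: peer-mediated group vs. teacher-centered control.
g, var_g = hedges_g(mean_t=10, mean_c=8, sd_t=2, sd_c=2, n_t=20, n_c=20)
print(round(g, 3), round(var_g, 3))
```

A positive value indicates that the peer-mediated group outperformed the comparison group; the variance is what allows studies to be weighted when they are combined.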

Literature Review

Peer-mediated Learning

As indicated, the purpose of this meta-analysis is to synthesize the empirical literature on the effectiveness of peer-mediated learning for English language learners in K-12 settings; specifically, the meta-analysis computes main effects and identifies important mediators of effectiveness using experimental and quasi-experimental studies. Thus, the most relevant literature to review consists of previous meta-analyses and quantitative syntheses of peer-mediation; however, important qualitative studies, especially highly-cited reviews and syntheses, are included to ensure that relevant theoretical, instructional, and empirical variables are not overlooked by focusing exclusively on experimental designs in the literature review.

What is Peer-mediated Learning?

In this paper, “peer-mediated learning” refers to an instructional approach that emphasizes student-student peer interaction, and it is intended to provide a contrast to teacher-centered or individualistic approaches to learning. In practice, peer-mediated learning includes a variety of approaches, each with supporting literatures that are typically distinct from one another. Specifically, this meta-analysis synthesizes three distinct varieties of peer-mediated learning: cooperative, collaborative, and peer tutoring, a distinction employed in previous syntheses (e.g.,

The use of “peer-mediated” as a term to include multiple varieties of instruction not only emphasizes the similarities amongst these methods, it also reflects an underlying bias in this paper. The author currently sees a sociocognitive reading of Vygotskian theory as a conceptual common ground between traditional second language acquisition models of L2 learner interaction and sociocultural models of L2 learner interaction, and Vygotskian perspectives on learning and cognitive development would describe all three approaches (i.e., cooperative, collaborative, and peer tutoring) as peer-mediated learning (see for example,

Thus, the treatment of several varieties of peer-mediated learning as similar does not imply that they are identical; rather, the intention is to focus on what they have in common, especially when compared to teacher-driven or individualistic approaches. However, for the sake of clarity and to maintain an awareness of how the varieties do differ in meaningful ways, each of the three focal varieties of peer-mediated learning is briefly reviewed separately below.

Cooperative learning

Cooperative learning represents what

A commonly definitive characteristic of cooperative learning approaches is the degree of structure (

The description of Jigsaw above highlights another important component that defines cooperative methods—interdependence. The concept of interdependence is closely tied to group goals, and is intended to measure the extent to which individual members rely on each other for success. Several versions of cooperative learning suggest that students are motivated to participate in cooperative tasks because the group shares a common goal; however, researchers argue that commonly shared group goals are insufficient alone (e.g.,

Collaborative Learning

A number of reviews treat cooperative and collaborative methods as if they are similar, if not identical, methods (e.g.,

Peer Tutoring

While cooperative and collaborative methods dominate the field of peer-mediated learning approaches, it is important to recognize that there is considerable diversity of approaches within the field. Inclusion of peer tutoring approaches is intended to illustrate this diversity, while acknowledging that other peer-mediated approaches exist. Peer tutoring approaches also vary widely (see

How Does Peer-mediation Promote Learning?

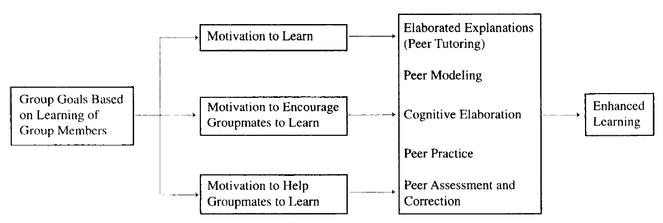

Thus, according to his model, group interdependence is a necessary component of enhanced learning through cooperation. Group interdependence is mediated by a number of motivational factors that contribute to several specific components of peer-mediated learning, including: elaborated explanations, peer modeling, and peer assessment and correction. It seems clear from the literature base of individual studies from which Slavin draws that not all of the individual components in the third box need be present for peer-mediated learning to be effective; rather, group interdependence fosters motivation which enables some of the individual components to occur. Slavin even acknowledges that limited evidence suggests that group interdependence need not always be present, but he argues that it is easiest to make cooperative methods effective when interdependence is present (

In addition to including aspects of power and equity,

Empirical Evidence for Peer-mediated Learning

Both quantitative and qualitative evidence support the claim that peer-mediated learning is effective at promoting numerous kinds of outcomes; while the qualitative syntheses, with some exceptions like Slavin’s narrative, “best-evidence” reviews (

Qualitative Evidence

In another best evidence synthesis of qualitative and quantitative studies,

Like many of the quantitative syntheses discussed below,

Finally, another synthesis of cooperative learning explores the literature on the effectiveness of cooperative methods with Asian students in preschool to college settings (

Quantitative Evidence

Unlike the theoretically-oriented syntheses presented above, the following quantitative reports offer more methodologically-focused syntheses that compare various models of cooperative learning to one another (

More recently,

Interestingly,

One approach to determining the important components of an intervention is to systematically examine the contribution of important variables over the course of many separate replications (i.e., a meta-analysis); nonetheless, a more fine-grained approach is to design a study that explicitly tests various components individually and/or in multiple combinations, and

Finally, two meta-analyses examine the impact of peer-mediated methods for particular groups of students.

In conclusion, considerable qualitative and quantitative research supports the assertion that peer-mediated methods of instruction are more effective at promoting multiple kinds of outcomes than individualistic or competitive approaches. Despite decades of consistently positive research, a number of variables of instructional structure (e.g., size of group and composition of groups) and social interaction, as well as important learner (e.g., age) and methodological (e.g., design and measurement) variables, remain important foci of current and future research. In particular, few syntheses of the effectiveness of peer-mediation for particular kinds of students exist, and none of the syntheses discussed so far even mention specific issues involving linguistically diverse students. Thus, questions of whether, why, and under what conditions peer-mediation is effective for English language learners are the focus of the remainder of this literature review.

Peer-mediated Learning and ELLs

While much of the research regarding the effectiveness of cooperative learning reviewed so far is relevant for English language learners, it is important to keep in mind that English language learners are a distinct group of learners who, by definition, must master both academic and language objectives. Thus, when considering ELLs, it is essential to consider whether peer-mediated methods are effective for both academic and language outcomes, and as noted, language outcomes are largely ignored in the studies already reviewed. Moreover, it is essential to understand whether there are important linguistic mechanisms engaged during peer-mediated learning that are conceptually distinct from the more psychological and sociological mechanisms of peer-mediated methods just discussed in order to consider the relevant instructional and theoretical foci for L2 research.

Academic Rationale for Peer-mediated Learning with ELLs

Several recent syntheses of effective instruction for English language learners suggest that cooperative and collaborative models of instruction could be effective for promoting language, literacy, and content-area learning for ELLs (

For example, the National Literacy Panel on Language-minority Youth and Children (

Two other high-profile reviews (

Investigating effective instructional approaches for ELLs in elementary and middle grades,

No synthesis of the effectiveness of peer-mediated methods at improving academic outcomes for ELLs was identified in the review of extant literature for this meta-analysis, which is a strong warrant for the pursuit of this particular study. Consequently, only high-visibility, individual studies exist to document the academic rationale for using peer-mediated methods with ELLs. What Works Clearinghouse (WWC) reports results for only the most methodologically-rigorous studies, and taken as a whole, the inclusion criteria and analyses make the WWC site something like a quantitative synthesis of research; granted, WWC does not employ meta-analysis or any other formally-synthetic method to make claims across the included studies, so the actual reports are not truly syntheses. For ELLs, What Works Clearinghouse reports separately for the following outcomes: reading/writing, mathematics, and English language development. Of the studies included for reading and writing, only three use peer-mediated methods extensively, and all three demonstrate effectiveness at promoting literacy outcomes for ELLs. Two of the peer-mediated literacy interventions are complex models of which peer-mediated learning is one of multiple components (i.e., Success for All and Bilingual Cooperative Integrated Reading and Composition), and only one of the interventions focuses exclusively on the effectiveness of peer-mediation (Peer-assisted Learning Strategies, or PALS). WWC does not report any interventions for ELLs with math/science outcomes that meet its standards for inclusion, and language outcomes are discussed in the following section that presents the linguistic rationale for using peer-mediation with ELLs.

A closer look at the full reports of the three included interventions with literacy outcomes reveals that a number of important instructional variables differ across these interventions. For example, the most effective of the three interventions is BCIRC, and the WWC report is based almost entirely on

Like BCIRC, Peer-assisted Learning Strategies (PALS) was evaluated for use in upper elementary ELL classrooms, and like BCIRC, only one evaluation study of the intervention meets WWC standards (

Linguistic Rationale for Peer-mediated Learning with ELLs

While no formal synthesis of the effectiveness of peer-mediation at promoting academic outcomes for ELLs exists, several theoretical, qualitative, and quantitative syntheses of the effectiveness of peer-mediated learning at promoting language outcomes for ELLs exist. Thus, there is a considerably stronger rationale for using peer-mediation to promote language learning for ELLs than for promoting academic outcomes, and this is a key assertion because it is precisely English language proficiency that defines this group of students. Thus, peer-mediated learning offers promise not only as an effective approach for promoting the academic success of ELLs, it may also be an important tool for removing the fundamental barrier to equal access to the mainstream school curriculum the term ELL is intended to identify: English language proficiency*

Whereas cooperation is high-structure and collaboration is low-structure in her scheme, she finds that interaction studies vary widely on this variable. Importantly, Oxford identifies a number of additional variables that influence the effectiveness of interactive approaches, including learner variables (i.e., willingness to communicate and learning styles) and grouping dynamics (i.e., group cultures and physical arrangement of the classroom).

In a narrative review of both qualitative and quantitative empirical research,

In particular, the findings reported in

Two recent meta-analyses of the effectiveness of interaction at promoting L2 learning outcomes offer additional warrant for using peer-mediated learning methods with ELLs; and in addition to providing overall estimates of the effectiveness of peer-mediated L2 learning, they provide considerable insight into important factors that mediate effectiveness. The first of the two meta-analyses (

These syntheses provide compelling evidence that peer-mediated methods are effective at promoting a wide variety of language outcomes for second language learners, though many issues raised in the L1 research remain largely unanswered in the L2 literature. For instance, ELLs are a highly heterogeneous population (i.e., language background, prior schooling, SES, race/ethnicity, age of arrival, and length of residence), but there is little research that discusses with which ELLs peer-mediated methods might be most effective, though both

Type of task matters in both the theoretical and empirical L1 and L2 literatures reviewed so far, but neither the qualitative nor the quantitative literatures offer much feedback about which kinds of tasks are best for which types of language or academic outcomes for ELLs. Importantly,

Summary and Unanswered questions

Peer-mediated methods have consistently proven effective at promoting academic, social, and language outcomes with a wide variety of first- and second-language students in a wide variety of contexts, lending support to

Summary of key variables from literature review.

| VARIABLE | L1 Research | L2 Research |
| --- | --- | --- |
| Peer-mediated Method Matters | | |
| Peer-mediated Method does not Matter | | |
| High-structure is Best | | |
| Low-structure is Best | | |
| Interdependence is Needed | | |
| Interdependence is not Needed | | |
| Content Area Matters | | |
| Content Area does not Matter | | |
| Age of Students is Important | | |
| Age of Students is not Important | | |
| Ethnicity of Students Matters | | |
| Ethnicity of Students does not Matter | | |
| Language Proficiency (i.e., L1 or L2) of Students Matters | | |
| Language Proficiency (i.e., L1 or L2) of Students does not Matter | | |
| Culturally-relevant Instruction Matters | | |
| Culturally-relevant Instruction does not Matter | | |
| SES of Students Matters | | |
| SES of Students does not Matter | | |
| Size of Group Matters | | |
| Size of Group does not Matter | | |
| Equality of Power among Students Matters | | |
| Equality of Power among Students does not Matter | | |
| Duration of Intervention Matters | | |
| Duration of Intervention does not Matter | | |
| Setting (i.e., segregated, cooperative, ESL or EFL, lab or classroom, urban or rural) Matters | | |
| Setting does not Matter | | |
| Journal Quality Matters | | |
| Journal Quality does not Matter | | |
| Sample Size Matters | | |
| Sample Size does not Matter | | |

First, researchers disagree about the importance of the particular method, whether cooperative, collaborative, peer tutoring, or some set of specific approaches (e.g., Jigsaw, Learning Together, STAD, TGT). The clearest distinction appears to be between L1 researchers, who generally agree that the method matters (though which method is ultimately superior remains debatable), and L2 researchers, who typically do not report differences between specific methods. To be fair, this largely reflects the nascent state of L2 research, and many of the studies listed in Table

While considerable debate exists within and across L1 and L2 literatures about which peer-mediated method is most effective, there is strong consensus that more structured approaches produce bigger gains than less-structured approaches. Despite this strong consensus, theoretical (

Notably, several variables of equity mentioned in the Statement of the Problem in Chapter 1 appear to be missing, or at least largely ignored, in the above list, including: adequate facilities, context of reception, preparation of teachers to work with ELLs, attitudes and beliefs of teachers towards ELLs, relations of power between teachers and ELLs, and length of residence of ELLs. To the extent possible, these variables will also be coded when reviewing studies for inclusion in this meta-analysis. However, the absence of these variables from the extant literature probably supports the assertion that the field of peer-mediated learning studies for ELLs remains largely driven by psychological theory and that sociological perspectives remain underrepresented (e.g.,

Methods

Research Questions

Chapter 1 presented the two fundamental research questions driving this meta-analysis; however, as indicated in the literature review in Chapter 2, there are a number of substantive theoretical, instructional, and methodological variables of potential interest. Consequently, formal hypotheses regarding the key variables of interest are presented below.

- Is peer-mediated instruction effective for promoting language, academic, or attitudinal learning for English language learners in K-12 settings?

a. Hypothesis 1a: Test of HA: Interventions testing the effectiveness of peer-mediated forms of learning against teacher-centered or individualistic control groups report language outcome effect sizes that are significantly larger.

b. Hypothesis 1b: Test of HA: Interventions testing the effectiveness of peer-mediated forms of learning against teacher-centered or individualistic control groups report academic outcome effect sizes that are significantly larger.

c. Hypothesis 1c: Test of HO: Interventions testing the effectiveness of peer-mediated forms of learning against teacher-centered or individualistic control groups report attitudinal outcome effect sizes that are not significantly different.

- What variables in instructional design, content area, setting, learners, or research design moderate the effectiveness of peer-mediated learning for English language learners?

a. Hypothesis 2a: Test of HO: Interventions testing the effectiveness of cooperative, collaborative, and peer tutoring approaches report effect sizes that are not significantly different.

b. Hypothesis 2b: Test of HO: Interventions testing the effectiveness of peer-mediated approaches in English-as-a-Second-Language (ESL) and English-as-a-Foreign-Language (EFL) settings report effect sizes that are not significantly different.

c. Hypothesis 2c: Test of HO: Interventions testing the effectiveness of peer-mediated approaches in elementary, middle school, and high school settings report effect sizes that are not significantly different.

d. Hypothesis 2d: Test of HO: Interventions testing the effectiveness of peer-mediated approaches in laboratory and classroom settings report effect sizes that are not significantly different.

e. Hypothesis 2e: Test of HO: Interventions testing the effectiveness of peer-mediated approaches as part of complex interventions and those testing just peer-mediation report effect sizes that are not significantly different.

f. Hypothesis 2f: Test of HO: Interventions testing the effectiveness of peer-mediated approaches with students from different language backgrounds report effect sizes that are not significantly different.

g. Hypothesis 2g: Test of HO: Interventions testing the effectiveness of peer-mediated approaches with students from high- and low-SES backgrounds report effect sizes that are not significantly different.

h. Hypothesis 2h: Test of HA: High-quality studies report effect sizes that are significantly larger than low-quality studies.

i. Hypothesis 2i: Test of HA: Studies of longer duration report effect sizes that are significantly larger than short-duration studies.

- In what ways do select issues of power and equity impact the effectiveness of peer-mediated methods?

a. Hypothesis 3a: Test of HA: Studies conducted in settings where ELLs are segregated from their English-speaking peers will report significantly lower effect sizes than studies conducted in settings where ELLs are integrated with non-ELLs.

b. Hypothesis 3b: Test of HA: Studies conducted in settings that authors describe as having adequate facilities will report significantly higher effect sizes than studies conducted in settings that authors describe as inadequate.

c. Hypothesis 3c: Test of HA: Studies conducted with ELL-certified teachers will report significantly higher effect sizes than studies in which teachers do not possess specialized certifications to work with ELLs.

d. Hypothesis 3d: Test of HA: Studies testing interventions described by the authors as at least partially culturally-relevant will report larger effect sizes than studies that do not make culturally-relevant claims.

e. Hypothesis 3e: Test of HA: Years of teaching experience will be positively correlated with effect sizes.

f. Hypothesis 3f: Test of HA: Studies reporting interventions that utilize students’ native language during instruction will report larger effect sizes than studies using only students’ second language (i.e., English) for instruction.
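Several of the moderator hypotheses above (e.g., 2a through 2g) ask whether effect sizes differ across categories of studies. One common way to test such a contrast is a between-groups Q statistic built from inverse-variance weighted subgroup means. The sketch below assumes a fixed-effect model and uses invented effect sizes for illustration; this section does not specify the analytic model actually used, and all function names are hypothetical.

```python
def pooled(effects, variances):
    """Inverse-variance weighted mean and its variance (fixed-effect)."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    mean = sum(w * e for w, e in zip(weights, effects)) / total
    return mean, 1.0 / total


def q_between(groups):
    """Between-groups Q for a categorical moderator.

    groups maps a subgroup label to a list of (effect, variance) pairs.
    Returns (Q, degrees of freedom, per-group summaries).
    """
    summaries = {}
    for label, studies in groups.items():
        effects = [e for e, _ in studies]
        variances = [v for _, v in studies]
        summaries[label] = pooled(effects, variances)
    means = [m for m, _ in summaries.values()]
    variances = [v for _, v in summaries.values()]
    grand_mean, _ = pooled(means, variances)
    # Weighted squared deviations of subgroup means from the grand mean.
    q = sum((m - grand_mean) ** 2 / v for m, v in zip(means, variances))
    return q, len(groups) - 1, summaries


# Invented data for a setting contrast like Hypothesis 2b (not real results).
example = {
    "ESL": [(0.4, 0.04), (0.6, 0.04)],
    "EFL": [(0.9, 0.04), (1.1, 0.04)],
}
q, df, by_group = q_between(example)
print(q, df)
```

Under the fixed-effect assumption, Q is compared against a chi-square distribution with the returned degrees of freedom; a value below the critical threshold is consistent with a null hypothesis of no difference between subgroups.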

Criteria for Inclusion and Exclusion of Studies

A number of researchers argue that not enough experimental evaluations of intervention effectiveness exist in the ELL literature (e.g.

Types of Studies

Both experimental and quasi-experimental studies were included in the review. For studies in which non-random assignment was used, studies must have included pre-test data, or must have statistically controlled for pre-test differences (e.g., ANCOVA). Similarly, studies which tested more than one treatment against a control group were included as long as one treatment could readily be identified as the focal treatment. If a study did not include a control group, it was excluded.

Although 20 years is a common standard for study inclusion, studies that are older than 20 years were included if they met the other criteria because scarcity of research suggests that older studies may be necessary to provide sufficient power for the detection of effects and moderator analyses.

Finally, for practical purposes studies must have been published in English, though the research may have occurred in any country with participants of any nationality. In addition, the target language must have been English in order to facilitate direct comparisons to ELLs in US schools; however, participants may have represented any language background, and instruction could have occurred in any language, as well.

Types of Participants and Interventions

Studies must have tested the effects of peer-learning involving students between the ages of 3 and 18, again in order to facilitate comparisons to US students in K-12 educational settings. For example, in studies of peer tutoring, both students for whom outcomes are measured and students who act as tutors must have been within this age range to preserve the focus on “peer” interactions. Also, participants must have included students identified as English language learners (though methods of identification and definitions of ELL varied), and results must have been reported either exclusively for ELLs or disaggregated for ELLs. For example, the inclusion of studies conducted internationally necessitated the inclusion of students learning English as a Foreign Language (EFL) and students in the United States learning English as a Second Language (ESL). The difference in settings (e.g., immersion in an English-dominant environment for ESL students) makes the process of language acquisition very different, but for purposes of this synthesis, both of these types of learners were subsumed under the ELL category.

Interventions may have utilized a number of instructional activities, but peer-peer interaction must have been a focal aspect of the intervention. Furthermore, comparison groups must not have received instruction for which peer-mediated learning was widely-used, and studies that only provided a cooperative intervention were coded separately from those that involved more complex interventions in which peer-mediated methods were just one component (e.g., Success for All). Studies for which peer-peer interaction could not be identified as a focal feature of the intervention were excluded, as were studies for which comparison groups used large amounts of peer assistance.
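The inclusion and exclusion criteria described in the preceding subsections can be summarized as a simple screening routine. The sketch below is only an illustration of how those rules combine; the record fields and function name are hypothetical and do not reproduce the coding instrument used in this study.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StudyRecord:
    """Hypothetical screening record; field names are illustrative only."""
    random_assignment: bool
    pretest_or_statistical_control: bool
    has_control_group: bool
    published_in_english: bool
    target_language_is_english: bool
    youngest_participant_age: int
    oldest_participant_age: int
    ell_results_separable: bool
    peer_interaction_is_focal: bool
    comparison_uses_extensive_peer_work: bool


def exclusion_reasons(study: StudyRecord) -> List[str]:
    """Return the criteria a study fails; an empty list means eligible."""
    reasons = []
    if not study.has_control_group:
        reasons.append("no control group")
    if not study.random_assignment and not study.pretest_or_statistical_control:
        reasons.append("non-random assignment without pre-test data or control")
    if not study.published_in_english:
        reasons.append("not published in English")
    if not study.target_language_is_english:
        reasons.append("target language is not English")
    if study.youngest_participant_age < 3 or study.oldest_participant_age > 18:
        reasons.append("participants outside ages 3 to 18")
    if not study.ell_results_separable:
        reasons.append("results not reported exclusively or separately for ELLs")
    if not study.peer_interaction_is_focal:
        reasons.append("peer interaction is not a focal feature")
    if study.comparison_uses_extensive_peer_work:
        reasons.append("comparison group used extensive peer assistance")
    return reasons


# A quasi-experimental study with pre-test data that meets every criterion.
eligible = StudyRecord(False, True, True, True, True, 9, 11, True, True, False)
print(exclusion_reasons(eligible))  # prints []
```

Returning the list of failed criteria, rather than a single yes/no flag, keeps a record of why each study was excluded.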

Types of Outcomes and Instruments

Cooperative learning has been used to improve almost every conceivable academic achievement outcome, but it has also been widely used to improve a number of behavioral and social outcomes. Therefore, nearly any outcome was coded, though some outcomes were not assessed frequently enough to allow inferential statistical analyses. To facilitate coding and analysis, outcomes were divided into five conceptually-distinct categories; and while variety existed within categories (e.g., math and social studies within academic outcomes), it was presumed that enough similarity existed to facilitate comparative analyses. These categories are: oral language, written language, other academic, attitudinal, and social. Oral language outcomes were those that focused on speaking and listening, while written language outcomes were those that included primarily reading and writing. Other academic outcomes included content-area outcomes from subjects like science, social studies, and mathematics. Attitudinal outcomes were psychological in nature and consisted almost entirely of measures of motivation, and social outcomes were behavioral measures of things like interactions with native speakers. In some cases, measures were broad-band, complex measures that included aspects of several of these categories. For instance, the Revised Woodcock-Johnson Test of Achievement is a widely-used instrument that explicitly measures oral language, reading fluency and comprehension, and academic achievement. In some cases, specific subtests were reported and, when possible, these sub-test scores were coded separately into one of the above categories. However, in other cases, only composite scores were reported, and in some of these cases descriptions of the measure seemed to favor one category over another. In the remaining cases, the measures were simply too inclusive to reliably choose one category over another. In these cases, in order to maintain inter-rater reliability and to provide a systematic coding approach that could be replicated later, written language was chosen as the default outcome category for complex outcomes that measured more than one category.

Similarly, a number of instruments were used to assess effectiveness, including norm-referenced tests, researcher and teacher-created measures, and psychological and sociological instruments. These characteristics were coded to enable both inferential moderator and descriptive analyses, and they followed the same construct-driven division of results just discussed.

Search Strategy for Identifying Relevant Studies

Multiple databases were searched using consistent combinations of keywords, though the specific format varied according to individual database conventions (e.g., AND used between terms for the PsycINFO search). Several databases were combined into simultaneous searches. For instance, the ProQuest search included the following individually-selected databases: Dissertations at Vanderbilt University and Dissertation Abstracts International, Ethnic News Watch, and several subsets of the Research Library collection (core, education, humanities, international, multicultural, psychology, and social sciences). Similarly, the PsycINFO search included the following manually-selected databases: ERIC, IBSS, CSA Linguistics, Language, and Behavior, PsycARTICLES, PsycINFO, and Sociological Abstracts.

Furthermore, potentially-relevant studies were cross-cited using the bibliographies of previous syntheses and identified studies. All studies were identified through the following process: titles and abstracts were first skimmed to identify potentially-relevant studies; if a study appeared to be a possible candidate, the full study was retrieved to the extent possible. If the study was not immediately available, Interlibrary Loan requests and librarian searches were pursued. If these did not succeed, attempts were made to contact the author of the study. Studies not retrieved at that point were deemed unavailable.

“Near-miss” studies were excluded at this point if closer examination revealed that they violated inclusion criteria or if an effect size could not be extracted from the information provided. As above, attempts were made to retrieve necessary information from the authors, though in many cases data were no longer available or the authors could not be reached. The “near miss” studies are included in the references section, but no further analyses were conducted with these studies.

The researcher functioned as the primary coder and coded all of the studies. Reliability of the inclusion and exclusion criteria, as well as of the coding of key substantive and methodological variables, was assessed by comparing the primary coding with the coding of two independent coders. The additional coders were doctoral students with training in experimental and statistical methods in the ExpERT program at Vanderbilt University. After some discussion of the inclusion and exclusion criteria and practice with an example, the other coders made inclusion/exclusion decisions for a sub-sample of 30 abstracts.

Description of Methods Used in Primary Studies

As already discussed, previous syntheses suggest that high-quality experimental studies are scarce in this field. Consequently, it seems appropriate to cast a wide net, a long-standing approach to social science syntheses (e.g.

Criteria for Determination of Independent Findings

As is often the case in meta-analysis, some studies reported data on several outcomes, and occasionally multiple measures of the same construct were provided by individual studies. For instance, a study may have measured outcomes of reading comprehension, reading fluency, and attitudes toward reading. Furthermore, both researcher-specific and state-mandated measures of reading comprehension were sometimes reported. For all such cases of multiple measures, the following general approach was used. First, every measure was coded in order to provide simple descriptive summaries of the kinds and frequencies of outcomes reported in the literature. Then, as part of the coding, outcomes were categorized into one of the five primary constructs outlined above. Finally, for situations in which multiple outcomes and/or measures were provided for any given construct in a single study (e.g. two different academic outcomes), a focal measurement was identified. In general, the most reliable instrument was coded as the focal instrument, though in cases where reliability information was not provided, the most widely-used measure was chosen. If neither of these criteria could be employed, the first measure discussed was chosen as a default. Although many meta-analyses average effects across measures, individual measures were utilized in this review because the measures varied considerably within constructs (e.g. math, reading and science within academic) and because coding of individual measures preserves the possibility of additional analyses at a later time. In any case, only one measurement for each of the five main constructs was identified as a focal instrument, allowing analyses within constructs that did not violate assumptions of independence.
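The selection rule for a focal measure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Measure` record, its field names, and the use of a numeric `usage_count` as a proxy for "most widely-used" are hypothetical and do not come from the coding manual. The sketch also assumes that when only some measures report reliability, the most reliable among those is chosen.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Measure:
    """Hypothetical record for one measure of a construct within one study."""
    name: str
    reliability: Optional[float]  # reported reliability coefficient, None if unreported
    usage_count: Optional[int]    # assumed proxy for how widely used the measure is
    order: int                    # position in which the study discusses the measure


def choose_focal_measure(measures: List[Measure]) -> Measure:
    """Pick one focal measure: most reliable, else most widely used, else first discussed."""
    with_reliability = [m for m in measures if m.reliability is not None]
    if with_reliability:
        return max(with_reliability, key=lambda m: m.reliability)
    with_usage = [m for m in measures if m.usage_count is not None]
    if with_usage:
        return max(with_usage, key=lambda m: m.usage_count)
    return min(measures, key=lambda m: m.order)


# Hypothetical measures, not taken from any included study.
reading_measures = [
    Measure("researcher-made comprehension test", None, None, 1),
    Measure("state reading assessment", 0.91, None, 2),
    Measure("fluency probe", 0.84, None, 3),
]
focal = choose_focal_measure(reading_measures)
print(focal.name)
```

Keeping every measure in the coded data and selecting the focal one at analysis time mirrors the rationale given above: the full set of measures remains available for later analyses, while each construct contributes a single effect size per study.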

Details of Study Coding Categories

A number of study and outcome characteristics were coded in order to enable analyses of the primary research questions as well as a number of potentially-relevant moderator analyses. A brief summary of the variables coded is provided here. Essentially, the variables included: study descriptors like design and quality, participant descriptors like age and language background, treatment descriptors like duration and frequency, and a variety of outcome descriptors. Key outcome descriptors included primary data like means and standard deviations as well as secondary calculations like effect sizes. While effect size statistics are discussed in more detail elsewhere, as much relevant information as necessary for effect size calculations was identified and coded, in keeping with guidelines provided by

Moderating variables are those that may affect overall effect size estimates, leading to different effect size estimates for different values of the moderator. A number of study, treatment, and participant variables were analyzed as moderators in CMA and as correlates in SPSS. Separate analyses were conducted for each of these variables, and the results for these moderator analyses are presented separately for each moderator of interest. A potential limitation of multiple moderator analysis is that it does not account for covariation amongst moderators, and meta-regression is an alternative analysis that allows examination of the independent contributions of each variable to variance in the effect sizes. To the extent possible, meta-regression analyses of key moderators were conducted to determine the unique contribution made to the variance of outcomes by methodological and substantive moderators. At minimum, single-variable regressions of potentially influential variables were run to test their viability as moderator variables, even if multivariate regression was untenable because of small sample size. Exploratory analyses of substantively important variables also included correlational analysis and descriptive statistics.

Finally, coding reliability was assessed through measurement of inter-rater reliability. Following exclusion/inclusion reliability assessment, the researcher met with the additional coders to discuss and practice using the coding manual on three examples. Following this initial training, the coders coded five studies independently. The researcher then met again with the coders to discuss the initial coding and to practice together again on two additional examples. Following the second training session, the two additional coders coded 10 more studies independently. Thus, the coders independently coded 15 studies each, with a total subsample of 25 studies included for the assessment of reliability. The studies were drawn evenly from published and unpublished studies. Cohen’s Kappa was calculated for categorical variables, while Pearson’s r was calculated for continuous variables. For variables with reliability coefficients low enough to be close to chance agreement, variable constructs were reexamined and disagreements were examined case by case to reach consensus.
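For readers unfamiliar with the statistic, Cohen's Kappa for two coders can be sketched as follows. This is a minimal illustration of the standard formula (observed agreement corrected for the agreement expected by chance), not the procedure actually run for this study; the function name and the example codes are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(codes_a: Sequence[Hashable], codes_b: Sequence[Hashable]) -> float:
    """Cohen's Kappa for two coders who rated the same items on a categorical variable."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("Both coders must rate the same non-empty set of items.")
    n = len(codes_a)
    # Proportion of items on which the two coders agree.
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # Agreement expected by chance, from each coder's marginal category frequencies.
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / n**2
    if expected == 1.0:
        return 1.0  # both coders used a single identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical design codes ("E" = experiment, "Q" = quasi-experiment) for four studies.
primary_coder = ["E", "Q", "Q", "E"]
second_coder = ["E", "Q", "E", "E"]
kappa = cohens_kappa(primary_coder, second_coder)
print(round(kappa, 3))
```

A value near zero indicates agreement close to chance, which is the situation in which variable constructs were reexamined as described above.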

The effect size statistic (ES) calculated was the Standardized Mean Difference(ESSM), which is appropriate for group contrasts made across a variety of dependent measures (

\(\overline{ES}_{SM} = \dfrac{\overline{X}_{G1} - \overline{X}_{G2}}{s_{pooled}}, \qquad s_{pooled} = \sqrt{\dfrac{s_{1}^{2} (n_{1}-1) + s_{2}^{2} (n_{2}-1) }{n_{1} + n_{2} - 2}}\).

Thus, the mean effect size is calculated by dividing the difference between the mean for the treatment group (\(\overline{X}_{G1}\)) and the mean for the control group (\(\overline{X}_{G2}\)) by the pooled standard deviation (\(s_{pooled}\)). We see in the second formula that the pooled standard deviation is equal to the square root of the sum of the weighted variance for the treatment group (\(s_{1}^{2}(n_{1}-1)\)) and the weighted variance for the control group (\(s_{2}^{2}(n_{2}-1)\)) divided by the pooled degrees of freedom (\(n_{1} + n_{2} - 2\)). In these formulas, \(s^{2}\) is the observed variance and \(n\) is the sample size.
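The two formulas above translate directly into code. The following Python sketch is illustrative only; the function names and the example values are hypothetical and are not drawn from any included study.

```python
import math


def pooled_sd(s1: float, n1: int, s2: float, n2: int) -> float:
    """Pooled standard deviation of two groups, given each group's SD and sample size."""
    weighted_variances = s1**2 * (n1 - 1) + s2**2 * (n2 - 1)
    return math.sqrt(weighted_variances / (n1 + n2 - 2))


def standardized_mean_difference(mean_t: float, s_t: float, n_t: int,
                                 mean_c: float, s_c: float, n_c: int) -> float:
    """Treatment-minus-control mean difference expressed in pooled-SD units."""
    return (mean_t - mean_c) / pooled_sd(s_t, n_t, s_c, n_c)


# Hypothetical treatment and control groups of 30 students each.
es_sm = standardized_mean_difference(55.0, 10.0, 30, 50.0, 10.0, 30)
print(round(es_sm, 3))
```

With equal standard deviations of 10 in both groups, the pooled standard deviation is also 10, so a five-point difference in means corresponds to half a standard deviation.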

The \(ES_{SM}\) is known to be upwardly biased for small samples. Thus, the Hedges G transformation is traditionally used to correct for this bias:

\(G = D \left(1-\dfrac{3}{4(n_{1} +n_{2}) - 9}\right)\).

Here, Cohen’s D = \(ES_{SM}\) is the biased effect size estimate, which is multiplied by a correction for small sample bias. This adjusted effect size, \(ES'_{SM}\), has its own SE and inverse variance weight formulas, as illustrated in

\(se = \sqrt{\frac{n_{1} + n_{2}}{n_{1}n_{2}} + \frac{\overline{ES}_{SM}^{2}}{2(n_{1} + n_{2})}}, \qquad w = \dfrac{1}{se^{2}}\)
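A short Python sketch of the small-sample correction, its standard error, and the fixed-effects inverse variance weight follows. It assumes the conventional form of the standard error, in which the effect size term is squared; the function names and example values are hypothetical.

```python
import math


def hedges_g(d: float, n1: int, n2: int) -> float:
    """Apply the small-sample correction to a standardized mean difference d."""
    return d * (1 - 3 / (4 * (n1 + n2) - 9))


def standard_error(g: float, n1: int, n2: int) -> float:
    """Standard error of the corrected effect size (assumes the squared-ES form)."""
    return math.sqrt((n1 + n2) / (n1 * n2) + g**2 / (2 * (n1 + n2)))


def fixed_effects_weight(se: float) -> float:
    """Inverse variance weight used in a fixed-effects model."""
    return 1 / se**2


# Hypothetical study: d = 0.5 with 30 students per group.
g = hedges_g(0.5, 30, 30)
se = standard_error(g, 30, 30)
w = fixed_effects_weight(se)
print(round(g, 4), round(se, 4), round(w, 2))
```

The correction shrinks the estimate only slightly at this sample size, and its influence grows as the combined sample gets smaller.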

However, the illustrated weight formula is appropriate only for fixed effects models, which assume invariant effect sizes across studies. This assumption is untenable given the broad constructs included in this meta-analysis; consequently, a random effects model was utilized, and the formulas for this model include another variance component in the denominator of the weight formula:

\(w_{i} = \dfrac{1}{se_{i}^{2} + \hat{\nu}_{\theta}}\)

In addition to the sampling error represented by the term \(se_{i}^{2}\), the random effects weight includes a term for heterogeneous effect sizes, \(\hat{\nu}_{\theta}\). This additional term is a constant applied to every study, and it can be computed as a method of moments estimate using the Q statistic, which is a measure of the heterogeneity of effect sizes within the sample. The formula for \(\hat{\nu}_{\theta}\) is:

\(\hat{\nu}_{\theta} = \dfrac{Q - (k - 1)}{\sum w_{i} - \dfrac{\sum w_{i}^{2}}{\sum w_{i}}}\)

In this formula, Q is the heterogeneity statistic provided in standard CMA output, k is the number of effect sizes included in the analysis, and w is the fixed-effects weight calculated as before.
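The random effects computation can be sketched in Python as follows. The sketch assumes the standard method of moments form of the between-study variance, \((Q - (k - 1)) / (\sum w - \sum w^{2} / \sum w)\), and the common convention of truncating negative estimates to zero; both are assumptions of this illustration rather than documented CMA behavior, and all function names and data are hypothetical.

```python
import math
from typing import List, Tuple


def heterogeneity_q(effects: List[float], ses: List[float]) -> float:
    """Q statistic: weighted squared deviations from the fixed-effects mean."""
    w = [1 / se**2 for se in ses]
    fixed_mean = sum(wi * es for wi, es in zip(w, effects)) / sum(w)
    return sum(wi * (es - fixed_mean) ** 2 for wi, es in zip(w, effects))


def between_study_variance(effects: List[float], ses: List[float]) -> float:
    """Method of moments estimate of the between-study variance component."""
    w = [1 / se**2 for se in ses]
    q = heterogeneity_q(effects, ses)
    k = len(effects)
    denominator = sum(w) - sum(wi**2 for wi in w) / sum(w)
    # Assumed convention: a negative estimate is truncated to zero.
    return max(0.0, (q - (k - 1)) / denominator)


def random_effects_mean(effects: List[float], ses: List[float]) -> Tuple[float, float]:
    """Random-effects mean effect size and its standard error."""
    v = between_study_variance(effects, ses)
    w_re = [1 / (se**2 + v) for se in ses]
    mean = sum(wi * es for wi, es in zip(w_re, effects)) / sum(w_re)
    return mean, math.sqrt(1 / sum(w_re))


# Two hypothetical studies with very different effects and equal precision.
effects, ses = [0.0, 1.0], [0.1, 0.1]
v_theta = between_study_variance(effects, ses)
mean_re, se_re = random_effects_mean(effects, ses)
print(round(v_theta, 3), round(mean_re, 3), round(se_re, 3))
```

Because the same variance component is added to every study's sampling variance, the random effects weights are more nearly equal than the fixed effects weights, and the standard error of the mean is larger.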

As indicated, heterogeneity was assessed using the Q statistic, which describes the degree to which effect sizes vary beyond the degree of expected sampling error. \(I^{2}\) is another useful measure of heterogeneity, and it indicates the amount of heterogeneity that exists between studies (

Additionally, outliers can be particularly problematic, because extreme observations distort both the means of the distributions and the calculations of variance. Furthermore, as meta-analysis is primarily a survey methodology interested in synthesizing studies and describing typical effects, atypical results are not especially informative. Consequently, Tukey’s guidelines were employed to identify outliers (values above the 75th percentile + 3*IQR or below the 25th percentile - 3*IQR). Results above and below these values were Winsorized to these cut-off points.
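The Winsorizing step can be illustrated with a short Python sketch. The quartile method is an assumption of this illustration (statistical packages compute percentiles in slightly different ways, and the method used in SPSS for this study is not specified here); the function name and the example effect sizes are hypothetical.

```python
import statistics
from typing import List


def winsorize_tukey(values: List[float], multiplier: float = 3.0) -> List[float]:
    """Pull values beyond Tukey's outer fences back to the fence values."""
    # Assumed quartile method: linear interpolation that includes both endpoints.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence = q1 - multiplier * iqr
    upper_fence = q3 + multiplier * iqr
    return [min(max(v, lower_fence), upper_fence) for v in values]


# Hypothetical effect sizes with one extreme value.
raw = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 5.0]
adjusted = winsorize_tukey(raw)
print(adjusted[-1])
```

In this example the quartiles are 0.3 and 0.7, so the upper fence is 0.7 + 3 × 0.4 = 1.9, and only the extreme value of 5.0 is recoded.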

Another source of potential error involves designs that utilize cluster randomization in which intact groups are assigned en masse to conditions, and unless corrected, the standard errors upon which the inverse variance weights are based would be incorrect (

Similarly, in several studies, pre-test data were available, but the original researchers did not use them in their post-test analyses; that is, pre-test differences were left unadjusted in final analyses. In these situations, post hoc adjustments were made by this researcher to control for pre-test differences. Specifically, pre-test means were subtracted from post-test means for both the treatment and the control groups, and these differences were used as the mean gain scores from which effect sizes were computed.
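The gain score adjustment can be sketched as follows. The choice of standard deviation for standardizing the gain difference is not specified above, so the sketch simply takes a pooled standard deviation as an input; that choice, the function name, and the example values are assumptions of this illustration.

```python
def gain_score_effect_size(pre_t: float, post_t: float,
                           pre_c: float, post_c: float,
                           s_pooled: float) -> float:
    """Effect size computed from mean gains rather than from post-test means alone."""
    gain_t = post_t - pre_t  # mean gain in the treatment group
    gain_c = post_c - pre_c  # mean gain in the control group
    # Assumption: the gain difference is standardized by a supplied pooled SD.
    return (gain_t - gain_c) / s_pooled


# Hypothetical study in which the treatment group started four points ahead.
unadjusted = (58.0 - 52.0) / 10.0
adjusted = gain_score_effect_size(48.0, 58.0, 44.0, 52.0, 10.0)
print(round(unadjusted, 2), round(adjusted, 2))
```

In this hypothetical case, two thirds of the apparent post-test advantage reflects a pre-existing difference, so the adjusted estimate is much smaller than the unadjusted one.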

Finally, a number of alternate computations were occasionally necessary. For instance, some studies did not provide ES estimates, and a number of formulations exist for converting other commonly reported data into \(ES_{SM}\). These other data include means and standard deviations, t-tests and degrees of freedom, and p values and sample sizes, and effect sizes using these alternative data were calculated as necessary.
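As one example of such a conversion, an independent-samples t statistic and the two group sizes are enough to recover the standardized mean difference. The Python sketch below uses the standard identity for that case; the function name and example values are hypothetical, and conversions from p values (which require inverting the t distribution) are not shown.

```python
import math


def smd_from_t(t: float, n1: int, n2: int) -> float:
    """Standardized mean difference recovered from an independent-samples t statistic."""
    return t * math.sqrt((n1 + n2) / (n1 * n2))


# Hypothetical report: t = 2.0 with 50 students in each group.
es_from_t = smd_from_t(2.0, 50, 50)
print(round(es_from_t, 3))
```

The sign of the t statistic carries through, so the direction of the contrast (treatment minus control) must be checked against the original report before coding.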

Statistical Procedures and Conventions

General statistical analyses were computed using CMA and SPSS software; in particular, overall effect size analyses, some publication bias analyses, and moderator analyses were computed with CMA, and diagnostic and descriptive analyses were conducted with SPSS.

Results

Chapter Four presents the data obtained from descriptive, main effects, and moderator analyses, and Chapter Five will consider the extent to which the data answers the formal research questions detailed in Chapter Three. First, descriptive information is provided for the included sample of studies. Then, descriptive statistics, main effects analyses, and moderator analyses are provided for each of the outcome categories. Because each outcome category contains independent samples of effect sizes and because outcomes are assumed to be more conceptually similar within categories than between them, Chapter Four is organized primarily by outcome type to maintain statistical and conceptual clarity.

Included Sample

Initial keyword searches returned 17,613 results, of which 148 were unique and potentially relevant. Additionally, extant meta-analyses and syntheses (e.g.,

Included sample of studies.

| Lead Author | Year | Publication Type | Country | Construct | Design | Grade Level |
| --- | --- | --- | --- | --- | --- | --- |
| Alhaidari | 2006 | Dissertation | Saudi Arabia | Cooperative | Quasi-Experiment | Elementary |
| Alharbi | 2008 | Dissertation | Saudi Arabia | Cooperative | Experiment | High School |
| Almaguer | 2005 | Journal | USA | Peer Tutoring | Quasi-Experiment | Elementary |
| August | 1987 | Journal | USA | Peer Tutoring | Quasi-Experiment | Elementary |
| Banse | 2000 | Dissertation | Burkina Faso | Collaborative | Quasi-Experiment | High School |
| Bejarano | 1987 | Journal | Israel | Cooperative | Quasi-Experiment | Middle School |
| Brandt | 1995 | Dissertation | USA | Cooperative | Quasi-Experiment | High School |
| Bustos | 2004 | Dissertation | USA | Cooperative | Experiment | Elementary |
| Calderon | 1997 | Technical Report | USA | Cooperative | Quasi-Experiment | Elementary |
| Calhoun | 2007 | Journal | USA | Cooperative | Quasi-Experiment | Elementary |
| Chen | 2011 | Journal | USA | Cooperative | Quasi-Experiment | High School |
| Cross | 1995 | Technical Report | USA | Collaborative | Quasi-Experiment | High School |
| Dockrell | 2010 | Journal | England | Collaborative | Quasi-Experiment | Pre-K |
| Ghaith | 2003 | Journal | Lebanon | Cooperative | Quasi-Experiment | High School |
| Ghaith | 1998 | Journal | Lebanon | Cooperative | Quasi-Experiment | Middle School |
| Hitchcock | 2011 | Technical Report | USA | Cooperative | Quasi-Experiment | Elementary |
| Hsu | 2006 | Dissertation | Taiwan | Collaborative | Quasi-Experiment | High School |
| Johnson | 1983 | Journal | USA | Peer Tutoring | Experiment | Elementary |
| Jung | 1999 | Dissertation | South Korea | Peer Tutoring | Quasi-Experiment | Elementary |
| Khan | 2011 | Journal | Pakistan | Cooperative | Experiment | High School |
| Kwon | 2006 | Dissertation | South Korea | Collaborative | Quasi-Experiment | High School |
| Lin | 2011 | Journal | Taiwan | Cooperative | Quasi-Experiment | Middle School |
| Liu | 2010 | Journal | Taiwan | Collaborative | Quasi-Experiment | Middle School |
| Lopez | 2010 | Journal | USA | Collaborative | Quasi-Experiment | Elementary |
| Mack | 1981 | Dissertation | USA | Collaborative | Quasi-Experiment | Elementary |
| Martinez | 1990 | Dissertation | USA | Cooperative | Quasi-Experiment | Elementary |
| Prater | 1993 | Journal | USA | Cooperative | Experiment | Elementary |
| Sachs | 2003 | Journal | Hong Kong | Cooperative | Experiment | High School |
| Saenz | 2002 | Dissertation | USA | Peer Tutoring | Quasi-Experiment | Elementary |
| Satar | 2008 | Journal | Turkey | Collaborative | Experiment | High School |
| Slavin | 1998 | Technical Report | USA | Cooperative | Quasi-Experiment | Elementary |
| Suh | 2010 | Journal | South Korea | Collaborative | Quasi-Experiment | Elementary |
| Thurston | 2009 | Journal | Catalonia | Peer Tutoring | Quasi-Experiment | Elementary |
| Tong | 2008 | Journal | USA | Collaborative | Quasi-Experiment | Elementary |
| Uludag | 2010 | Dissertation | Jordan | Collaborative | Quasi-Experiment | Middle/High School |
| Vaughn | 2009 | Journal | USA | Peer Tutoring | Quasi-Experiment | Middle School |

The 37 included studies reported relevant data on 44 independent samples (i.e., several reports described multiple experiments or included independent samples) and contained a total of 132 outcomes. As indicated in the full coding manual (in the Excel spreadsheet that accompanies this dissertation Suppl. material

Summary of Key Variables in Included Sample

| Variable (n) | Category counts | | | |
| --- | --- | --- | --- | --- |
| Year (n=43) | Pre1980-1989 = 4 | 1990-1999 = 10 | 2000-2012 = 29 | |
| Publication Type (n=43) | Dissertation = 15 | Journal = 22 | Technical Report = 6 | |
| Country (n=43) | USA = 22 | Other = 21 | | |
| Setting (n=43) | ESL = 23 | EFL = 20 | | |
| Design (n=43) | Experimental = 8 | Quasi-experimental = 35 | | |
| Quality (n=43) | High = 26 | Medium = 13 | Low = 4 | |
| Dosage (Total Contacts) (n=43) | 0-30 = 17 | 31-90 = 13 | 91+ = 13 | |
| Construct (n=43) | Cooperative = 17 | Collaborative = 16 | Peer Tutoring = 10 | |
| Component (n=43) | Yes = 19 | No = 24 | | |
| Adequate Facilities (n=23) | Yes = 2 | No = 3 | Unknown = 18 | |
| Segregated (n=23) | Yes = 9 | No = 14 | | |
| Culturally Relevant (n=23) | Yes = 5 | No = 18 | | |
| Language of Instruction (n=43) | L1 only = 2 | Bilingual = 14 | L2 only = 14 | Unknown = 13 |
| In School (n=43) | Yes = 43 | No = 0 | | |
| Teacher Certification (n=43) | ELL Certified = 12 | Not ELL Certified = 2 | Unknown = 29 | |
| Teacher Experience (n=43) | 0-5 years = 3 | 6-10 years = 4 | 11+ years = 4 | Unknown = 32 |
| Teacher Ethnicity (n=43) | Same as Students’ = 7 | Different than Students’ = 1 | Unknown = 35 | |
| Grade Level (n=43) | Elementary = 22 | Middle = 8 | High = 13 | |
| Student Ethnicity (n=43) | Spanish = 20 | Asian = 8 | Other = 15 | |
| Student SES (n=43) | Low = 21 | High = 3 | Mixed = 1 | Unknown = 18 |
| Student Length of Residence (n=23) | 0-2 years = 1 | 2+ = 0 | Unknown = 22 | |

Key outcome variables.

| Total Outcomes = 62 | Number of Independent Outcomes by Construct | Number of Participants in Treatment Groups | Number of Participants in Control Groups |
| --- | --- | --- | --- |
| Oral Language | 14 | 843 | 787 |
| Written Language | 30 | 919 | 863 |
| Other academic | 6 | 220 | 451 |
| Attitudinal | 10 | 397 | 394 |
| Social | 0 | 0 | 0 |

As indicated in Table

Table

Oral Language Outcomes

Summary of Included Studies and Main Effects

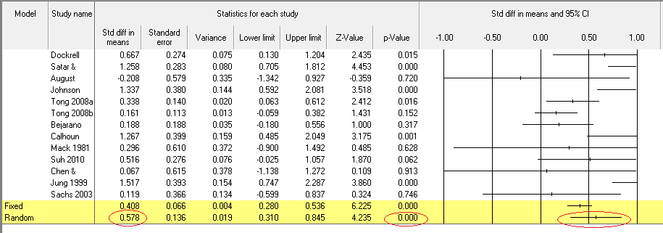

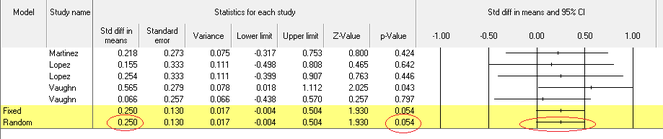

A random effects model of the un-corrected and un-Winsorized data provided a mean effect size estimate for the thirteen oral language outcomes of (.587, SE=.141, p<.001); however, after adjustments for outliers, pre-test differences, and cluster randomization, the mean effect size estimate decreased slightly and the variance decreased slightly (.578, SE=.136, p<.001), suggesting that the larger-than-average outliers and the effects of cluster randomization had very little impact on the original estimates. The adjusted distribution is illustrated by the forest plot in Fig.

Throughout the paper, random effects models are the default, primarily because the assumptions of the fixed model are generally untenable. Empirically, homogeneity analysis of the fixed model illustrates the considerable heterogeneity that exists within the observed sample, offering some empirical justification for the use of a random effects model. The Q statistic (37.213, df=12, p<.001) indicates that the observed effect sizes vary more than would be expected by sampling error alone, and the \(I^{2}\) statistic (67.753) indicates that approximately 68% of the observed variance in effect sizes exists between studies. Together, these statistics suggest that moderator analyses might provide insight into what factors influence the effectiveness of peer-mediated learning for ELLs.
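The relationship between the two heterogeneity statistics reported above can be checked with a short calculation. The Python sketch below uses the standard definition of the statistic as the share of Q in excess of its degrees of freedom, expressed as a percentage; the function name is hypothetical.

```python
def i_squared(q: float, df: int) -> float:
    """Percentage of observed variance in effect sizes attributable to between-study differences."""
    if q <= 0:
        return 0.0
    # Negative values (Q smaller than its degrees of freedom) are set to zero.
    return max(0.0, (q - df) / q) * 100


# Q and df as reported for the oral language outcomes.
oral_i2 = i_squared(37.213, 12)
print(round(oral_i2, 3))
```

Applied to the reported values, the calculation reproduces the percentage given in the text to three decimal places.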

Publication Bias for Oral Language Outcomes

The possibility of publication bias remains a persistent concern in meta-analysis, and the following analysis examines empirical evidence for the presence of publication bias in this sample and the extent to which it might distort the estimates. Lipsey and Wilson (1993, as cited in

A recoding of the type of publication variable into a dummy-coded variable with 1=published and 0=unpublished, indicated that 84.6% of the included sample had been published, while the other 15.4% were dissertations. The mean effect size for published studies (.377, SE=.067) is surprisingly much smaller than the mean effect size for unpublished studies (1.159, SE=.330). The difference between the mean effect sizes of -.782 provides a crude estimate of the upper bounds of potential publication bias. Of course, this simple difference does not adequately account for small sample bias nor does it employ inverse variance weights; consequently, appropriately meta-analytic tests of publication bias must also be utilized.

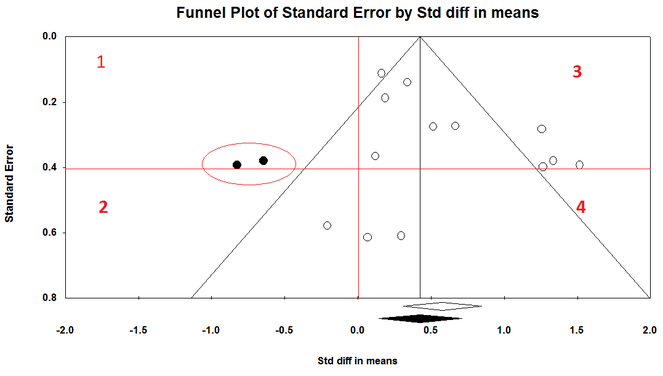

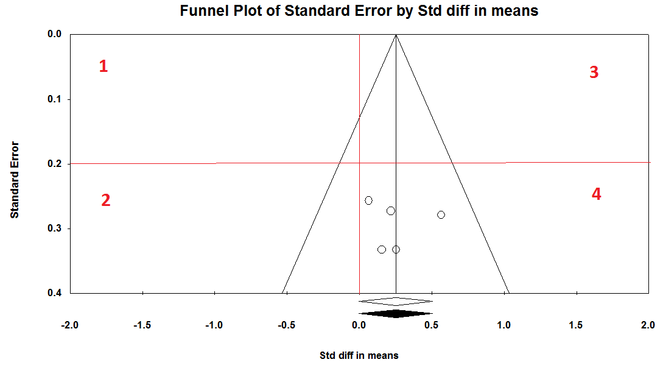

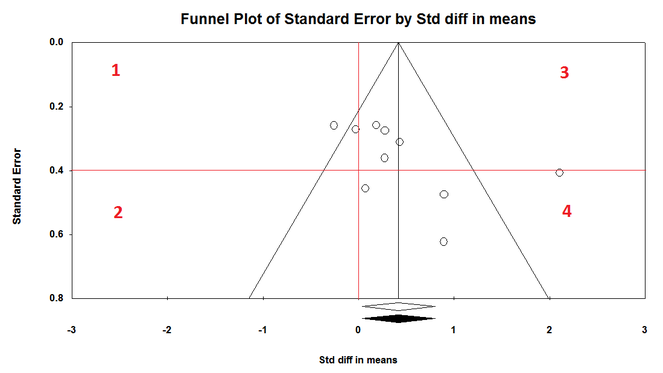

A look at a funnel plot with effect sizes plotted against standard errors is one meta-analytically-appropriate method of visually examining the distribution for the presence of publication bias. In this case, the standard error serves as a proxy for sample size, and because smaller samples are much more likely to lack the statistical power required to attain statistical significance, we look at the small-sample studies to detect publication bias. If there is no such bias, we expect small studies with negative and null results to be as frequent as small studies with positive results. The following funnel plot in Fig.

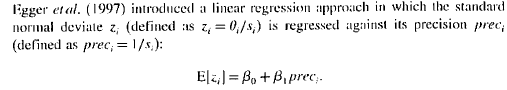

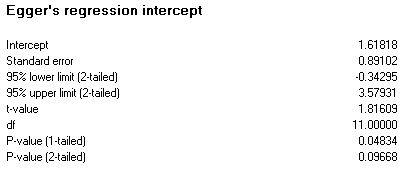

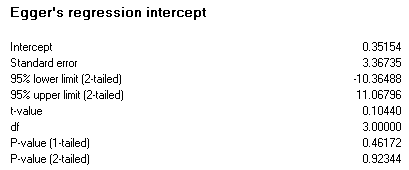

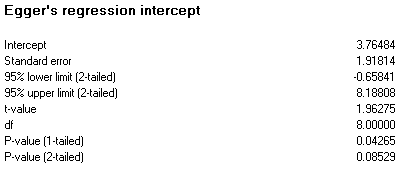

A computational alternative to visual inspection of the distribution is Egger’s regression intercept, as discussed in

Because we assume that publication bias will be positive, that is, in the direction of significantly positive effects and because it provides a more conservative estimate of significance, the p value of the single-tailed test at α=.05 is typically reported. The null hypothesis tests whether the ratio of the ES/se is > 0. While some debate exists about whether the single-tailed or two-tailed test is more appropriate, we see in Fig.
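The logic of Egger’s regression can be sketched in Python. In its usual form, each study’s standardized effect (ES/se) is regressed on its precision (1/se), and an intercept far from zero suggests funnel plot asymmetry. The sketch below uses ordinary least squares and returns the intercept with its standard error, which would then be compared against a t distribution with k - 2 degrees of freedom. It is not the CMA routine used in this study, and the function name and data are hypothetical.

```python
import math
from typing import List, Tuple


def egger_regression(effects: List[float], ses: List[float]) -> Tuple[float, float, float]:
    """Return (intercept, slope, se_of_intercept) from regressing ES/se on 1/se."""
    y = [es / se for es, se in zip(effects, ses)]  # standardized effects
    x = [1 / se for se in ses]                     # precisions (must not all be equal)
    k = len(x)
    mean_x, mean_y = sum(x) / k, sum(y) / k
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    residual_variance = residual_ss / (k - 2)
    se_intercept = math.sqrt(residual_variance * (1 / k + mean_x**2 / sxx))
    return intercept, slope, se_intercept


# Hypothetical studies in which the smallest (least precise) study has the largest effect.
asym_intercept, asym_slope, asym_se = egger_regression([0.2, 0.4, 0.9], [0.1, 0.2, 0.4])
print(round(asym_intercept, 3), round(asym_slope, 3))
```

When every study estimates the same effect regardless of its precision, the standardized effects are exactly proportional to precision and the intercept is zero; small studies with inflated effects pull the intercept upward.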

In conclusion, these varied analyses provide very little evidence of publication bias in the distribution of studies reporting oral language outcomes. Furthermore, any bias is small enough that if a sufficient number of small-sample studies with null or negative results were included to make the distribution more symmetrical, the mean effect size estimate would hardly change. As indicated, very few studies in the sample have null or negative effect size estimates; as such, it remains distinctly possible that the literature search failed to uncover studies that, for one reason or another, were not published because they failed to yield significantly positive results.

Moderator Analyses for Oral Language Outcomes

The distribution of oral language effect sizes was heterogeneous, as indicated by the Q and \(I^{2}\) statistics; consequently, we might expect post hoc examination of moderator variables to uncover some statistically-significant moderators. However, the sample is modest (n=13) and underpowered for meta-regression analysis of the partial contributions of multiple independent variables. Given these limitations, analysis of moderators is primarily motivated by a priori questions of interest, and findings are qualified by the recognition that small differences may be difficult to detect with the small sample employed and that confounding and lurking variables may temper any observed differences between sub-groups. Occasionally, when a categorical variable had too few studies in one or more categories, the variable was recoded, often into a binary variable, to enable a more reliable comparison. Table

Summary of moderator analyses for oral language outcomes.

| Moderator (Sub-group) | Number in sub-group | Effect Size Point-estimate | Standard Error of estimate | p-value of estimate | Q-within of Sub-group | \(I^{2}\) of Sub-group | Q-between in Random Effects Model | Observed Inter-correlation |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Published | | | | | | | .601 (p=.438) | Yes |
| Yes | 11 | .377 | .067 | .000 | 29.005 (p=.001) | 65.523 | | |
| No | 2 | 1.159 | .330 | .099 | 3.683 (p=.09) | 64.681 | | |
| Study Quality | | | | | | | 4.089 (p=.129) | Yes |
| High | 7 | .587 | .164 | .000 | 18.544 (p=.005) | 67.644 | | |
| Medium | 4 | .761 | .364 | .036 | 8.266 (p=.041) | 63.077 | | |
| Low | 2 | .174 | .167 | .299 | .028 (p=.866) | .000 | | |
| Instrument Type | | | | | | | 2.513 (p=.285) | Yes |
| Researcher-created | 5 | .478 | .238 | .045 | 10.408 (p=.034) | 61.570 | | |
| Standard-Narrow | 6 | .743 | .204 | .000 | 25.583 (p=.000) | 80.456 | | |
| Standard-Broad | 2 | .031 | .420 | .941 | .0359 (p=.549) | .000 | | |
| Post Hoc Researcher Adjusted | | | | | | | 4.634 (p=.031) | Yes |
| Yes | 2 | .174 | .167 | .299 | .028 (p=.866) | .000 | | |
| No | 11 | .675 | .162 | .000 | 34.863 (p=.000) | 71.136 | | |
| Construct | | | | | | | 2.503 (p=.286) | Yes |
| Cooperative | 2 | .105 | .315 | .738 | 10.283 (p=.068) | 51.378 | | |
| Collaborative | 6 | .506 | .157 | .001 | .005 (p=.942) | .000 | | |
| Peer Tutoring | 5 | .837 | .348 | .016 | 18.721 (p=.001) | 78.634 | | |
| Component | | | | | | | 1.035 (p=.309) | Yes |
| Yes | 4 | .388 | .172 | .024 | 7.406 (p=.06) | 59.494 | | |
| No | 9 | .651 | .193 | .001 | 24.013 (p=.002) | 66.684 | | |
| Setting | | | | | | | .380 (p=.538) | Yes |
| EFL | 5 | .691 | .269 | .010 | 17.426 (p=.002) | 77.045 | | |
| ESL | 8 | .498 | .161 | .002 | 17.332 (p=.015) | 59.612 | | |
| Segregated | | | | | | | 5.412 (p=.020) | Yes |
| Yes | 2 | .230 | .088 | .009 | .966 (p=.326) | .000 | | |
| Other (Not and Unknown) | 11 | .686 | .175 | .000 | 26.944 (p=.003) | 62.866 | | |
| Language of Instruction | | | | | | | .681 (p=.711) | Yes |
| L1 (L1-only and bilingual) | 7 | .649 | .186 | .000 | 24.282 (p=.000) | 75.291 | | |
| L2 Only | 4 | .427 | .215 | .047 | 2.36 (p=.501) | .000 | | |
| Unknown | 2 | .702 | .535 | .189 | 9.946 (p=.002) | 89.946 | | |
| Culturally Relevant | | | | | | | .739 (p=.691) | Yes |
| Yes | 3 | .413 | .196 | .035 | 7.405 (p=.025) | 72.933 | | |
| No | 5 | .572 | .264 | .03 | 6.701 (p=.153) | 40.309 | | |
| Not U.S.A. | 5 | .691 | .269 | .01 | 17.426 (p=.002) | 77.045 | | |
| Grade Level | | | | | | | .240 (p=.624) | Yes |
| Elementary | 9 | .628 | .164 | .000 | 25.846 (p=.001) | 69.047 | | |
| Other | 4 | .454 | .314 | .148 | 11.320 (p=.010) | 73.499 | | |
| SES | | | | | | | .194 (p=.908) | Yes |
| Low | 5 | .518 | .193 | .007 | 6.821 (p=.146) | 41.36 | | |
| High | 2 | .788 | .582 | .176 | 3.099 (p=.078) | 67.731 | | |
| Unknown | 6 | .550 | .202 | .007 | 19.731 (p=.001) | 74.659 | | |
| Student Hispanic | | | | | | | .541 (p=.462) | |
| Hispanic | 7 | .472 | .181 | .009 | 15.801 (p=.015) | 62.027 | | |
| Other (Asian, Arabic, Bangladeshi, Israeli) | 6 | .68 | .217 | .002 | 17.535 (p=.004) | 71.486 | | |
| Student Asian | | | | | | | .139 (p=.71) | |
| Asian | 3 | .696 | .376 | .064 | 7.206 (p=.027) | 72.244 | | |
| Other | 10 | .545 | .15 | .000 | 28.272 (p=.001) | 68.166 | | |

As indicated in the Q-between column, only two moderators were statistically significant at the p=.05 level: post hoc researcher adjusted and segregated. In cases where post-test effect sizes were unadjusted for pre-test differences by authors in the original study reports, the researcher of this meta-analysis adjusted post-test effect sizes post hoc. In these cases, post hoc adjustments resulted in much smaller effect sizes on average (G=.174) than in the cases that were not adjusted post hoc (G=.675). This finding indicates that methodological rigor and care in synthesizing previous research can exert a large influence on reported results. The other significant moderator of the effectiveness of peer-mediated learning for improving oral language outcomes was whether or not the intervention occurred in settings where ELLs were segregated from their non-ELL peers. Effect sizes for ELLs in segregated settings were much lower (G=.230) than those in settings that were not segregated or for which segregation was unreported (G=.636). Some care should be taken when interpreting this result, in particular. First, the combination of non-segregated settings with ambiguous settings (i.e., researchers did not report if segregated) presents some conceptual challenges in interpreting the results because some of the ambiguous settings may very well have been segregated in practice. Second, the number of studies that reported that they were segregated was relatively small (n=2), and so the estimate is not as precise as it could have been.
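The p values attached to the two significant Q-between statistics can be verified with a short calculation. For a moderator with two sub-groups, Q-between is compared against a chi-square distribution with one degree of freedom, and for that special case the upper tail probability has a closed form in terms of the complementary error function. The Python sketch below covers only the one-degree-of-freedom case; the function name is hypothetical.

```python
import math


def chi_square_p_value_df1(q: float) -> float:
    """Upper-tail probability of a chi-square statistic with one degree of freedom."""
    return math.erfc(math.sqrt(q / 2))


# Q-between values reported for the two binary moderators discussed above.
p_adjusted = chi_square_p_value_df1(4.634)    # post hoc researcher adjusted
p_segregated = chi_square_p_value_df1(5.412)  # segregated
print(round(p_adjusted, 3), round(p_segregated, 3))
```

Both results agree with the tabled p values to three decimal places; moderators with more than two sub-groups would require the general chi-square distribution instead.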

For all other variables, differences in mean effect sizes were evident across variables, but none proved to be significant moderators. Because the sample size for oral language outcomes is relatively small, this general lack of statistically significant moderators likely represents a lack of statistical power to detect meaningful differences. Thus, some of these moderators might prove significant if additional studies were included, and future meta-analyses may benefit from larger sample sizes as the field continues to produce experimental and quasi-experimental evaluations of peer-mediated learning.

Written Language Outcomes

Summary of Included Studies and Main Effects

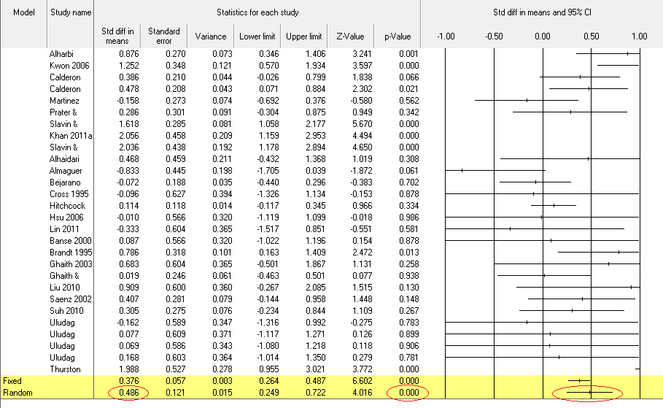

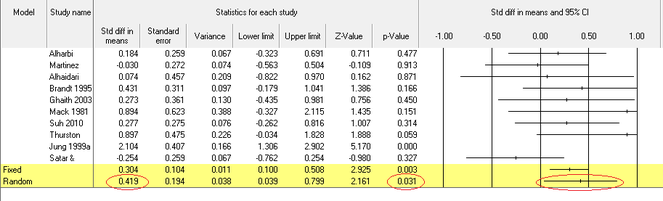

A random effects model of the uncorrected and un-Winsorized data provided a mean effect size estimate for the twenty-eight written language outcomes of .551 (SE=.111, p<.001); however, after adjustments for outliers, pre-test differences, and cluster randomization, the mean effect size estimate decreased and the standard error increased slightly (.486, SE=.121, p<.001), suggesting that outliers and cluster randomization had a noticeable impact on the original estimates. The adjusted distribution of written language outcomes is illustrated by the forest plot in Fig.
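The reported p-values can be related to the estimates and standard errors with a simple z-test. The sketch below assumes a normal approximation with a two-sided test; the function name `z_test` and the 95% confidence intervals it prints are illustrative and are not taken from the original analysis.

```python
import math


def z_test(estimate: float, se: float):
    """Return (z, two-sided p-value, 95% CI) under a normal approximation."""
    z = estimate / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    half_width = 1.96 * se
    return z, p_value, (estimate - half_width, estimate + half_width)


# Mean effect sizes and standard errors reported in the text.
for label, g, se in [("unadjusted", 0.551, 0.111), ("adjusted", 0.486, 0.121)]:
    z, p, (low, high) = z_test(g, se)
    print(f"{label}: z={z:.2f}, p={p:.5f}, 95% CI=({low:.3f}, {high:.3f})")
```

Under these assumptions both estimates have p-values below .001, consistent with the values reported above.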

The distribution of effect sizes for written language outcomes was even more heterogeneous than the distribution of oral language outcomes. The Q statistic (97.135, df=27, p<.001) indicates that the observed effect sizes vary more than would be expected from sampling error alone, and the I² statistic (72.204) indicates that approximately 72% of the observed variance in effect sizes exists between studies. Together, these statistics suggest that moderator analyses might provide insight into what factors influence the effectiveness of peer-mediated learning for ELLs for written language outcomes.
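The link between the two heterogeneity statistics can be checked directly. The sketch below assumes the common definition I² = 100 × (Q − df) / Q, floored at zero; the function name `i_squared` is illustrative and is not taken from the original analysis.

```python
def i_squared(q: float, df: int) -> float:
    """Return I^2 as a percentage, assuming I^2 = 100 * (Q - df) / Q, floored at 0."""
    if q <= 0:
        return 0.0
    return max(0.0, 100.0 * (q - df) / q)


# Q and df reported in the text for the written language outcomes.
print(round(i_squared(97.135, 27), 3))
```

Applied to the reported Q and degrees of freedom, this gives a value of about 72.2, in line with the I² statistic in the text.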

Publication Bias for Written Language Outcomes

Recoding the type-of-publication variable as a dummy variable (1=published, 0=unpublished) indicated that 64.3% of the included sample were unpublished (i.e., technical reports and dissertations), while the other 35.7% were published. The mean effect size for published studies (.442, SE=.24) is only slightly smaller than the mean effect size for unpublished studies (.524, SE=.142). The difference between the mean effect sizes, -.082, provides a crude estimate of the upper bound of potential publication bias.
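The comparison of published and unpublished means can be sketched as a two-group test. The example below assumes the two subgroup estimates are independent and treats the squared z-statistic as a Q-between value with one degree of freedom; the function name is illustrative, and the original software may have computed Q-between differently.

```python
import math


def q_between_two_groups(g1: float, se1: float, g2: float, se2: float):
    """Compare two independent subgroup means.

    Returns (difference, Q-between, p-value). With two groups, Q-between is
    z**2 and is referred to a chi-square distribution with 1 degree of freedom.
    """
    diff = g1 - g2
    z = diff / math.sqrt(se1 ** 2 + se2 ** 2)
    q_between = z ** 2
    # For 1 df, the chi-square upper tail equals the two-sided normal tail.
    p_value = math.erfc(math.sqrt(q_between / 2.0))
    return diff, q_between, p_value


# Published (.442, SE=.24) versus unpublished (.524, SE=.142), as reported in the text.
diff, q_b, p = q_between_two_groups(0.442, 0.24, 0.524, 0.142)
print(f"difference={diff:.3f}, Q-between={q_b:.3f}, p={p:.3f}")
```

Under these assumptions the result is close to the Q-between of .086 (p=.770) reported for the Published moderator in the summary table of moderator analyses.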

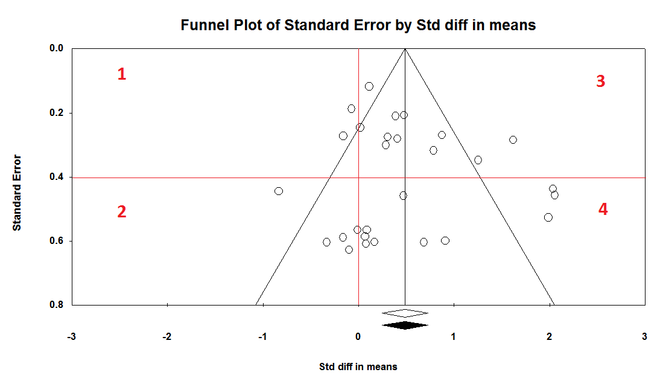

The funnel plot in Fig.

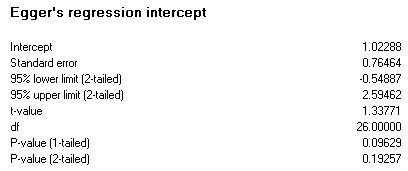

We see in Fig.

In conclusion, these analyses provide no evidence that publication bias is likely for the distribution of studies reporting written language outcomes. Additionally, several studies in the sample have null or negative effect size estimates; thus, it seems unlikely that the literature search failed to uncover studies that went unpublished because they failed to yield significantly positive results. As indicated by the funnel plot and the difference in means between published and unpublished studies, the possible impact of studies lurking in the “file drawer” on the mean effect size estimates appears relatively minor in this case.

Moderator Analyses for Written Language Outcomes

The distribution of written language effect sizes was heterogeneous, as indicated by the Q and I² statistics; consequently, we might expect post hoc examination of moderator variables to uncover some statistically significant moderators. The sample is large enough (n=28) to be sufficiently powered for meta-regression analysis of the partial contributions of at least a few (e.g., 2-3) independent variables. As before, analysis of moderators is primarily motivated by a priori questions of interest, and findings remain qualified by the recognition that small differences may be difficult to detect with the size of the sample employed and that confounding and lurking variables may temper any observed differences between sub-groups. Table

Summary of Moderator Analyses for Written Language Outcomes

| Moderator (Sub-group) | Number in sub-group | Effect Size Point-estimate | Standard Error of estimate | p-value of estimate | Q-within of Sub-group | I² of Sub-group | Q-between in Random Effects Model | Observed Inter-correlation |
|---|---|---|---|---|---|---|---|---|
| Published | | | | | | | .086 (p=.770) | Yes |
| Yes | 10 | .442 | .240 | .065 | 38.89 (p=.000) | 76.858 | | |
| No | 18 | .524 | .142 | .000 | 55.851 (p=.000) | 60.562 | | |
| Study Quality | | | | | | | 10.635 (p=.005) | Yes |
| High | 17 | .637 | .144 | .000 | 56.534 (p=.000) | 71.7 | | |
| Medium | 8 | .328 | .311 | .291 | 31.991 (p=.000) | 78.119 | | |
| Low | 3 | -.095 | .173 | .582 | .170 (p=.981) | .000 | | |
| Instrument Type | | | | | | | 1.107 (p=.575) | Yes |
| Researcher-created | 17 | .411 | .147 | .005 | 35.743 (p=.003) | 55.236 | | |
| Standard-Narrow | 7 | .338 | .168 | .033 | 50.012 (p=.000) | 88.003 | | |
| Standard-Broad | 4 | .746 | .420 | .045 | 5.677 (p=.128) | 47.156 | | |
| Post Hoc Researcher Adjusted | | | | | | | 9.058 (p=.003) | Yes |
| Yes | 3 | -.095 | .173 | .583 | .170 (p=.918) | .000 | | |
| No | 25 | .554 | .129 | .000 | 88.612 (p=.000) | 72.916 | | |
| Construct | | | | | | | 1.391 (p=.499) | Yes |
| Cooperative | 14 | .632 | .168 | .000 | 64.105 (p=.000) | 79.721 | | |
| Collaborative | 10 | .376 | .162 | .02 | 9.94 (p=.355) | 9.460 | | |
| Peer Tutoring | 4 | .310 | .414 | .454 | 19.234 (p=.000) | 84.403 | | |
| Component | | | | | | | 1.07 (p=.301) | Yes |
| Yes | 12 | .633 | .184 | .001 | 30.714 (p=.001) | 64.186 | | |
| No | 16 | .385 | .154 | .012 | 55.422 (p=.000) | 72.935 | | |
| Setting | | | | | | | .023 (p=.879) | Yes |
| EFL | 17 | .504 | .170 | .003 | 45.017 (p=.000) | 64.458 | | |
| ESL | 11 | .465 | .184 | .012 | 51.969 (p=.000) | 80.758 | | |
| Segregated | | | | | | | .504 (p=.478) | Yes |
| Yes | 5 | .373 | .135 | .006 | 5.755 (p=.218) | 30.942 | | |
| Other (Not and Unknown) | 23 | .518 | .155 | .001 | 91.38 (p=.000) | 75.952 | | |

|

Language of Instruction |

|

|

|

|

|

|

.274 (p=.872) |

Yes |

|

L1 (L1-only and bilingual) |

9 |