|

Research Ideas and Outcomes : Guidelines

|

|

Corresponding author: Lyubomir Penev (penev@pensoft.net)

Received: 26 Feb 2017 | Published: 28 Feb 2017

© 2018 Lyubomir Penev, Daniel Mietchen, Vishwas Chavan, Gregor Hagedorn, Vincent Smith, David Shotton, Éamonn Ó Tuama, Viktor Senderov, Teodor Georgiev, Pavel Stoev, Quentin Groom, David Remsen, Scott Edmunds

This is an open access article distributed under the terms of the Creative Commons Attribution License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Citation: Penev L, Mietchen D, Chavan V, Hagedorn G, Smith V, Shotton D, Ó Tuama É, Senderov V, Georgiev T, Stoev P, Groom Q, Remsen D, Edmunds S (2017) Strategies and guidelines for scholarly publishing of biodiversity data. Research Ideas and Outcomes 3: e12431. https://doi.org/10.3897/rio.3.e12431

|

|

Abstract

The present paper describes policies and guidelines for scholarly publishing of biodiversity and biodiversity-related data, elaborated and updated during the Framework Program 7 EU BON project, on the basis of an earlier version published on Pensoft's website in 2011. The document discusses some general concepts, including a definition of datasets, incentives to publish data and licenses for data publishing. Further, it defines and compares several routes for data publishing, namely as (1) supplementary files to research articles, which may be made available directly by the publisher, or (2) published in a specialized open data repository with a link to it from the research article, or (3) as a data paper, i.e., a specific, stand-alone publication describing a particular dataset or a collection of datasets, or (4) integrated narrative and data publishing through online import/download of data into/from manuscripts, as provided by the Biodiversity Data Journal.

The paper also contains detailed instructions on how to prepare and peer review data intended for publication, listed under the Guidelines for Authors and Reviewers, respectively. Special attention is given to existing standards, protocols and tools to facilitate data publishing, such as the Integrated Publishing Toolkit of the Global Biodiversity Information Facility (GBIF IPT) and the DarwinCore Archive (DwC-A).

A separate section describes most leading data hosting/indexing infrastructures and repositories for biodiversity and ecological data.

Keywords

biodiversity data publishing, data publishing licenses, Darwin Core, Darwin Core Archive, data re-use, data repository

Data Publishing in a Nutshell

Introduction

Data publishing in this digital age is the act of making data available on the Internet, so that they can be downloaded, analysed, re-used and cited by people and organisations other than the creators of the data (

- Data hosting, long-term preservation and archiving

- Documentation and metadata

- Citation and credit to the data authors

- Licenses for publishing and re-use

- Data interoperability standards

- Format of published data

- Software used for creation and retrieval

- Dissemination of published data

The present guidelines are based on an earlier version published in PDF on Pensoft's website in 2011 (

The FORCE11 group dedicated to facilitating change in knowledge creation and sharing, recognising that data should be valued as publisheable and citable products of research, has developed a set of principles for publishing and citing such data. The FAIR Data Publishing Group formulated the following four FAIR principles of fata publishing (

- Data should be Findable

- Data should be Accessible

- Data should be Interoperable

- Data should be Re-usable.

A key outcome of FORCE11 is the Joint Declaration of Data Citation Principles (see also

- Importance: Data should be considered legitimate, citable products of research. Data citations should be accorded the same importance in the scholarly record as citations of other research objects, such as publications.

- Credit and Attribution: Data citations should facilitate giving scholarly credit and normative and legal attribution to all contributors to the data, recognizing that a single style or mechanism of attribution may not be applicable to all data.

- Evidence: In scholarly literature, whenever and wherever a claim relies upon data, the corresponding data should be cited.

- Unique Identification: A data citation should include a persistent method for identification that is machine actionable, globally unique, and widely used by a community.

- Access: Data citations should facilitate access to the data themselves and to such associated metadata, documentation, code, and other materials, as are necessary for both humans and machines to make informed use of the referenced data.

- Persistence: Unique identifiers — and metadata describing the data and its disposition — should persist, even beyond the lifespan of the data they describe.

- Specificity and Verifiability: Data citations should facilitate identification of, access to, and verification of the specific data or datum that support a claim. Citations or citation metadata should include information about provenance and permanence sufficient to facilitate verfiying that the specific timeslice, version and/or granular portion of data retrieved subsequently is the same as was originally cited.

- Interoperability and Flexibility: Data citation methods should be sufficiently flexible to accommodate the variant practices among communities, but should not differ so much that they compromise interoperability of data citation practices across communities.

The Research Data Alliance (RDA) promotes the open sharing of data by building upon the underlying social and technical infrastructure. Established in 2013 by the European Union, the National Science Foundation and the National Institute of Standards and Technology (USA) as well as the Department of Innovation (Australia), it has grown to include some 4,200 members from 110 countries who collaborate through Work and Interest Groups "to develop and adopt infrastructure that promotes data-sharing and data-driven research, and accelerate the growth of a cohesive data community that integrates contributors across domain, research, national, geographical and generational boundaries" (

- Data Description Registry Interoperability Model

- Persistent Identifier Type Registry

- Workflows for Research Data Publishing: Models and Key Components

- Bibliometric Indicators for Data Publishing

- Dynamic Data Citation Methodology

- Repository Audit and Certification Catalogues

One RDA output, the Scholix Inititive, under the RDA/WDS (ICSU World Data System) Publishing Data Services Work Group is of particular relevance, as it seeks to develop an interoperability framework for exchanging information about the links between scholarly literature and data, i.e., what data underpins literature and what literature references data.

Within RDA, a Biodiversity Data Integration Interest Group has been established, which aims to "increase the effectiveness of biodiversity e-Infrastructures by promoting the adoption of common tools and services establishing data interoperability within the biodiversity domain, enabling the convergence on shared terminology and routines for assembling and integrating biodiversity data."

With regard to biodiversity, some recently published papers emphasise the importance of publishing of biodiversity data (

The EU BON project funded by the European Union's Framework Program Seven (FP7) (Building the European Biodiversity Observation Network, grant agreement ENV30845) was launched to contribute towards the achievement of these challenging tasks within a much wider global initiative, the Group on Earth Observations Biodiversity Observation Network (GEO BON), which itself is a part of the Group of Earth Observation System of Systems (GEOSS). A key feature of EU BON is the delivery of near-real-time data, both from on-ground observation and remote sensing, to the various stakeholders to enable greater interoperability of different data layers and systems, and provide access to improved analytical tools and services; furthermore, EU BON is supporting biodiversity science-policy interfaces, facilitate political decisions for sound environmental management (

The present paper outlines the strategies and guidelines needed to support the scholarly publishing and dissemination of biodiversity data, that is publishing through the academic journal networks.

What Is a Dataset

A dataset is understood here as a digital collection of logically connected facts (observations, descriptions or measurements), typically structured in tabular form as a set of records, with each record comprising a set of fields, and recorded in one or more computer data files that together comprise a data package. Certain types of research datasets, e.g., a video recording of animal behaviour, will not be in tabular form, although analyses of such recordings may be. Within the domain of biodiversity, a dataset can be any discrete collection of data underlying a paper – e.g., a list of all species occurrences published in the paper, data tables from which a graph or map is produced, digital images or videos that are the basis for conclusions, an appendix with morphological measurements, or ecological observations.

More generally, with the development of XML-based publishing technologies, the research and publishing communities are coming to a much wider definition of data, proposed in the BioMed Central (BMC) position statement on open data: "the raw, non-copyrightable facts provided in an article or its associated additional files, which are potentially available for harvesting and re-use" (

As these examples illustrate, while the term "dataset" is convenient and widely used, its definition is vague. Data repositories such as Dryad, wishing for precision, do not use the term "dataset". Instead, they describe data packages to which metadata and unique identifiers are assigned. Each data package comprises one or more related data files, these being data-containing digital files in defined formats, to which unique identifiers and metadata are also assigned. Nevertheless, the term "dataset" is used below, except where a more specific distinction is required.

For practical reasons, we propose a clear distinction between static data that represent specific completed compilations of data upon which the analyses and conclusions of a given scientific paper may be based, and curated data that belong to a large data collection (usually called a "database") with ongoing goals and curation, for example the various bioinformatics databases that curate ever growing amounts of nucleotide sequences (

Curated data, on the other hand, are usually hosted on external servers or in data hosting centres. A primary goal of the data publishing process in this case is to guarantee that these data are properly described, up to date, available to others under appropriate licensing schemes, peer-reviewed, interoperable, and where appropriate linked from a research article or a data paper at the time of publication. Especially in cases where the long-term viability of the curated project may be insecure (e.g. in the case of grant funded projects) (

Why Publish Data

Data publishing has become increasingly important and already affects the policies of the world's leading science funding frameworks and organizations — see for example the NSF Data Management Plan Requirements, the data management policies of the National Institutes of Health (NIH), Wellcome Trust, or the Riding the Wave (How Europe Can Gain From the Rising Tide of Scientific Data) report submitted to the European Commission in October 2010. More generally, the concept of "open data" is described in the Protocol for Implementing Open Access Data, the Open Knowledge/Data Definition, the Panton Principles for Open Data in Science, and the Open Data Manual. There are several incentives for authors and institutions to publish data (after

- There is a widespread conviction that data produced using public funds should be regarded as a common good, and should be openly published and made available for inspection, interpretation and re-use by third parties.

- Open data increases transparency and the overall quality of research; published datasets can be re-analyzed and verified by others.

- Published data can be cited and re-used in the future, either alone or in association with other data.

- Open data can be integrated with other datasets across both space and time.

- Data integration increases recognition and opportunities for collaboration.

- Open data increases the potential for interdisciplinary research, and for re-use in new contexts not envisaged by the data creator.

- Needless duplication of data-collecting efforts and associated costs will be reduced.

- Published data can be indexed and made discoverable, browsable and searchable through internet services (e.g. Web search engines) or more specific infrastructures (e.g., GBIF for biodiversity data).

- Collection managers can trace usage and citations of digitized data from their collections.

- Data creators, and their institutions and funding agencies, can be credited for their work of data creation and publication through the conventional channels of scholarly citation; priority and authorship is achieved in the same way as with a publication of a research paper.

- Datasets and their metadata, and any related data papers, may be inter-linked into research objects, to expedite and mutually extend their dissemination, to the benefit of the authors, other scientists in their fields, and society at large.

- Published data may be structured as "Linked Data", by which term is meant data accessible using RDF, the Resource Description Framework, one of the fundamentals of the semantic web. Since RDF descriptions are based on publicly available ontology terms, ideally derived from a limited number of complementary ontologies, this permits automated data integration, since data elements from different sources have built-in syntactic and semantic alignment.

How to Publish Data

There are four main routes for scholarly publication of data, most of which are available with various journals and publishers:

- Supplementary files underpinning a research paper and available from the journal's website.

- Data hosted at external repositories but linked back from the research article it underpins.

- Stand-alone description of the data resource in the form of scholarly publication (e.g., Data Paper, or Data Note - see, for example,

Newman and Corke 2009 ,Chavan and Penev 2011 , andCandela et al. 2015 ). - Data published within the article text and downloadable from there in the form of structured data tables or as a result of text mining. This "integrated data publishing" approach has been implemented by the Biodiversity Data Journal (BDJ), which was developed in the course of the EU funded project ViBRANT (

Smith et al. 2013 ). Other examples of a similar approach are executable code published in an article (Veres and Adolfsson 2011 ), or linking of a standard article to an integrated external platform that hosts all data associated with the article, and provides additional data analysis tools and computing resources (an example for that are GigaDB and the GigaScience journal - seeEdmunds et al. 2016 ), or various kinds of implementing 3D visualisations on the basis of MicroCT files (Stoev et al. 2013 ).

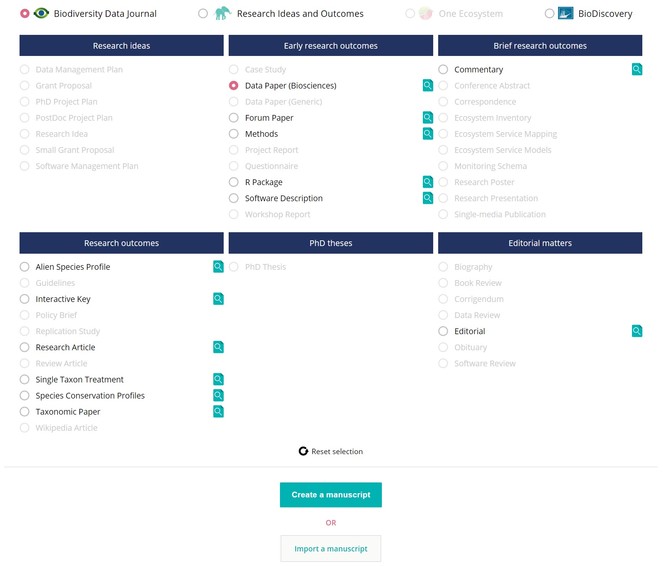

Within these main data publishing modes, Pensoft developed a specific set of applications designed to meet the needs of the biodiversity community. Most of these were implemented in the Biodiversity Data Journal and its associated ARPHA Writing Tool (AWT):

- Import of primary biodiversity data from Darwin Core compliant spreadsheets, or manually via a Darwin Core editor, into manuscripts and their consequent publication in a structured and downloadable format (

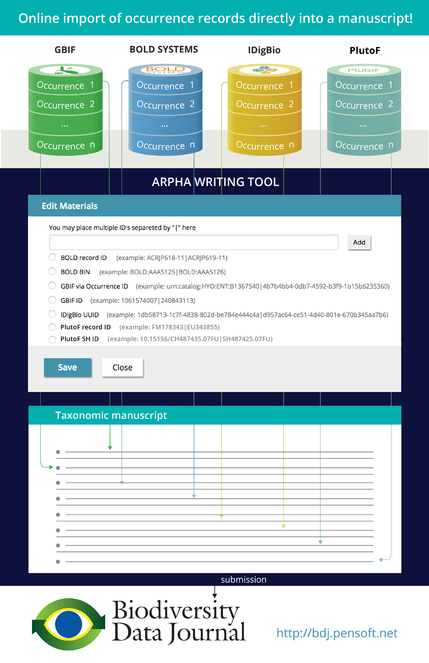

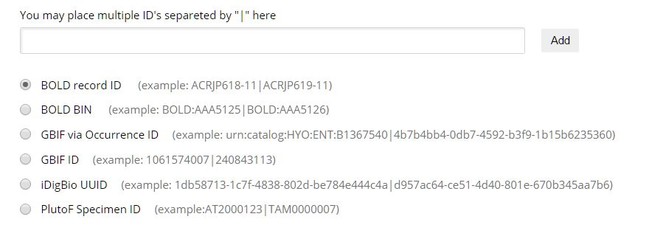

Smith et al. 2013 ). - Direct online import of Darwin Core compliant primary biodiversity data from GBIF, Barcode of Life, iDigBio, and PlutoF into manuscripts through web services and their consequent publication in a structured and downloadable format (

Senderov et al. 2016 ). - Import of multiple occurrence records of voucher specimens associated with a particular Barcode Index Number (BIN) (

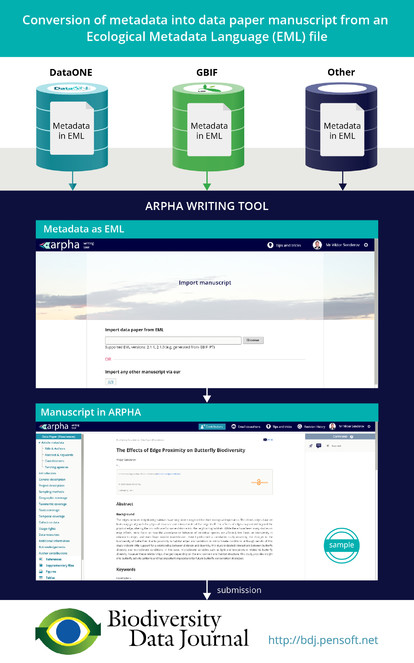

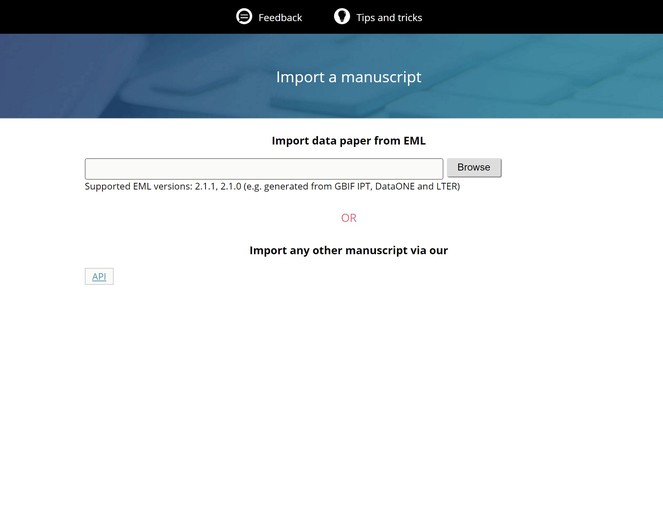

Ratnasingham and Hebert 2013 ) from the Barcode of Life. - Automated generation of data paper manuscripts from Ecological Metadata Language (EML) metadata files stored at GBIF Integrated Publishing Toolkit (GBIF IPT), DataONE, and the Long Term Ecological Research Network (LTER) (

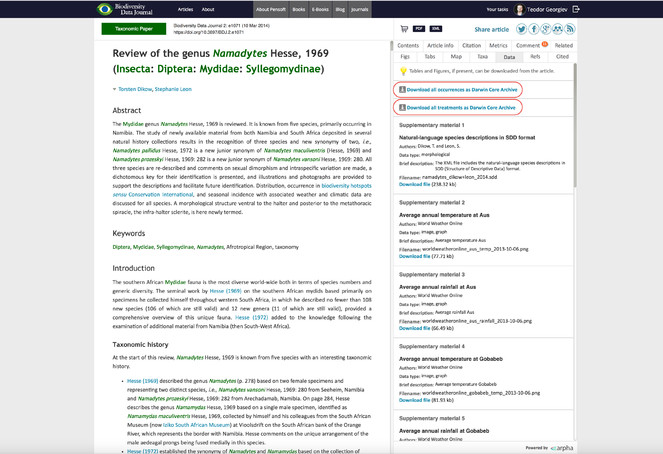

Senderov et al. 2016 , see also Pensoft's blog for details). - Automated export of the occurrence data published in BDJ into Darwin Core Archive (DwC-A) format (

Wieczorek et al. 2012 ) and its consequent ingestion by GBIF. The DwC-A is freely available for download from each article's webpage that contains occurrence data. - Automated export of the taxonomic treatments published in BDJ into Darwin Core Archive. The DwC-A is freely available for download from each article that contains taxonomic treatments data.

- Novel article types in the ARPHA Writing Tool and its associated journals (Biodiversity Data Journal, Research Ideas and Outcomes (RIO Journal), and One Ecosystem): Monitoring Schema, IUCN Red List compliant Species Conservation Profile (

Cardoso et al. 2016 ), IUCN Global Invasive Species Database (GISD) compliant Alien Species Profile, Single-media Publication, Data Management Plan, Research Idea, Grant Proposal, and others. - Nomenclatural acts modelled and developed in BDJ as different types of taxonomic treatments for plant taxonomy.

- Markup and display of biological collection codes against the Global Registry of Biological Repositories (GRBIO) vocabulary (

Schindel et al. 2016 ). - Workflow integration with the GBIF Integrated Publishing Toolkit (IPT) for deposition, publication, and permanent linking between data and articles, of primary biodiversity data (species-by-occurrence records), checklists and their associated metadata (

Chavan and Penev 2011 ). - Workflow integration with the Dryad Data Repository for deposition, publication, and permanent linking between data and articles, of datasets other than primary biodiversity data (e.g., ecological observations, environmental data, genome data and other data types) (see Pensoft blog for details).

- Automated archiving of all articles published in Pensoft's journals in the Biodiversity Literature Respository (BLR) of Zenodo on the day of publication.

Best practice recommendations

- For any form of data publishing, follow the FAIR Data Publishing Principles (

Wilkinson et al. 2016 ). - Follow the Joint Declaration of Data Citation Principles for citation of data in scholarly articles (

Altman et al. 2015 ). - Deposition of data in an established international repository is always to be preferred to supplementary files published on a journal's website.

- Smaller data files, especially those directly underpinning an article, should also be deposited at a data repository and linked from the article. We recommended, however these to be published also as supplementary file(s) to the related article, to ensure an additional joint preservation and presentation of the article together with its associated data.

- If a specialized and well establisdhed repository for a given kind of data exists, it should be preferred over non-specialized ones (see also section "Data Deposition in Open Repositories" below for finer detail), for example:

- Primary biodiversity data (species-by-occurrence) records should be deposited through the GBIF IPT.

- Sample-based biodiversity data (e.g., species abundances from monitoring or inventory studies) should be deposited through the GBIF IPT.

- Genomic data should be deposited at any of the three INSDC repositories (GenBank, European, Nucleotide Archive, ENA and the DNA Databank of Japan, DDBI) either directly or via an affiliated repository, e.g. Barcode of Life Data Systems (BOLD).

- Barcoding and metabarcoding data should be deposited at the Barcode of Life Data Systems (BOLD) or PlutoF.

- Metagenomic data should be deposited at EBI Metagenomics

- Protein sequence data should be deposited at UniProtKB.

- X-ray microtomography (micro-CT) scans should be deposited at Morphosource.

- Phylogenetic data should be deposited at TreeBASE.

- Heterogeneous datasets, or data packages containing various data types should be deposited in generalist repositories, for example Dryad Data Repository, Zenodo, Dataverse, or in another appropriate repository.

- Repositories not mentioned above or in the "Data Deposition in Open Repositories" section below, may be used at the discretion of the author, if they provide long-term preservation of various data types, persistent identifiers to datasets, discoverability, open access to the data, and well proven sustainablility record.

- Digital Object Identifiers (DOIs) or other persistent identifiers (e.g., "stable URIs") to the data deposited in repositories, as well as the name of the repository, should always be published in the paper using or describing that data resource.

- Exceptional cases when publication of data is not possible, or some of the data remain closed or obfuscated, should be discussed with the publisher in advance. In such cases, the authors should provide an open statement explaining why restrictions in open data publishing are needed to be put in force. The author's statement should be published together with the article.

How to Cite Data

This section originates from a draft set of Data Citation Best Practice Guidelines that has been developed for publication by David Shotton, with assistance from colleagues at Dryad and elsewhere, and from earlier papers concerning data citation mechanisms (

The well-established norm for citing genetic data, for example, is that one simply cites the GenBank identifier (accession number) in the text. Similar usage is also commonplace for items in other bioinformatics databases. The latest developments in the implementation of the data citation principles, however, strongly recommend references to data to be included in the reference lists, similarly to literature references (

For such data in data repositories, each published data package and each published data file should always be associated with a persistent unique identifier. A Digital Object Identifier (DOI) issued by DataCite, or CrossRef, should be used wherever possible. If this is not possible, the identifier should be one issued by the data repository or database, and should be in the form of a persistent and resolvable URL. As an example, the use of DOIs in the Dryad Data Repository is explained on the Dryad wiki.

Data citations may relate either to the author's own data, or to data created and published by others ("third-party data"). In the former case, the dataset may have been previously published, or may be published for the first time in association with the article that is now citing it. All these types of data should, for consistency, be cited in the same manner.

Best practice recommendations

As is the norm when citing another research article, any citation of a data publication, including a citation of one's own data, should always have two components:

- An in-text citation statement containing an in-text reference pointer that directs the reader to a formal data reference in the paper's reference list.

- A formal data reference within the article's reference list.

We recommend that the in-text citation statement also contains a separate citation of the research article in which the data were first described, if such an article exists, with its own in-text reference pointer to a formal article reference in the paper's reference list, unless the paper being authored is the one providing that first description of the data. If the in-text citation statement includes the DOI for the data (a strongly desirable practice), this DOI should always be presented as a dereferenceable URI, as shown below. Further to this, both DataCite and CrossRef recommend displaying DOIs within references as full URLs, which serve a similar function as a journal volume, issue and page number do for a printed article, and also give the combined advantages of linked access and the assurance of persistence (

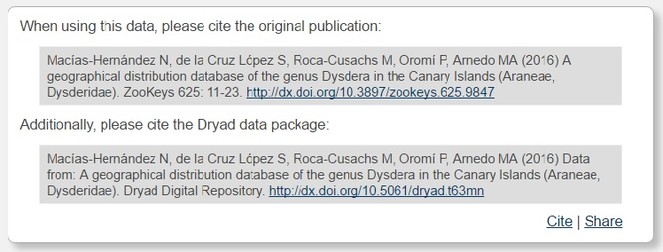

For example, Dryad recommends to cite always both the article in association with which data were published and the data themselves (Fig.

The data reference in the article's reference list should contain the minimal components recommended by the FORCE11 Data Citation Synthesis Group (

- Author(s)

- Year

- Dataset Title

- Data Repository or Archive

- Global Persistent Identifier

- Version, or Subset, and/or Access Date

These components should be presented in whatever format and punctuation style the journal specifies for its references.

The following example demonstrates in general terms what is required.

In-text citation:

“This paper uses data from the [name] data repository at https://doi.org/***** (Jones et al. 2008a), first described in Jones et al. 2008b. “

Data reference and article reference in reference list:

Jones A, Bloggs B, Smith C (2008a). <Title of data package>. <Repository name>. doi: https://doi.org/#####. [Version and/or date of access].

Jones A, Saul D, Smith C (2008b). <Title of journal article>. <Journal> <Volume>: <Pages>. doi: https://doi.org/#####.

Note that the authorship and the title of the data package may, for valid academic reasons, differ from those of the author's paper describing the data: indeed, to avoid confusion of what is being referenced, it is highly desirable that the titles of the data package and of the associated journal article are clearly different.

Requirements for data citation in Pensoft's journals

1. When referring to the author's own newly published data, cited from within the paper in which these data are first described, the citation statement and the data reference should take the following form:

- The citation statement of data deposition should be included in the body of the paper, in a separate section named Data Resources, situated after the Material and Methods section.

- In addition, the formal data reference should be included in the paper's reference list, using the recommended journal's reference format.

The following example demonstrates what is required.

In-text citation:

“The data underpinning the analysis reported in this paper were deposited in the Dryad Data Repository at https://doi.org/10.5061/dryad.t63mn (

AND/OR

"The data underpinning the analysis reported in this paper were deposited in the Global Biodiversity Information Facility (GBIF) at http://ipt.pensoft.net/resource?r=montenegrina&v=1.5 (the URI should be used as identifier only in cases when DOI is not available) (

Data reference in reference list:

Macías-Hernández N, de la Cruz López S, Roca-Cusachs M, Oromí P, Arnedo MA (2016) Data from: A geographical distribution database of the genus Dysdera in the Canary Islands (Araneae, Dysderidae). Dryad Digital Repository. https://doi.org/10.5061/dryad.t63mn [Version and/or date of access].

AND/OR

Feher Z, Szekeres M (2016): Geographic distibution of the rock-dwelling door-snail genus Montenegrina Boettger, 1877 (Mollusca, Gastropoda, Clausiliidae). v1.5. ZooKeys. Dataset/Occurrence deposited in the GBIF. doi: https://doi.org/10.15468/###### OR http://ipt.pensoft.net/resource?r=montenegrina&v=1.5, (the latter to be used in cases when DOI is not available). [Version and/or date of access].

2. When acknowledging re-use in the paper of previously published data (including the author's own data) that is associated with another published journal article, the citation and reference should take the same form, except that the full correct DOI should be employed, and that the journal article first describing the data should also be cited:

- A statement of usage of the previously published data, with citation of the data source(s) and of the related journal article(s), should be placed in a separate section named Data Resources, situated after the Material and Methods section.

- In addition, the formal data reference and a formal reference to the related journal article should be included in the paper's reference list, using the recommended journal's reference format.

The following example demonstrates what is required.

In-text citation:

“The data underpinning this analysis were obtained from the Dryad Data Repository at https://doi.org/10.5061/dryad.t63mn (

Data reference and article reference in reference list:

Macías-Hernández N, de la Cruz López S, Roca-Cusachs M, Oromí P, Arnedo MA (2016) A geographical distribution database of the genus Dysdera in the Canary Islands (Araneae, Dysderidae). ZooKeys 625: 11-23. https://doi.org/10.3897/zookeys.625.9847.

Macías-Hernández N, de la Cruz López S, Roca-Cusachs M, Oromí P, Arnedo MA (2016) Data from: A geographical distribution database of the genus Dysdera in the Canary Islands (Araneae, Dysderidae). Dryad Digital Repository. https://doi.org/10.5061/dryad.t63mn [Version and/or date of access].

3. When acknowledging re-use of previously published data (including the author's own data) that has NO association with a published research article, the same general format should be adopted, although a reference to a related journal article clearly cannot be included:

- A statement of usage of previously published data, with citation of the data source(s), should be placed in a separate section named Data Resources, situated after the Material and Methods section.

- In addition, the formal data reference should be included in the paper's reference list, using the recommended journal's reference format for data citation.

The following real example demonstrates what is required.

In-text citation:

“The present paper used data deposited by the Zoological Institute of the Russian Academy of Sciences in the Global Biodiversity Information Facility (GBIF) at https://doi.org/10.15468/c3eork (

Data reference in reference list:

Volkobitsh M, Glikov A, Khalikov R (2017) Catalogue of the type specimens of Polycestinae (Coleoptera: Buprestidae) from research collections of the Zoological Institute, Russian Academy of Sciences. Zoological Institute, Russian Academy of Sciences, St. Petersburg, deposited in GBIF. https://doi.org/10.15468/C3EORK. [Version and/or date of access].

Data Publishing Policies

General Policies for Biodiversity data

One of the basic postulates of the Panton Principles is that data publishers should define clearly the license or waiver under which the data are published, so re-use rights are clear to potential users. They recommend use of the most liberal licenses, or of public domain waivers, to prevent legal and operational barriers for data sharing and integration. For clarity, we list here the short version of the Panton Principles:

- When publishing data, make an explicit and robust statement of your wishes regarding re-use.

- Use a recognized waiver or open publication license that is appropriate for data.

- If you want your data to be effectively used and added to by others, it should be fully "open" as defined by the Open Knowledge/Data Definition – in particular, non-commercial and other restrictive clauses should not be used.

- Explicit dedication of data underlying published science into the public domain via PDDL or CC-Zero is strongly recommended and ensures compliance with both the Science Commons Protocol for Implementing Open Access Data and the Open Knowledge/Data Definition.

A domain-specific implementation of the open access principles for biodiversity data was elaborated during the EU project pro-iBiosphere and resulted in the widely endorsed Bouchout Declaration for Open Biodiversity Knowledge Management. Further, the EU project EU BON analysed the current copyright legislation and data policies in various European countries and elaborated a set of best practice recommendations (

- Promoting the understanding that primary biodiversity data are facts and therefore NOT a subject of copyright; they belong to the public domain, independent of their source;

- Requiring explicit statements that clearly place biodiversity data in the public domain, by applying a standardised waiver for any eventual copyright or database protection right, for example Creative Commons Zero (CC0). Some countries may still need special licenses for data irrespective of its source (cf. https://github.com/unitedstates/licensing/issues/31).

- To the maximum possible extent, rendering printed materials, PDFs, and other non-machine-actionable biodiversity data and narratives, into machine-readable and harvestable formats.

Data Publishing Licenses

In practice, a variety of waivers and licenses exist that are specifically designed for and appropriate for the treatment of data, as listed in Table

Data publishing licenses recommended by Pensoft.

| Data publishing license | URL |

| Open Data Commons Attribution License | http://www.opendatacommons.org/licenses/by/1.0/ |

| Creative Commons CC-Zero Waiver | http://creativecommons.org/publicdomain/zero/1.0/ |

| Open Data Commons Public Domain Dedication and License | http://www.opendatacommons.org/licenses/pddl/1-0/ |

The default data publishing license used by Pensoft is the Open Data Commons Attribution License (ODC-By), which is a license agreement intended to allow users to freely share, modify, and use the published data(base), provided that the data creators are attributed (cited or acknowledged).

As an alternative, the other licenses or waivers, namely the Creative Commons CC0 waiver (also cited as “CC-Zero” or “CC-zero”) and the Open Data Commons Public Domain Dedication and Licence (PDDL), are also STRONGLY encouraged for use in Pensoft journals. According to the CC0 waiver, "the person who associated a work with this deed has dedicated the work to the public domain by waiving all of his or her rights to the work worldwide under copyright law, including all related and neighbouring rights, to the extent allowed by law. You can copy, modify, distribute and perform the work, even for commercial purposes, all without asking permission."

Publication of data under a waiver such as CC0 avoids potential problems of "attribution stacking" when data from several (or possibly many) sources are aggregated, remixed or otherwise re-used, particularly if this re-use is undertaken automatically. In such cases, while there is no legal requirement to provide attribution to the data creators, the norms of academic citation best practice for fair use still apply, and those who re-use the data should reference the data source, as they would reference others' research articles.

The Attribution-ShareAlike Open Data Commons Open Database License (OdbL) is NOT recommended for use in Pensoft's journals, because it is very difficult to comply with the share-alike requirement in scholarly publishing. Nonetheless, it may be used as an exception in particular cases.

Many widely recognized open access licenses are intended for text-based publications to which copyright applies, and are not intended for, and are not appropriate for, data or collections of data which do not carry copyright. Creative Commons licenses apart from CC-Zero waiver (e.g., CC-BY, CC-BY-NC, CC-BY-NC-SA, CC-BY-SA, etc.) as well as GFDL, GPL, BSD and similar licenses widely used for open source software, are NOT appropriate for data, and their use for data associated with Pensoft journal articles is strongly discouraged.

Authors should explicitly inform the publisher if they want to publish data associated with a Pensoft journal article under a license that is different from the Open Data Commons Attribution License (ODC-By), Creative Commons CC0, or Open Data Commons Public Domain Dedication and Licence (PDDL).

Any set of data published by Pensoft, or associated with a journal article published by Pensoft, must always clearly state its licensing terms in both a human-readable and a machine-readable manner.

Where data are published by a public data repository under a particular license, and subsequently associated with a Pensoft research article or data paper, Pensoft journals will accept that repository license as the default for the published datasets.

Images, videos and similar "artistic works" are usually covered by copyright "automatically", unless specifically placed in the public domain by use of a public domain waiver such as CC0. Where copyright is retained by the creator, such multimedia entities can still be published under an open data attribution license, while their metadata can be published under a CC0 waiver.

Databases can contain a wide variety of types of content (images, audiovisual material, and sounds, for example, as well as tabular data, which might all be in the same database), and each may have a different license, which must be separately specified in the content metadata. Databases may also automatically accrue their own rights, such as the European Union Database Right, although no equivalent database right exists in the USA. In addition, the contents of a database, or the database itself, can be covered by other rights not addressed here (such as private contracts, trademark over the name, or privacy rights / data protection rights over information in the contents). Thus, authors are advised to be aware of potential problems for data re-use from databases, and to clear other rights before engaging in activities not covered by the respective license.

Data Deposition in Open Repositories

General Information

Open data repositories (public databases, data warehouses, data hosting centres) are subject- or institution-oriented infrastructures, usually based at large national or international institutions. These provide data storage and preservation according to widely accepted standards, and provide free access to their data holdings for anyone to use and re-use under the minimum requirement of attribution, or under an open data waiver such as the CC0 waiver. We do NOT include here and do NOT recommend for use repositories which provide data after permission or by other methods of human-controlled registration.

Advantages of depositing data in internationally recognised repositories include:

- Visibility: Making your data available online (and linking it to the publication) provides an independent way for others to discover your work.

- Citability: all data you deposit will receive a persistent, resolvable identifier that can be used in a citation, as well as listed on your CV.

- Workload reduction: if you receive individual requests for data, you can simply direct them to the files in Dryad.

- Preservation: your data files will be safely archived in perpetuity.

- Impact: other researchers have more opportunities to use and cite your work.

There are several directories of data repositories relevant to biodiversity and ecological data, such as re3data, or those listed in the Open Access Directory, or in Table 2 of

A very useful resource that puts together information on journal data policies, repositories, and standards grouped by domain, type of data, and organisation is BioSharing (

Such repositories could be used to host data associated with a published data paper, as explained below. For their own data, authors are advised to use an internationally recognised, trusted (normally ISO-certified), specialized repository (see

- PANGAEA. The information system PANGAEA operates as an open data repository aimed at archiving, publishing and distributing georeferenced data from earth system research. Each dataset can be identified and cited by using a DOI. Data are archived as supplements to publications or as citable data collections. Data citations are available through the portal of the German National Library of Science and Technology (GetInfo). Data management and archiving policies follow the recommendations of the Commission on Professional Self Regulation in Science, the ICSU Data Sharing Principles, and the OECD Principles and Guidelines for Access to Research Data from Public Funding. PANGAEA is open to any project or individual scientist to archive and publish data. Data submission can be started here.

- The Knowledge Network for Biocomplexity (KNB) is a USA-based national network intended to facilitate data management and preservation in ecological and environmental research. For scientists, the KNB is an efficient way to discover, access, interpret, integrate and analyze complex ecological data from a highly-distributed set of field stations, laboratories, research sites, and individual researchers.

- DataBasin is a free system that connects the users with spatial datasets, non-technical tools, and a network of scientists and practitioners. One can explore and download a vast library of datasets, upload and publish own data, create working groups, and produce customized maps that can be easily shared.

- DataONE provides the distributed framework, management, and robust technologies that enable long-term preservation of diverse multi-scale, multi-discipline, and multi-national observational data. DataONE initially emphasises observational data collected by biological (genome to ecosystem) and environmental (atmospheric, ecological, hydrological, and oceanographic) scientists, research networks, and environmental observatories.

- Dataverse is an open source web application to share, preserve, cite, explore, and analyze research data. A Dataverse repository is the software installation, which then hosts multiple dataverses. Each dataverse contains datasets, and each dataset contains descriptive metadata and data files (including documentation and code that accompany the data). As an organising method, dataverses may also contain other dataverses. Some dataverses may have non-CC0 data, for example the Singaporean NTU dataverse has CC-BY-NC licensed data by default.

- Protocols.io is an open access platform for publishing, sharing and finding life science research protocols.

Taxonomy

There are several aggregators and registries of taxonomic data, which differ in their content, policies and methods of data submisison.

- Catalogue of Life (CoL) is a comprehensive and authoritative global index of species and their associated taxonomic hierarchy. The Catalogue holds essential information on the names, relationships and distributions of over 1.6 million species, continuosly compiled from 158 contributing databases around the world (as of February 2017). The Catalogue of Life is led by Species 2000, working in partnership with the Integrated Taxonomic Information System (ITIS). Authors can submit their global taxon checklists to the CoL editors following a template and guidelines.

- Integrated Taxonomic Information System (ITIS) is established by several federal agencies of the USA to provide an authoritative taxonomic information on species of plants, animals, fungi, and microbes, and their hierarchical classification, with a focus on North America. Potential contributors to ITIS are encouraged to view the ITIS Submittal Guidelines.

- NCBI Taxonomy serves as a taxonomic backbone for the National Center for Biotechnology Infomation of the USA (NCBI), and its services, for example GenBank. NCBI Taxonomy is not a single taxonoimic treatise, but rather a compiler of taxonomic information from a variety of sources, including the published literature, web-based databases, and the advice of sequence submitters and outside taxonomy experts.

-

International Plant Name Index (IPNI) is a collaborative effort between The Royal Botanic Gardens, Kew, the Harward University Herbaria, and the Australian National Herbarium to provide a single point of registration and reference for the names and associated basic bibliographical details of seed plants, ferns and lycophytes. The data are gathered and curated by a team of editors from the published literature and are freely available to the community. The pre-publication indexing of new plant taxa and nomenclatural acts in IPNI and inclusion of the IPNI identifiers in the original descriptions (protologues) was first trialled and made a routine practice in the journal PhytoKeys since the publication of its first issue in 2011 (

Kress and Penev 2011 ,Knapp et al. 2011 ). Later, Pensoft created an automated registration pipeline for new names in IPNI (Penev et al. 2016 ). -

MycoBank is the leading online database, established by the International Mycological Association (IMA), for documenting new names and combinations of fungy, and associated data, for example descriptions and illustrations. The nomenclatural novelties are assigned a unique MycoBank identifier that can be cited in the publication where the nomenclatural novelty is introduced. These identifiers are also used by the nomenclatural database Index Fungorum. As a result of changes to the International Code for Nomenclature of algae, fungi, and plants, ICNafp) (previously International Code for Botanical Nomenclature, ICBN), pre-publication registration of names and inclusion of record identifiers in the published protologues is mandatory since January 1st 2013 (see

Hawksworth 2011 ). - Index Fungorum is a global fungal nomenclator currently coordinated and supported by The Royal Botanic Gardens, Kew. Index Fungorum contains names of fungi (including yeasts, lichens, chromistan fungal analogues, protozoan fungal analogues and fossil forms) at all ranks. Index Fungorum now provides a mechanism to register names of new taxa, new names, new combinations and new typifications following the changes to the ICNafp (see above).

-

ZooBank is the Official Register of the International Commission on Zoological Nomenclature (ICZN) for registration of new nomenclatural acts, published works, and authors. Since 1st of January 2012, pre-publication registration in ZooBank has become mandatory for electronic-only publications (

International Commission on Zoological Nomenclature 2012 ). The Pensoft journal ZooKeys was the first to apply a mandatory registration of new zoological names at ZooBank since the publication of its first issue in 2008 (Penev et al. 2008 ). Authors publishing nomenclatural novelties in Pensoft journals do not have to deal with registration of these at ZooBank because it is provided in-house, through an automated pipeline (Penev et al. 2016 ). - PaleoBiology Database is a public resource whose purpose is to provide global, collection-based occurrence and taxonomic data for marine and terrestrial animals and plants of any geological age, as well as web-based software for statistical analysis of the data. The project's wider, long-term goal is to encourage collaborative efforts to answer large-scale paleobiological questions by developing a useful database infrastructure and bringing together large data sets. There is an option to protect data for private use only.

-

TreatmentBank is a resource that stores and provides access to taxonomic treatements and data therein, extracted from the literature. TreatmentBank is established by Plazi, who also provide a tool for text mining and data extraction called GoldenGATE Document Editor. Authors who publish in Pensoft's journals do not have to deal with deposition of their taxonomic treatments to Plazi, as the latter are harvested automatically using the TaxPub extension to the Journal Archival Tag Suite (JATS) (

Catapano 2010 ,Penev et al. 2012 ).

Species-by-Occurrence and Sample-Based data

The Global Biodiversity Information Facility (GBIF) was established in 2001 and is now the world's largest multilateral initiative for enabling free and open access to biodiversity data via the Internet. It comprises a network of 54 countries and 39 international organisations that contribute to its vision of "a world in which biodiversity information is freely and universally available for science, society, and a sustainable future". It seeks to fulfil this mission by promoting an international data infrastructure through which institutions can publish data according to common standards, thus enabling research that had not been possible before. The GBIF network facilitates access to over 704 million species occurrences in 30,894 datasets sourced from 867 data-publishing institutions (as of January 2017).

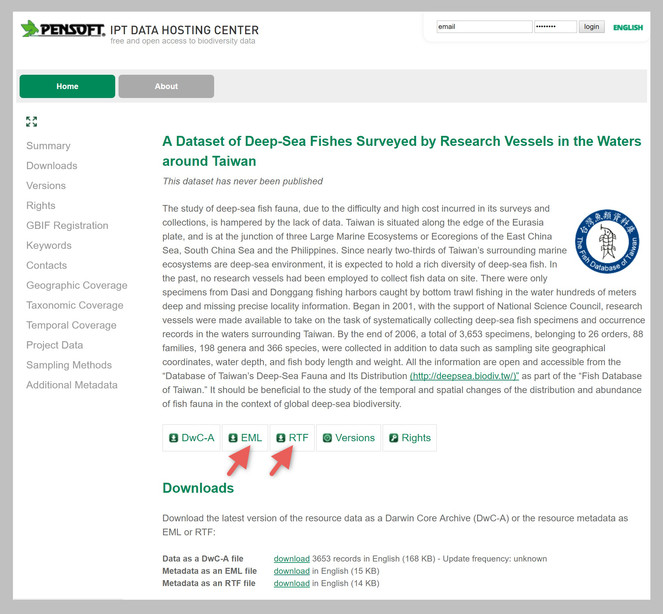

GBIF is not a repository in the strict sense, but a distributed network of data publishers and local data hosting centres that publish data based on community-agreed standards for exchange/sharing of primary biodiversity data. At a global scale, discovery and access to data is facilitated through the GBIF data portal. Pensoft facilitates publishing of data and metadata to the GBIF network through Pensoft’s IPT Data Hosting Center, which is based on the GBIF Integrated Publishing Toolkit (IPT) (

The Darwin Core Archive (DwC-A) (see also http://rs.tdwg.org/dwc/terms/guides/text/index.htm and

- One or more data files keeping all records of the particular dataset in a tabular format such as a comma-separated or tab-separated list;

- The archive descriptor (meta.xml) file describing the individual data file columns used, as well as their mapping to DwC terms; and

- A metadata file describing the entire dataset which GBIF recommends to be based on EML (Ecological Metadata Language 2.1.1).

The format is defined in the Darwin Core Text Guidelines. Darwin Core is no longer restricted to occurrence data, and together with the more generic Dublin Core metadata standard (on which its ideas are based), it is used by GBIF and others to encode data about organism names, taxonomies, species information, and, more recently, sample data (i.e., data from ecological/environmental investigations that are typically quantitative and adhere to standardised protocols, so that changes and trends in populations can be detected).

GBIF has produced a series of documents and supporting tools that focus primarily on data publishing using the Darwin Core standard. Guides are available for publishing:

Besides the GBIF Integrated Publishing Toolkit, there are two additional tools developed for producing Darwin Core Archives:

- The Darwin Core Archive Spreadsheet Processor provides a set of MS Excel templates, which are coupled with a web service that processes completed files and returns a validated Darwin Core Archive. Templates exist for primary biodiversity data, simple checklists, and EML metadata. See http://tools.gbif.org/spreadsheet-processor/ for further details.

- The Darwin Core Archive Assistant is a browser-based tool that composes an XML metafile, the only XML component of a Darwin Core Archive. It displays a drop-down list of Darwin Core and extension terms, accessed dynamically from the GBIF registry, and displays these to the user who describes the data files. This allows Darwin Core Archives to be created for sharing without the need to install any software. See: http://tools.gbif.org/dwca-assistant/ for details.

The Darwin Core Archive (DwC-A) files can be used to publish data underlying any taxonomic revision or checklist through the GBIF IPT or as supplementary files (see

As of version 2.2, the GBIF IPT incorporates use of DOIs allowing data publishers to automatically connect with either DataCite or EZID for DOI assignment. GBIF will issue DOIs for all newly published datasets where absent while recognizing and displaying publisher-assigned DOIs for existing datasets. The GBIF IPT now also requires publishers to select one of three standardised machine-readable data waivers or licenses (CC0, CC-BY, CC-BY-NC) for their data to clarify the conditions for re-use.

Images

Images can be deposited at generic repositories, such as Zenodo, figshare or Flickr. There are also specialized repositories for biodiversity images:

- Morphbank is a database of images and metadata used for international collaboration, research and education. Images deposited in Morphbank :: Biological Imaging document a wide variety of research including: specimen-based research in comparative anatomy, morphological phylogenetics, taxonomy and other biodiversity-related fields.

- Morphosource is a project-based data archive that allows researchers to store and organize, share, and distribute 3D data, for example raw microCt data and surface meshes representing vouchered specimens. File formats include tiff, dicom, stanford ply, and stl.

- Biodiversity Literature Repository (BLR) at Zenodo (see details in the section Biodiversity Literature below).

Phylogenies

There are relatively few repositories dealing with phylogentic data, of which we recommend the following:

-

TreeBASE is a repository of phylogenetic information, specifically user-submitted phylogenetic trees and the data used to generate them. TreeBASE accepts all types of phylogenetic data (e.g., trees of species, trees of populations, trees of genes) representing all biotic taxa. Data in TreeBASE are exposed to the public if they are used in a publication that is in press or published in a peer-reviewed scientific journal, book, conference proceedings, or thesis. Data used in publications that are in preparation or in review can be submitted to TreeBASE but are embargoed until after publication, and only available before publication to the publication editors or reviewers using a special access code. We also recommend following the best practices for sharing and publishing phylogenies, as detailed in

Stoltzfus et al. (2012) andCranston et al. (2014) . - MorphoBank is an online database and workspace for images and affiliate data with those images (labels, species names, etc.) used in evolutionary research in systematics. MorphoBank provides a platform for live collaboration on phylоgenetic matrices by teams in a private workspace where they can interlink images with phylogenetic matrices. MorphoBank stores: phylogenetic matrices (Nexus or TNT format), 2D (including JPEG, GIF, PNG, TIFF and Photoshop) and 3D (PLY, STL, ZIP, TIFF and DCM) image data and video (MPEG-4, QuickTime and WindowsMedia). MorphoBank also offers a Documents folder for additional files related to the research, such as PDFs, Word documents, and text files (e.g., morphometric data, phylogenetic trees).

Gene Sequence

Pensoft journals collaborate with four repositories for genomic data, albeit with the assumption that no matter where gene sequence data will be deposited, they should finally be submitted also to GenBank. Data and metadata formatting should comply with the Genomic Standards Consortium (GSC) sample metadata guidelines respectively, allowing data interoperability across the wider genomics community. Inclusion of the hyperlinked accession numbers in the article is a prerequisite for publication in Pensoft journals. The most important repositories for genomic data are:

- International Nucleotide Sequence Database Collaboration (INSDC), mostly known through its founding partner, the NCBI GenBank. GenBank is a genetic sequence database, an annotated collection of all publicly available DNA sequences. Hosted at the National Center for Biotechnology Information (NCBI) at the National Library of Medicine (NLM) under the umbrella of the National Institutes of Health (NIH), GenBank is part of the International Nucleotide Sequence Database Collaboration (INSDC), which also comprises the DNA DataBank of Japan (DDBJ) and the European Bioinformatics Institute (EBI), which is part of the European Molecular Biology Laboratory (EMBL-EBI). Raw sequencing data in particular needs to be deposited in one of the INSDC data repositories, such as the NCBI Sequence Read Archive (SRA), the EBI European Nucleotide Archive (ENA), or the DDBJ Sequence Read Archive (DRA). These three organizations exchange data on a daily basis. They also handle assembled and annotated sequence data, as well as host a number of linked databases that handle more processed data types like variation data (with both the EBI and NCBI handling genetic and structural variants). An example of a GenBank record for a Saccharomyces cerevisiae gene may be viewed here. There are several options for submitting data to GenBank.

- The Barcode of Life Data System (BOLD) is established at the University of Guelph as "an informatics workbench aiding the acquisition, storage, analysis, and publication of DNA barcode (mostly COI) records" (

Ratnasingham and Hebert 2007 ). BOLD have an agreement with GenBank for deposition of barcode (COI) sequences in GenBank as well through a web-based Barcode Submission Tool. - PlutoF Biodiversity Platform has been developed originally as a data management platform for barcode data based mostly on the Internal Transcribed Spacer (ITS) region as most suitable for the identification of fungi. Recently PlutoF was developed into a platform to "create, manage, share, analyse and publish biology-related databases and projects". PlutoF have an agreement with GenBank for deposition of gene sequences in GenBank.

Best practice recommendations for biodiversity genomic data

- Always aim at depositing data before submission of the manuscript, so that they can be linked to and from the manuscript and are made freely available for peer-review. Even if not yet public during the review process, reviewer access is available via NCBI.

- Gene sequences should always be published in GenBank, either directly or through INSDC, even if they are openly available in other repositories.

- A paper dealing with gene sequences should always contain the GenBank accession numbers, and where possible should use the BioProject accession (formatted PRJxxxxx) as well.

- When including gene sequences deposited in other repositories, authors should provide hyperlinked identifiers (e.g. accession numbers) of those records in the manuscript text.

- It is strongly recommended to publish large genomic databases, or separate species genomes, or barcode reference libraries in the form of data papers or "BARCODE data release papers". A BARCODE data release paper is a short manuscript that announces and documents the public deposit to a member of the INSDC of a significant body of data records that meets the BARCODE data standards (for examples, see

Rougerie et al. 2015 ,Schindel et al. 2011 ).

Protein Sequence

- The Protein Data Bank (PDB) created by the the Research Collaboratory for Structural Bioinformatics (RCSB) contains information about experimentally-determined structures of proteins, nucleic acids, and complex assemblies. As a memberof the Worldwide Protein Data Bank (wwPDB), the RCSB PDB curates and annotates PDB data according to agreed standards. See its data deposition policies and services.

- The Universal Protein Resource (UniProt) is a comprehensive resource for protein sequence and annotation data. The UniProt databases are the UniProt Knowledgebase (UniProtKB), the UniProt Reference Clusters (UniRef), and the UniProt Archive (UniParc).

Genomics

- ArrayExpress (EMBL-EBI) and the NCBI Gene Expression Omnibus (NCBI GEO) are archives for data from high-throughput functional genomics experiments such as microarrays and sequencing based approaches such as RNA-seq, miRNA-seq, ChIP-seq, methyl-seq, etc. Data are collected to MIAME (Minimum Information About a Microarray Experiment) and MINSEQE (Minimum Information about a high-throughput SEQuencing Experiment) standards. Experiments are submitted directly to ArrayExpress or are imported from the NCBI GEO database and vice versa. ArrayExpress and NCBI GEO are strongly recommended for deposition of species genomes or transcriptomes, metagenomic and other biodiversity-related functional genomics data. For high-throughput sequencing based experiments the raw data is brokered to the EBI or GenBank, while the experiment descriptions and processed data are archived in these databases.

- EBI Metagenomics (EMBL-EBI) is a pipeline for the analysis and archiving of metagenomic data automatically archived in the European Nucleotide Archive (ENA) and intended for public release.

-

GigaDB primarily serves as a repository to host data and tools associated with articles in the journal GigaScience; however, it also includes a subset of datasets that are not associated with GigaScience articles. GigaDB defines a dataset as a group of files (e.g., sequencing data, analyses, imaging files, software programs) that are related to and support an article or study. An example of multifunctional use of GigaDB or various types of biodiversity data is the paper of

Stoev et al. (2013) and its associated editorial (Edmunds et al. 2013 ).

Other Omics

Metabolomics

Metabolomics data should be deposited in any of the member databases of the Metabolomexchange data aggregation and notification consortium. Such partners, for example, are the EMBL-EBI MetaboLights repository and the Metabolomics Workbench of NIH, which are data archives for metabolomics experiments and derived information.

Proteomics

Proteomics data should be deposited in any of the members of the ProteomeXchange consortium and following the MIAPE (The Minimum Information About a Proteomics Experiment) guidelines. The founding members of ProteomeXchange are Pride, the PRoteomics IDEntifications Database at the EMBL-EBI and PeptideAtlas, part of the Institute of Systems Biology in Seattle, USA. The other two repositories at ProteomeXchange are MassIVE and jPost.

Various Data Types

Dryad Data Repository

Pensoft encourages authors to deposit data underlying biological research articles in the Dryad Data Repository in cases where no suitable more specialized public data repository (e.g., GBIF for species-by-occurrence data and taxon checklists, or GenBank for genome data) exists. Dryad is particularly suitable for depositing data packages consisting of different types of data, for example datasets of species occurrences, environmental measurements, and others.

Pensoft supports Dryad and its goal of enabling authors to publicly archive sufficient data to support the findings described in their journal articles. Dryad is a safe, sustainable location for data storage, and there are no restrictions on data format. Note that data deposited in Dryad are made available for re-use through the Creative Commons CC0 waiver, detailed above.

Data deposition in Dryad is a subject to a small charge that the authors or their institutions should regulate directly with Dryad.

Data can be deposited with Dryad either before or at the time of submission of the manuscript to the journal, or after the manuscript acceptance but before submission of the finally revised, ready-for-layout version for publication. Nonetheless, the authors should always aim at depositing data before submission of the manuscript, so that they can be linked both from and to the manuscript and made freely available for peer-review.

The data deposition at Dryad is integrated with the workflow in Pensoft's ARPHA Journal Publishing System. The acceptance letters automatically generated by email by Pensoft's journals on the day of acceptance of a manuscript contain instructions on how to upload data underpinning the article to Dryad, if desired by the authors (see this blog post for details).

Once you deposit your data package, it receives a unique and stable identifier, namely a DataCite DOI. Individual data files within this package are given their own DOIs, based on the package DOI, as do subsequent versions of these data files, as explained under DOI usage on the Dryad wiki. You should include appropriate Dryad DOIs in the final text of the manuscript, both in the in-text citation statement in the Data Resources section and in the formal data reference in your paper's reference list, as explained and exemplified above. This is very important, since if the data DOI does not appear in the final published article, that greatly weakens its connection to the underlying data.

More information about depositing data in Dryad can be found at http://www.datadryad.org/repo/depositing.

You may wish to take a look at some example data packages in Dryad to see how data packages related to published articles are displayed, such as doi: 10.5061/dryad.7994 and doi: 10.5061/dryad.8682.

Data deposited in Dryad in association with Pensoft journal articles will be made public immediately upon publication of the article.

Zenodo

Zenodo is a research data repository launched in 2013 by the EU-Funded OpenAIRE project and CERN to provide a place for researchers to deposit datasets of up to 50 GB in any subject area. Zenodo code is open source, and is built on the foundation of the Invenio digital library which is also open source. The work-in-progress, open issues, and roadmap are shared openly in GitHub, and contributions to any aspect are welcomed from anyone. All metadata is openly available under CC0 waiver, and all open content is openly accessible through open APIs.

Zenodo assigns a DataCite DOI to each stored research object, or uses the original DOIs of the articles or research objects, if available. Scientists may use Zenodo to store any kind of data that can thereafter be linked to and cited in research articles.

The repository allows non-open-access materials to be uploaded but not displayed in public, except for their metadata which are freely available under the CC0 waiver.

Biodiversity Literature

Biodiversity Heritage Library (BHL) is a searchable archive of scanned public domian books and journals. Originally BHL was focusing mostly on the historical biodiversity literature, however now it is possible to incorporate also materials that are still under copyright through agreements with publishers. Pensoft journals harvest the BHL content for mentions of taxon names and display the original sources through the Pensoft Taxon Profile tool. Bibliographical metadata of the articles published in Pensoft's journals are submitted to BHL on the day of publication. On the top of the BHL content, Roderick Page from the University of Glasgow built BioStor as an open source application that searches and displays the BHL articles by article metadata and individual pages.

The Biodiversity Literature Repository (BLR) is an open community repository at Zenodo built by Plazi and Pensoft to archive articles, images and data in the biodiversity domain. Plazi uploads article PDFs and other materials extracted from legacy literature through their GoldenGATE Imagine tool. Pensoft journals are automatically archiving in BLR all biodiversity-related articles, supplementary files and individual images, through Web services, on the day of publication. The uploaded materials are archived at Zenodo under their own DOIs, if exisiting, or are assigned Zenodo DOIs.

The Bibliography of Life (BoL) was created by the EU FP7 project ViBRANT to search, retrieve and store bibliographic references and is currently maitained by Pensoft and Plazi. BoL consists of the search and discovery tool ReFindit and a repository for bibliographic references harvested from the literature, RefBank.

Guidelines for Authors

Data Published within Supplementary Information Files

Online publishing allows an author to provide data sets, tables, video files, or other information as supplementary information files associated with papers, or to deposit such files in one of the repositories described above, which can greatly increase the impact of the submission. For larger biodiversity datasets, authors should consider the alternative of submitting a separate data paper (see description below).

Submission of data to a recognised data repository is encouraged as a superior and more sustainable method of data publication than submission as a supplementary information file with an article. Nevertheless, Pensoft will accept supplementary information files if authors wish to submit them with their articles and demonstrate that no suitable repository exists. Details for uploading such files are given in Step 4 of the Pensoft submission process (example from ZooKeys) available through the “Submit a Manuscript” button on any of the Pensoft journal websites.

By default, the maximum file size for each supplementary information file that can be uploaded onto the Pensoft web site is 50 MB. If you need more than that, or wish to submit a file type not listed below, please contact Pensoft's editorial office before uploading.

When submitting a supplementary information file, the following information should be completed:

- File format (including name and a URL of an appropriate viewer if the format is unusual).

- Title of the supplementary information file (the authorship will be assumed to be the same as for the paper itself, unless explicitly stated otherwise).

- Description of the data, software listings, protocols or other information contained within the supplementary information file.

All supplementary information files should be referenced explicitly by file name within the body of the article, e.g. “See Supplementary File 1: Movie 1 for a recording of the original data used to perform this analysis”.

The ARPHA Writing Tool and the journals currently based on it (Biodiversity Data Journal, Research Ideas and Outcomes, One Ecosystem, and BioDiscovery) provide the functionality to cite the supplementary materials through in-text citations in the same way as figures, tables or references are cited.

Ideally, the supplementary information file formats should not be platform specific, and should be viewable using free or widely available tools. Suitable file formats are:

For supplementary documentation:

- RTF (Rich Text Format)

- PDF (Adobe Acrobat; ISO 32000-1)

- HTML (Hypertext Markup Language)

- XML (Extensible Markup Language)

For animations:

- SWF (Shockwave Flash)

- DHTML (Dynamic HTML)/HTML5

For images:

- SVG (Scalable Vector Graphics)

- GIF (Graphics Interchange Format)

- JPEG/JFIF (JPEG File Interchange Format)

- PNG (Portable Network Graphics)

- TIFF (Tagged Image File Format)

For movies:

- MOV (QuickTime)

- MPG (MPEG)

- OGG (an open and free multimedia container format)

- WebM (an open and free multimedia container format)

For datasets:

- CSV (Comma separated values)

- TSV (Tab separated values)

The file names should use the standard file extensions (as in “Supplementary-Figure-1.png”). Please also make sure that each supplementary information file contains one particular data type, or is of a single table, figure, image or video.

To facilitate comparisons between different pieces of evidence, it is common to produce composite figures or to concatenate originally separate recordings into a single audio or video file. We do not recommend such practice, since it is often simpler to just open the two (or more) raw files in question and to appreciate and manipulate them side by side, and such concatenation is a barrier to re-use. Likewise, we do not recommend to provide metadata in non-editable ways (e.g., adding a letter or an arrow into bitmap images or video frames), which complicates re-use too (e.g. translation into another language, or zooming in for additional details).

Best practice recommendations

- Open data formats should be preferred over proprietary ones (for example, for spreadsheets, CSV should always be preferred over XLS).

- Always follow community-accepted standards within the respective scientific domain (if such exist) when formatting data files, because this will make your data interoperable with other data in the same domain.

- To maximise interoperability, plain-text data files should be UTF-8 encoded with no embedded line breaks.

- For species-by-occurrence data, the authors are strongly encouraged to publish these through the GBIF Integrated Publishing Tookit (see above) first, then link to the data in the "Data resources" section of the article and also cite the dataset in the reference section via its GBIF DOI or the GBIF IPT unique HTTP identifier. In addition, authors may also publish the same data as supplementary files to the article in Darwin Core Archive. The Darwin Core Archive of the data can be downloaded from the GBIF IPT or created in another way.

- For species-by-occurrence data published as supplementary files to the article, authors should use a Darwin Core compliant spreadsheets or tabular text files (http://arpha.pensoft.net/lib/files/Species_occurrence-1_v1_DwC_Template.xls).

Import of Darwin Core Specimen Records into Manuscripts

This specific functionality is available in the ARPHA Writing Tool (AWT) and currently being used in the "Materials" subsection of the "Taxon treatment" section in the "Taxonomic paper" template of the Biodiversity Data Journal. Darwin Core compliant specimen records can be imported into structured format in the manuscript text in three ways:

- manually through the Darwin Core compliant HTML editor embedded in the AWT,

- from a Darwin Core compliant spreadsheet template (for example, from an Excel spreadsheet; the template is available in the AWT through the link http://arpha.pensoft.net/lib/files/Species_occurrence-1_v1_DwC_Template.xls),

- automatically, through web services from online biodiversity data platforms (GBIF, Barcode of Life, iDigBio, and PlutoF).

While the first two methods of data import speak for themselves and one could easily implement them following the instructions on the user interface, the third one deserves a more detailed description, as it is still unique in the data publishing landscape.

The workflow has been thoroughly described from the user's perspective in a blog post and in the paper of

- At one of the supported data portals (GBIF, Barcode of Life, iDigBio, and PlutoF), the author locates the specimen record he/she wants to import into the Materials section of a Taxon treatment (available in the Taxonomic Paper manuscript template in the Biodiversity Data Journal).

- Depending on the portal, the user finds either the occurrence identfier of the specimen, or a database record identifier of the specimen record, and copies that into the respective upload field of the ARPHA system (Fig.

3 ). - After the user clicks on "Add," a progress bar is displayed, while the specimens are being uploaded as material citations.

- The new material citations are rendered in both human- and machine-readable DwC format in the Materials section of the respective Taxon treatment and can be further edited in AWT, or downloaded from there as a CSV file.

Data Published in Data Papers

What is a data paper

A data paper is a scholarly journal publication whose primary purpose is to describe a dataset or a group of datasets, rather than to report a research investigation (

- to provide a citable journal publication that brings scholarly credit to data creators,

- to describe the data in a structured human-readable form, and

- to bring the existence of the data to the attention of the scholarly community.

The description should include several important elements (usually called metadata, or “description of data”) that document, for example, how the dataset was collected, which taxa it covers, the spatial and temporal ranges and regional coverage of the data records, provenance information concerning who collected and who owns the data, details of which software (including version information) was used to create the data, or could be used to view the data, and so on.

Most Pensoft journals welcome submission and publication of data papers, that can be indexed and cited like any other research article, thus bringing registration of priority, a permanent publication record, recognition, and academic credit to the data creators. In other words, the data paper is a mechanism to acknowledge efforts in authoring ‘fit-for-use’ and enriched metadata describing a data resource. The general objective of data papers in biodiversity science is to describe all types of biodiversity data resources, including environmental data resources.

An important feature of data papers is that they should always be linked to the published datasets they describe, and that link (a URL, ideally resolving a DOI) should be published within the paper itself. Conversely, the metadata describing the dataset held within data archives should include the bibliographic details of the data paper once that is published, including a resolvable DOI. Ideally, the metadata should be identical in the two places — the data paper and the data archive — although this may be difficult to achieve with some archive metadata templates, so that there may be two versions of the metadata. This is why referring to the the data paper DOI is so important.

How to write and submit a data paper

In principle, any valuable dataset hosted in a trusted data repository can be described in a data paper and published following these Guidelines. Each data paper consists of a set of elements (sections), some of which are mandatory and some not. An example of such a list of elements needed to describe primary biodiversity data is available in the section data papers Describing Primary Biodiversity Data below.

Sample data papers which can be used as illustration of the concept can be downloaded from several Pensoft journals, for example, ZooKeys (examples), or Biodiversity Data Journal (examples).

All claims in a data paper should be substantiated by the associated data. If the methodology is standard, please explain in what respects your data are unique and merit a publication in the form of a data paper.

Alternatively, if the methodology used to acquire the data differs significantly from established approaches, please consider submitting your data to an open repository and associating them with a standard or data paper, in which these methodologies can be more fully explained.